
EFF has long raised concerns about acoustic gunshot detection systems, including their recording the conversations of unsuspecting people in public places. In 2021, in light of a number of reports discussing the harmful effects of ShotSpotter alerts on policing and questions about their accuracy, EFF recommended that cities not use the technology.

Governments have also started developing some risk assessment guidelines for the use of AI-based technologies and solutions. However, these guidelines are limited to risks such as algorithmic bias and violation of individual rights.

Until now, AI hazards and risks have rarely been added to risk assessment matrices beyond the organizational use of AI applications. The time has come to start bringing potential AI risks into local, national and global risk and emergency management.

While we should collectively and proactively pursue such governance mechanisms, we all need to brace for the potentially catastrophic impacts of AI on our systems and societies.

Moreover, gunshot detection systems can record human voices, and police have used these recordings as evidence in court. As is so often the case with police surveillance technologies, a device initially deployed for one purpose (here, to detect gunshots) has been expanded to another purpose (to spy on conversations with sensitive microphones).

There are grave concerns about the accuracy of acoustic gunshot detection systems, especially erroneous conclusions that a gun was fired (“false positives”). For example, a study by Chicago's Office of the Inspector General found that fewer than 10% of ShotSpotter alerts resulted in evidence of a gun-related criminal offense. In one 2017 case, a ShotSpotter forensic analyst testified that the sensors incorrectly placed the location of a shooting a block away from where it actually occurred. When asked about the company’s guarantee of accuracy, the analyst said, “Our guarantee was put together by our sales and marketing department, not our engineers.”

Moreover, acoustic gunshot detection systems are often placed in what police consider to be high-crime areas. As with many police surveillance systems, this can result in excessive scrutiny of the neighborhoods where people of color may live.

For the most part, the focus of contemporary emergency management has been on natural, technological and human-made hazards such as flooding, earthquakes, tornadoes, industrial accidents, extreme weather events and cyber attacks.

The main objective of the directive is to ensure that when AI systems are deployed, risks to clients, federal institutions and Canadian society are reduced. According to this directive, risk assessments must be conducted by each department to make sure that appropriate safeguards are in place in accordance with the Policy on Government Security.

False positives can also contribute to wrongful searches and seizures. Chicago’s Office of the Inspector General also found a pattern of CPD officers detaining and frisking civilians (a dangerous and humiliating intrusion on bodily autonomy and freedom of movement) based at least in part on “aggregate results of the ShotSpotter system.”

AI hazards can be classified into two types: intentional and unintentional. Unintentional hazards are those caused by human errors or technological failures.

Many AI experts have already warned against such potential threats. A recent open letter by researchers, scientists and others involved in the development of AI called for a moratorium on its further development.

Hazards that have low frequency and low consequence or impact are considered low risk and no additional actions are required to manage them. Hazards that have medium consequence and medium frequency are considered medium risk. These risks need to be closely monitored.

Hazards with high frequency, high consequence, or both are classified as high risks. These risks need to be reduced by taking additional risk reduction and mitigation measures. Failure to take immediate and proper action may result in severe human and property losses.
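The matrix rules above can be expressed as a small classification function. This is a hedged sketch, not a standard from any agency: the three-level scale and the names `Level`, `classify_risk` and `ACTIONS` are hypothetical, and treating mixed low/medium cells as medium risk is an assumption, since the text only specifies the low/low, medium/medium and high cases.

```python
from enum import IntEnum


class Level(IntEnum):
    """Three-level rating used for frequency, consequence and resulting risk."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Management response for each risk level, paraphrasing the text above.
ACTIONS = {
    Level.LOW: "no additional action required",
    Level.MEDIUM: "monitor closely",
    Level.HIGH: "apply additional risk reduction and mitigation measures",
}


def classify_risk(frequency: Level, consequence: Level) -> Level:
    """Classify a hazard from its frequency and consequence ratings (hypothetical helper)."""
    # High frequency, high consequence, or both: high risk.
    if Level.HIGH in (frequency, consequence):
        return Level.HIGH
    # Low frequency and low consequence: low risk.
    if frequency == Level.LOW and consequence == Level.LOW:
        return Level.LOW
    # Medium/medium is medium per the text; mixed low/medium cells are
    # also treated as medium here, which is an assumption.
    return Level.MEDIUM


if __name__ == "__main__":
    # Print the full 3x3 matrix with the suggested action for each cell.
    for f in Level:
        for c in Level:
            risk = classify_risk(f, c)
            print(f"frequency={f.name:<6} consequence={c.name:<6} -> {risk.name}: {ACTIONS[risk]}")
```

Checking "high" first mirrors the text's rule that either dimension being high is enough to make a hazard high risk.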

Raytheon produces the Boomerang system, which detects small arms fire. It can be mounted on a vehicle and has been used in U.S. warzones like Afghanistan. Other companies, such as the startup Aegis, rely more heavily on artificial intelligence and visual gun recognition to identify potential shooting situations. Some products, like BodyWorn from Utility, Inc., focus solely on gunshot detection for use in schools.

As the use of AI increases, there will be more adverse events caused by human error in AI models or technological failures in AI based technologies. These events can occur in all kinds of industries including transportation (like drones, trains or self-driving cars), electricity, oil and gas, finance and banking, agriculture, health and mining.

According to ShotSpotter, the largest vendor of acoustic gunshot technology, the automated system’s match between a noise and a gunshot signature is verified by human acoustic experts to confirm the sound is really gunfire, and not a car backfire, firecrackers, or other similar sounds. It’s up to people, listening on headphones, to say whether or not shots were fired.

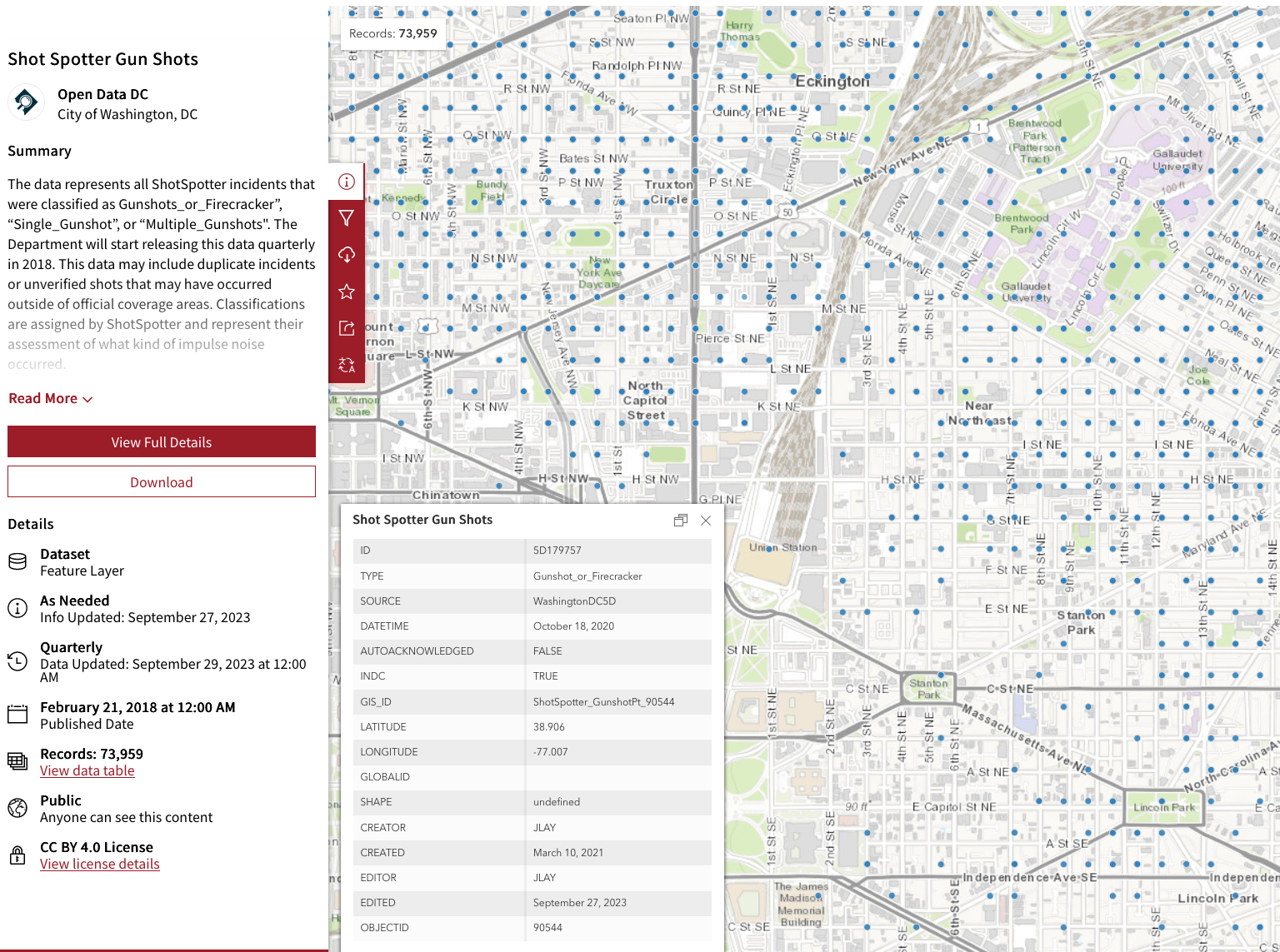

Acoustic gunshot detection is a system designed to detect, record, and locate the sound of gunfire and then alert law enforcement. The equipment usually takes the form of sensitive microphones and sensors, some of which must always be listening for the sound of gunshots. They are often accompanied by cameras. They are usually mounted on street lights or other elevated structures, though some are mobile and others operate indoors.

Ali Asgary does not work for, consult, own shares in or receive funding from any company or organisation that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.

In 2021, the U.S. Congress tasked the National Institute of Standards and Technology with developing an AI risk management framework for the Department of Defense. The proposed voluntary AI risk assessment framework recommends banning the use of AI systems that present unacceptable risks.

AI technologies are becoming more widely used by institutions, organizations and companies in different sectors, and hazards associated with AI are starting to emerge.

Professor, Disaster & Emergency Management, Faculty of Liberal Arts & Professional Studies & Director, CIFAL York, York University, Canada

However, the latest Global Risks Report 2023 does not even mention AI and AI-associated risks, which suggests that the leaders of the global companies who provide input to the report did not view AI as an immediate risk.

Finally, this technology captures human voices, at least some of the time. Yet people in public places, for example those having a quiet conversation on a deserted street, often have a reasonable expectation of privacy. People should be free of overhead microphones unexpectedly recording their conversations.

In 2018, the accounting firm KPMG developed an “AI Risk and Controls Matrix.” It highlights the risks of AI use by businesses and urges them to recognize these new, emerging risks. The report warned that AI technology is advancing very quickly and that risk control measures must be in place before these technologies overwhelm the systems.

Public safety and emergency management experts use risk matrices to assess and compare risks. Using this method, hazards are qualitatively or quantitatively assessed based on their frequency and consequence, and their impacts are classified as low, medium or high.

In its 2017 Global Risks Report, the World Economic Forum highlighted that AI is only one of several emerging technologies that can exacerbate global risk. While assessing the risks posed by AI, the report concluded that, at that time, super-intelligent AI systems remained a theoretical threat.

In my view, this simple intentional/unintentional classification may not be sufficient in the case of AI. Here, we need to add a new class of emerging threats: the possibility of AI overtaking human control and decision-making. This may be triggered intentionally or unintentionally.

At the government level, the Canadian government issued the “Directive on Automated Decision-Making” to ensure that federal institutions minimize the risks associated with AI systems and create appropriate governance mechanisms.

ShotSpotter is by far the largest vendor of acoustic gunshot detection systems. Currently, 100 cities are using ShotSpotter in the United States, South Africa, and the Bahamas. The company earned $34.75 million in revenue in 2018, and in March 2019, ShotSpotter announced it would be offering publicly traded stock.

False positives can cause what may be the biggest threat posed by acoustic gunshot detection: police arriving in an aggressive posture at a location expecting a gunfight, only to find surprised people on the street. As is often the case, police surveillance can lead to police use of excessive force.

Intentional AI hazards are potential threats caused by using AI to harm people and property. AI can also be used to gain unlawful benefits by compromising security and safety systems.

However, with the increase in the availability and capabilities of artificial intelligence, we may soon see emerging public safety hazards related to these technologies that we will need to mitigate and prepare for.

Flock, a company best known for its license plate readers, is now marketing Raven, an audio detection device for monitoring potential gunshots.

Police and the companies that manufacture and sell acoustic gunshot detection systems claim that the point of this technology is to inform police of the location of shots fired more quickly and accurately than relying on witnesses who may or may not call the police. However, reports have questioned the accuracy of acoustic gunshot detection, which can fail to register some actual gunshots while erroneously registering loud noises like fireworks as gunshots. This can change police behavior and put people at risk, for example, by sending police who expect gunfire to a location where there is no gunfire but there are innocent people out in public.

Over the past 20 years, my colleagues and I, along with many other researchers, have been leveraging AI to develop models and applications that can identify, assess, predict, monitor and detect hazards to inform emergency response operations and decision-making.

For reasons such as these, as well as fiscal cost and community backlash, cities like Dayton, Ohio have canceled their contracts for acoustic gunshot detection.

EAGL Technologies is one of the newest companies to enter the gunshot detection market. It claims that its technology is unique because it detects the acoustic energy of a gunshot instead of the audio of a gunshot, though the difference between the two is unclear. EAGL also claims that its system can differentiate between handgun, rifle, and shotgun fire. The company also manufactures vape pen detectors for use in schools, as well as panic buttons, all of which connect to a central control system.

Acoustic gunshot detection relies on a series of sensors, often placed on lamp posts or buildings. If there is a loud noise, the sensors detect it, and the system attempts to determine whether the noise matches the specific acoustic signature of a gunshot. If so, the system sends the time and location of the gunshot to the police. Location can be determined by comparing the times at which the sound reaches sensors in different locations.
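The article does not say how any vendor actually computes location, so the following is only an illustration of the general time-difference-of-arrival idea described above. It assumes a flat 2D area, a constant speed of sound of 343 m/s, noise-free arrival times and hypothetical sensor coordinates in metres; the helper names `arrival_times` and `locate` are invented for this sketch, and it uses a brute-force grid search rather than the faster solvers a real system would likely use. Because only differences relative to the first sensor are compared, the unknown moment the shot was fired cancels out.

```python
import math

SPEED_OF_SOUND = 343.0  # metres per second in air at roughly 20 °C (assumed constant)


def arrival_times(source, sensors, t_fired=0.0, c=SPEED_OF_SOUND):
    """Simulate when a sound fired at `source` at time `t_fired` reaches each sensor."""
    return [t_fired + math.dist(source, s) / c for s in sensors]


def locate(sensors, times, extent=400.0, step=1.0, c=SPEED_OF_SOUND):
    """Estimate the source position by grid search over a square area.

    Each candidate point predicts how much later the sound should reach every
    sensor than sensor 0; the point whose predictions best match the observed
    differences (least squared error) is returned.
    """
    observed = [t - times[0] for t in times]  # emission time cancels out here
    best, best_err = None, math.inf
    n = int(extent / step)
    for i in range(n + 1):
        for j in range(n + 1):
            point = (i * step, j * step)
            d0 = math.dist(point, sensors[0])
            err = sum(
                ((math.dist(point, s) - d0) / c - dt) ** 2
                for s, dt in zip(sensors, observed)
            )
            if err < best_err:
                best, best_err = point, err
    return best


if __name__ == "__main__":
    # Hypothetical sensors on four lamp posts at the corners of a 400 m square.
    sensors = [(0.0, 0.0), (400.0, 0.0), (0.0, 400.0), (400.0, 400.0)]
    times = arrival_times((120.0, 250.0), sensors, t_fired=3.7)
    print(locate(sensors, times))
```

With perfect timings the search recovers the simulated source. A real deployment would also have to cope with timing noise and echoes, which this sketch ignores.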

We are now reaching a turning point where AI is becoming a potential source of risk at a scale that should be incorporated into all phases of risk and emergency management: mitigation or prevention, preparedness, response and recovery.

Much of the national-level policy focus on AI has been from national security and global competition perspectives: the national security and economic risks of falling behind in AI technology.

As Dana Delger, an attorney for the Innocence Project, told the Democrat & Chronicle regarding the placement of acoustic gunshot detection in Rochester, “At the end of the day this is a machine that basically can tell you that there was a loud sound and then a human has to tell you whether it was gunfire or not.”

In at least two criminal trials, People v. Johnson in California and Commonwealth v. Denison in Massachusetts, prosecutors sought to introduce as evidence audio of voices recorded by an acoustic gunshot detection system. In Johnson, the court allowed this. In Denison, the court did not, ruling that a recording of “oral communication” is a prohibited “interception” under the Massachusetts Wiretap Act.

AI development is progressing much faster than government and corporate policies in understanding, foreseeing and managing the risks. The current global conditions, combined with market competition for AI technologies, make it difficult to think of an opportunity for governments to pause and develop risk governance mechanisms.

The U.S. National Security Commission on Artificial Intelligence highlighted national security risks associated with AI. These stemmed not from public threats posed by the technology itself, but from losing out to other countries, including China, in the global competition for AI development.
