Magnifying Glasses with light (Kit) - magnivision magnifying glass

Use Microlens Arrays to transform a round collimated beam into a rectangular homogeneous spot.See the Application tab for details.

We launched the AI Hardware Center in 2019 to fill this void, with the mission to improve AI hardware efficiency by 2.5 times each year. By 2029, our goal is train and run AI models one thousand times faster than we could three years ago.

There’s just one problem. We’re running out of computing power. AI models are growing exponentially, but the hardware to train these behemoths and run them on servers in the cloud or on edge devices like smartphones and sensors hasn’t advanced as quickly. That’s why the IBM Research AI Hardware Center decided to create a specialized computer chip for AI. We’re calling it an Artificial Intelligence Unit, or AIU.

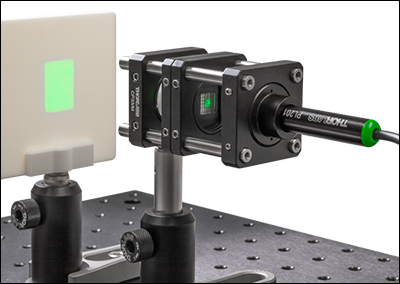

The application photo to the right shows one possible fly's eye homogenizer configuration mounted in a 30 mm cage system. The light source is a PL201 compact laser module fixed in an AD11F SM1-threaded adapter held in a CP33(/M) cage plate. Alternatively, other light sources can be used such as a fiber-coupled LED connected to a collimator or mounted LED with a collimation adapter. The light passes through the first of two MLAs held in CP6T cage plates. These cage plates are thin enough to hold the mounted MLAs and secure them with a retaining ring while allowing the user to place the MLAs as close as possible to each other to maximize the rectangular output size. The lensed side of the first MLA should face the source. It is key to accurately align the rotation of the MLAs relative to each other; there will be artifacts on screen otherwise. The light then passes through an LB1471 N-BK7 bi-convex lens to produce a rectangular 9 mm x 12 mm area on an EDU-VS2(/M) screen, as seen in the photo to the right. A similar setup can be produced using our Fly's Eye Homogenizer devices as seen here.

These PMMA Microlens Arrays (MLAs) are available mounted or unmounted. MLAs are excellent for homogenizing and shaping light, yielding a flat top profile output or square spot patterns. PMMA has excellent UV resistance, filtering out UV light less than 300 nm. The microlenses have a plano-convex shape and are arranged in a 10.0 mm by 9.8 mm rectangular grid with a lens pitch of 1.0 mm by 1.4 mm. These can be used in fly's eye homogenizer configurations, where a round beam is transformed into a rectangular spot (see the Application tab for details). Alternatively, Thorlabs offers a line of Fly's Eye Homogenizer devices using these MLAs with 40 mm or 95 mm working distances.

Please note that the output rectangle is only sharply defined at a specific distance from the focusing lens. The actual size of the rectangle can be adjusted by changing the distance between the two MLAs. Decreasing the distance will increase the size of the rectangle and increasing the distance will make the rectangle smaller. Smaller rectangle sizes can also be produced by using lenses with shorter focal lengths. A simplified diagram of the setup is shown on the bottom right.

For the mounted versions, the MLA is glued into a Ø1", 4.0 mm thick mount that is externally SM1-threaded (1.035"-40). The aperture of the lens window is 9.0 mm by 9.0 mm. The plate has slots that are compatible with a SPW602, SPW606, SPW801, or SPW909 spanner wrench. Their unmounted counterparts are most easily held using one of our cylindrical lens mounts, which are specifically designed to hold square or rectangular optics.

With a technique pioneered by IBM called approximate computing, we can drop from 32-bit floating point arithmetic to bit-formats holding a quarter as much information. This simplified format dramatically cuts the amount of number crunching needed to train and run an AI model, without sacrificing accuracy.

A car with a gasoline engine might be able to run on diesel but if maximizing speed and efficiency is the objective, you need the right fuel. The same principle applies to AI. For the last decade, we’ve run deep learning models on CPUs and GPUs — graphics processors designed to render images for video games — when what we really needed was an all-purpose chip optimized for the types of matrix and vector multiplication operations used for deep learning. At IBM, we’ve spent the last five years figuring out how to design a chip customized for the statistics of modern AI.

Leaner bit formats also reduce another drag on speed: moving data to and from memory. Our AIU uses a range of smaller bit formats, including both floating point and integer representations, to make running an AI model far less memory intensive. We leverage key IBM breakthroughs from the last five years to find the best tradeoff between speed and accuracy.

A decade ago, modern AI was born. A team of academic researchers showed that with millions of photos and days of brute force computation, a deep learning model could be trained to identify objects and animals in entirely new images. Today, deep learning has evolved from classifying pictures of cats and dogs to translating languages, detecting tumors in medical scans, and performing thousands of other time-saving tasks.

In this example, the setup was mounted using Ø1/2" posts and post holders that were secured to an MB3045(/M) breadboard using clamping forks.

One, embrace lower precision. An AI chip doesn’t have to be as ultra-precise as a CPU. We’re not calculating trajectories for landing a spacecraft on the moon or estimating the number of hairs on a cat. We’re making predictions and decisions that don’t require anything close to that granular resolution.

For microlens arrays that are ideal for use in custom-built Shack-Hartmann wavefront sensors, please visit the Fused Silica Microlens Arrays page.

It’s our first complete system-on-chip designed to run and train deep learning models faster and more efficiently than a general-purpose CPU.

The IBM AIU is what’s known as an application-specific integrated circuit (ASIC). It’s designed for deep learning and can be programmed to run any type of deep-learning task, whether that’s processing spoken language or words and images on a screen. Our complete system-on-chip features 32 processing cores and contains 23 billion transistors — roughly the same number packed into our z16 chip. The IBM AIU is also designed to be as easy-to-use as a graphics card. It can be plugged into any computer or server with a PCIe slot.

It’s our first complete system-on-chip designed to run and train deep learning models faster and more efficiently than a general-purpose CPU.

There are multiple ways to homogenize the output of a light source. Microlens arrays (MLAs) used in a fly's eye homogenizer configuration transform a round collimated beam with a Gaussian profile into a rectangular homogeneous area. The size of the rectangle is defined by the size and pitch of the microlenses.

AR Coating OptionsWhile our uncoated PMMA microlens arrays offer excellent performance, improved transmission can be gained by choosing a lens with an antireflective coating on both sides to reduce the surface reflections. Our broadband antireflection (BBAR) coatings provide <0.8% average reflectance over one of the following wavelength ranges: 420 - 700 nm or 650 - 1050 nm.

The workhorse of traditional computing — standard chips known as CPUs, or central processing units — were designed before the revolution in deep learning, a form of machine learning that makes predictions based on statistical patterns in big data sets. The flexibility and high precision of CPUs are well suited for general-purpose software applications. But those winning qualities put them at a disadvantage when it comes to training and running deep learning models which require massively parallel AI operations.

Two, an AI chip should be laid out to streamline AI workflows. Because most AI calculations involve matrix and vector multiplication, our chip architecture features a simpler layout than a multi-purpose CPU. The IBM AIU has also been designed to send data directly from one compute engine to the next, creating enormous energy savings.

This is not a chip we designed entirely from scratch. Rather, it’s the scaled version of an already proven AI accelerator built into our Telum chip. The 32 cores in the IBM AIU closely resemble the AI core embedded in the Telum chip that powers our latest IBM’s z16 system. (Telum uses transistors that are 7 nm in size while our AIU will feature faster, even smaller 5 nm transistors.)

Deploying AI to classify cats and dogs in photos is a fun academic exercise. But it won’t solve the pressing problems we face today. For AI to tackle the complexities of the real world — things like predicting the next Hurricane Ian, or whether we’re heading into a recession — we need enterprise-quality, industrial-scale hardware. Our AIU takes us one step closer. We hope to soon share news about its release.

The AI cores built into Telum, and now, our first specialized AI chip, are the product of the AI Hardware Center’s aggressive roadmap to grow IBM’s AI-computing firepower. Because of the time and expense involved in training and running deep-learning models, we’ve barely scratched the surface of what AI can deliver, especially for enterprise.

Please note that the Zemax file provided below is not the common sequential (SC) raytracing file which we typically provide, but the non-sequential (NSC) raytracing file. Be aware that the standard version of Zemax OpticStudio® does not support the NSC mode.

Ms.Cici

Ms.Cici

8618319014500

8618319014500