How Do Diffraction Grating Glasses Work?

Remote patient monitoring systems rely on cameras with a well-chosen FOV to provide accurate and complete observation of patients. These cameras capture all relevant movements and conditions so that healthcare providers can monitor patients' health remotely. This enables timely medical intervention and improves patient safety.

In this article, let’s explore the importance of FOV in embedded vision, the factors that determine FOV, and the applications that rely on it the most.

Using two or more cameras enables a higher overall resolution, avoids the heavy distortion of a single ultra-wide lens, and offers a wider FOV. To achieve high image quality in multi-camera systems, lenses with an FOV of around 60-70 degrees are usually chosen. However, this choice depends on many factors, and there is no one-size-fits-all approach. It is recommended that you seek help from an imaging expert like e-con Systems when picking the right field of view and lens for your application. Please feel free to write to us at camerasolutions@e-consystems.com if you need a helping hand.
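As a rough planning aid, the number of side-by-side cameras needed for a given total FOV can be estimated from the per-camera HFOV and the overlap reserved for stitching. The sketch below is a hypothetical illustration, not e-con Systems' design method. It assumes a fan of identical cameras (not a closed 360° ring) in which each adjacent pair shares `overlap_deg` of view. The function names and the 10° overlap are assumptions.

```python
import math


def cameras_needed(target_fov_deg: float, per_camera_hfov_deg: float,
                   overlap_deg: float) -> int:
    """Estimate how many identical cameras, arranged in a fan, cover target_fov_deg.

    Hypothetical model: n cameras cover n * hfov - (n - 1) * overlap degrees,
    because each adjacent pair shares `overlap_deg` for stitching.
    Not valid for a closed 360-degree ring, where the last camera also
    overlaps the first.
    """
    if not 0 <= overlap_deg < per_camera_hfov_deg:
        raise ValueError("overlap must be >= 0 and smaller than the per-camera HFOV")
    if target_fov_deg <= per_camera_hfov_deg:
        return 1
    # Every camera after the first adds (hfov - overlap) degrees of new coverage.
    new_per_camera = per_camera_hfov_deg - overlap_deg
    return math.ceil((target_fov_deg - overlap_deg) / new_per_camera)


def total_coverage_deg(n: int, per_camera_hfov_deg: float, overlap_deg: float) -> float:
    """Combined FOV of n cameras under the same fan-with-overlap model."""
    return n * per_camera_hfov_deg - (n - 1) * overlap_deg


if __name__ == "__main__":
    # Lenses in the 60-70 degree range mentioned above, with an assumed 10-degree overlap.
    for hfov in (60.0, 70.0):
        n = cameras_needed(180.0, hfov, 10.0)
        print(f"{hfov:.0f}° lenses: {n} cameras -> {total_coverage_deg(n, hfov, 10.0):.0f}° total")
```

In practice, the overlap a stitching algorithm needs depends on the algorithm itself and on lens distortion. Treat the result as a starting point only.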

You also have an option to capture the same field of view with sensors of different size. This can be done using a lens with the appropriate focal length. As a result, the same FOV can be achieved using a small sensor with a short focal length lens and a large sensor with a long focal length lens.
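To illustrate the trade-off, the sketch below computes the focal length that gives a chosen FOV across a given sensor dimension. It assumes the pinhole (rectilinear) relation for a lens focused at infinity: FOV = 2 × arctan(d / (2f)), which rearranges to f = d / (2 × tan(FOV / 2)). The sensor widths are hypothetical values chosen for illustration.

```python
import math


def focal_length_for_fov(sensor_dim_mm: float, fov_deg: float) -> float:
    """Focal length (mm) that spans fov_deg across a sensor dimension.

    Pinhole (rectilinear) model, lens focused at infinity:
        fov = 2 * atan(sensor_dim / (2 * f))  =>  f = sensor_dim / (2 * tan(fov / 2))
    """
    if not 0 < fov_deg < 180:
        raise ValueError("a rectilinear lens needs 0 < fov_deg < 180")
    return sensor_dim_mm / (2 * math.tan(math.radians(fov_deg) / 2))


if __name__ == "__main__":
    # Hypothetical sensor widths: a small sensor and one twice as wide.
    for width_mm in (4.8, 9.6):
        f_mm = focal_length_for_fov(width_mm, 60.0)
        print(f"{width_mm} mm-wide sensor needs f ≈ {f_mm:.2f} mm for a 60° HFOV")
```

Doubling the sensor dimension doubles the required focal length for the same FOV, consistent with the small-sensor/short-lens and large-sensor/long-lens pairing described above.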

Prabu is the Chief Technology Officer and Head of Camera Products at e-con Systems, and has more than 15 years of experience in the embedded vision space. He brings deep knowledge of USB cameras, embedded vision cameras, vision algorithms, and FPGAs. He has built 50+ camera solutions spanning domains such as medical, industrial, agriculture, retail, biometrics, and more. He also has expertise in device driver and BSP development. Currently, Prabu’s focus is on building smart camera solutions that power new-age AI-based applications.

e-con Systems has led from the front when it comes to innovation in embedded vision. One of our key strengths is platform-side expertise, especially on the NVIDIA Jetson series. Leveraging this, e-con has designed many multi-camera solutions that offer an FOV of up to 360 degrees.

Many modern-day embedded vision systems utilize multiple types of lenses and sensors with different feature sets and varying costs. The design of the camera systems integrated with these components plays a huge role in achieving the required image quality.

Please write to us at camerasolutions@e-consystems.com if you need expert help integrating cameras with different FOVs into your applications.

Automated sports broadcasting systems use cameras with a wide FOV to cover entire fields or courts. Hence, all the movements within the sporting area are captured, which means viewers can experience the game in an immersive way. A wide FOV is also important for capturing aspects such as player movements and strategic plays, which enhances the overall broadcasting quality. Furthermore, wider FOV cameras streamline production by potentially replacing multiple conventional cameras, reducing setup complexity and personnel needs.

The previous section covered the definition of FOV and its relationship with several other lens parameters. Let us now discuss how to choose the right FOV for an embedded vision application.

Industrial automation systems for functions like quality inspection rely on cameras with accurately set FOVs to scrutinize products on assembly lines. These cameras capture the image data needed for thorough product inspection, so defects can be detected immediately. As a result, manufacturers can optimize their inspection processes, reduce errors, and maintain consistent product standards.

Let’s look at Autonomous Mobile Robots (AMR) as a reference. These autonomous systems perform obstacle detection and obstacle avoidance (ODOA) to seamlessly navigate their environment. Many of them require FOVs in excess of 180 degrees. This ultra-wide FOV is achieved by using multi-camera systems.

For example, imagine that the camera and the object are fixed at a working distance of 30 cm. In this case, the horizontal and vertical extent of the scene (the linear HFOV and VFOV) can be measured manually with a scale, in mm, as shown below:

FOV also depends on the distance between the camera and the object. For a given angular FOV, the area of the scene that is covered shrinks as the object moves closer to the camera. In addition, focusing on a nearby object moves the lens farther from the sensor, which slightly narrows the effective angular FOV. Thus, the lens-to-sensor distance has to be designed based on the working distance.
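The effect of working distance can be explored with the thin-lens equation, 1/f = 1/u + 1/v. The sketch below computes the lens-to-sensor distance v needed to focus at a working distance u, the resulting angular FOV, and the width of scene covered. It is a simplified model under stated assumptions. It treats the working distance as the object distance from an ideal thin lens, whereas real lenses usually quote working distance from the front of the lens or housing. The 4 mm focal length and 4.8 mm sensor width are hypothetical.

```python
import math


def image_distance_mm(f_mm: float, working_distance_mm: float) -> float:
    """Lens-to-sensor distance v from the thin-lens equation 1/f = 1/u + 1/v."""
    if working_distance_mm <= f_mm:
        raise ValueError("object must be farther than one focal length for a real image")
    return 1.0 / (1.0 / f_mm - 1.0 / working_distance_mm)


def effective_afov_deg(sensor_dim_mm: float, f_mm: float, working_distance_mm: float) -> float:
    """Angular FOV when the lens is refocused for the given working distance."""
    v = image_distance_mm(f_mm, working_distance_mm)
    return math.degrees(2 * math.atan(sensor_dim_mm / (2 * v)))


def scene_coverage_mm(sensor_dim_mm: float, f_mm: float, working_distance_mm: float) -> float:
    """Width of the scene covered at the working distance (sensor size / magnification)."""
    v = image_distance_mm(f_mm, working_distance_mm)
    return sensor_dim_mm * working_distance_mm / v


if __name__ == "__main__":
    # Hypothetical 4.8 mm-wide sensor behind a 4 mm lens.
    for wd_mm in (100.0, 300.0, 1e6):
        print(f"WD {wd_mm:>9.0f} mm: v = {image_distance_mm(4.0, wd_mm):.3f} mm, "
              f"AFOV = {effective_afov_deg(4.8, 4.0, wd_mm):.1f}°, "
              f"coverage = {scene_coverage_mm(4.8, 4.0, wd_mm):.0f} mm")
```

The very large working distance approximates focus at infinity, where v approaches the focal length.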

Meanwhile, if you are interested in learning more about multi-camera integration, you can check out the article “What are the crucial factors to consider while integrating multi-camera solutions?”

Each embedded vision application has different sensor size requirements to get the best output. For a given focal length, a small sensor will have a narrow field of view, while a large sensor can provide a wide field of view.

One of the most popular of these solutions is the e-CAM130A_CUXVR_3H02R1 180° FOV camera, a synchronized multi-camera solution that can be interfaced directly with the NVIDIA® Jetson AGX Xavier™ development kit. It comprises three 13 MP camera modules based on the 1/3.2″ AR1335 CMOS image sensor from onsemi®. These 4K camera modules are positioned inwards to create a 180° FOV, as shown in the image below:

Our custom optical filters are meticulously designed to meet the specific needs of your high-performance applications. Using cutting-edge deposition techniques and advanced in-house control software, we ensure every filter delivers exceptional precision and reliability. Whether you’re aiming for enhanced clarity, durability, or optimized performance, our filters are engineered to elevate your project. Get in touch to see how we can assist with your project.

Field of view (FOV) is the maximum area of a scene that a camera can capture. It is usually expressed as an angle, in degrees. There are three ways to measure the field of view of a camera: horizontally, vertically, or diagonally, as shown below.

Smart traffic systems utilize cameras with a wide FOV to seamlessly monitor and manage road traffic. Such cameras capture comprehensive views of large areas for performing real-time traffic flow analysis and incident detection. Covering wide road sections also means they can promptly identify traffic violations, accidents, and congestion. Additionally, the broader view empowers advanced features like vehicle counting, object classification and lane discipline monitoring. This provides crucial real-time data that helps optimize traffic flow and improve overall safety.

Broad fields of view equip Autonomous Mobile Robots to navigate complex environments and avoid obstacles. A wide FOV also lets robots detect and analyze their surroundings in real time, boosting their ability to move safely in dynamic environments such as warehouses, manufacturing floors, and public spaces. A large vertical FOV ensures that obstacles at any height are detected, allowing robots to navigate under hanging obstacles such as shelves or overhead conveyors. For warehouse AMRs, two cameras placed on opposing corners, each providing a 270° FOV, can offer complete situational awareness. This setup enables the AMR to navigate freely in all directions (left, right, forward, and backward) and to turn efficiently without blind spots or objects approaching unseen from behind.

Now let us discuss FOV calculation. In many applications, the required working distance and the desired linear field of view (the size of the scene that must appear in the frame) are known quantities. This information can be used to directly determine the required angular field of view (AFOV) as shown below.
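Assuming the standard pinhole relationship commonly used for this calculation, AFOV = 2 × arctan(FOV / (2 × WD)). Here FOV is the linear extent of the scene and WD is the working distance, both in the same units. The minimal sketch below applies it to a hypothetical 400 mm × 300 mm scene at a 300 mm working distance. The same function gives VFOV or DFOV when the height or the diagonal is passed in place of the width.

```python
import math


def angular_fov_deg(linear_fov_mm: float, working_distance_mm: float) -> float:
    """AFOV = 2 * atan(FOV / (2 * WD)), returned in degrees."""
    return math.degrees(2 * math.atan(linear_fov_mm / (2 * working_distance_mm)))


if __name__ == "__main__":
    # Hypothetical scene: 400 mm x 300 mm must fit in the frame at WD = 300 mm.
    width_mm, height_mm, wd_mm = 400.0, 300.0, 300.0
    diagonal_mm = math.hypot(width_mm, height_mm)  # 500 mm
    print(f"HFOV ≈ {angular_fov_deg(width_mm, wd_mm):.1f}°")
    print(f"VFOV ≈ {angular_fov_deg(height_mm, wd_mm):.1f}°")
    print(f"DFOV ≈ {angular_fov_deg(diagonal_mm, wd_mm):.1f}°")
```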

FOV is one of the most critical parameters considered while integrating a camera into an embedded vision system. Whether it’s an intelligent transportation system, autonomous mobile robot, remote patient monitoring system, or automated sports broadcasting device, FOV plays a major role in ensuring the necessary details of the scene are captured. The FOV of the lens can be chosen to be wide or narrow based on the requirements of the end application.

Similarly, to calculate VFOV and DFOV, substitute the corresponding height and diagonal dimensions of the object, respectively, for the width (horizontal FOV) in the above formula.

Generally, when a single FOV value is quoted for a sensor, it refers to the diagonal measurement, which is called DFOV or Diagonal FOV. The Horizontal FOV (HFOV) and Vertical FOV (VFOV) vary with the aspect ratio of the image sensor used.
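For a lens with low distortion, HFOV and VFOV can be estimated from a quoted DFOV and the sensor's aspect ratio. The tangent of each half-angle scales with the corresponding sensor dimension. The sketch below assumes a rectilinear lens centred on the sensor. Fisheye lenses do not follow this tangent relation, so their datasheet HFOV/VFOV values should be used instead. The 90° DFOV is a hypothetical input.

```python
import math


def split_dfov(dfov_deg: float, aspect_w: float, aspect_h: float) -> tuple[float, float]:
    """Return (HFOV, VFOV) in degrees for a given diagonal FOV and aspect ratio.

    Assumes a rectilinear (low-distortion) lens centred on the sensor, so the
    tangent of each half-angle scales with the matching sensor dimension.
    """
    diag = math.hypot(aspect_w, aspect_h)
    tan_half_d = math.tan(math.radians(dfov_deg) / 2)
    hfov = math.degrees(2 * math.atan(tan_half_d * aspect_w / diag))
    vfov = math.degrees(2 * math.atan(tan_half_d * aspect_h / diag))
    return hfov, vfov


if __name__ == "__main__":
    # The same hypothetical 90° DFOV on 4:3 and 16:9 sensors.
    for w, h in ((4, 3), (16, 9)):
        hfov, vfov = split_dfov(90.0, w, h)
        print(f"{w}:{h} -> HFOV ≈ {hfov:.1f}°, VFOV ≈ {vfov:.1f}°")
```

For the same DFOV, the wider 16:9 format gives a larger HFOV and a smaller VFOV than 4:3.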

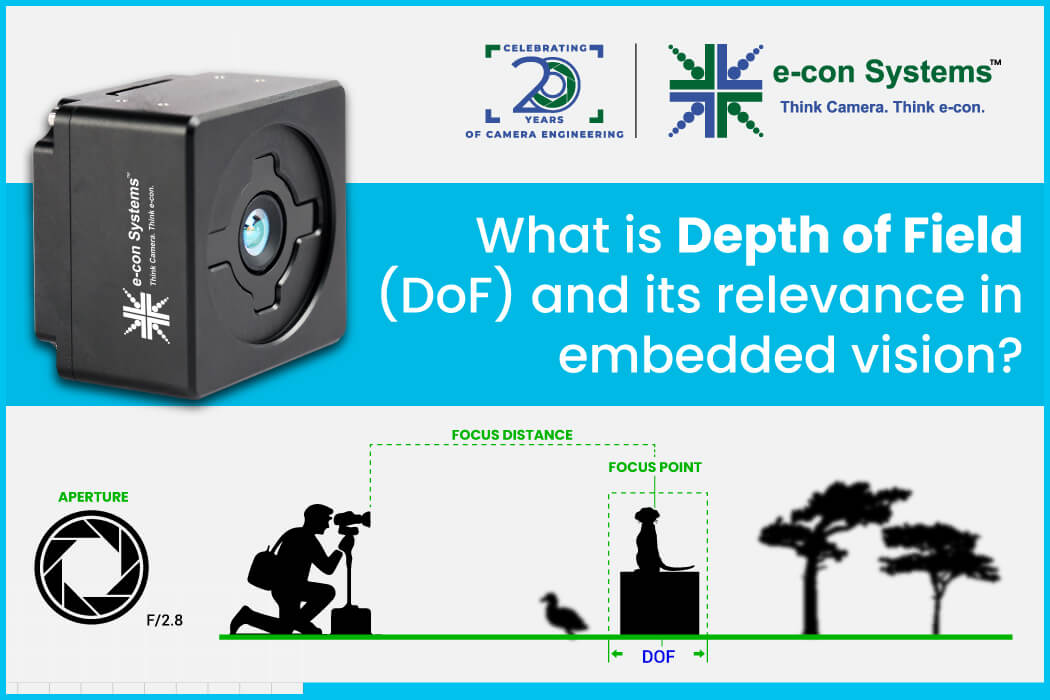

Most embedded camera applications require the FOV to be wide enough to cover a large viewing area. For instance, a fish-eye lens is characterized by a wider FOV and a larger depth of field (DOF), and is hence suitable for surveillance applications. On the other hand, for a zoom/telescopic application, you might require a normal/narrow FOV.

Conversely, if you know the FOV and the working distance, then you can calculate the dimension of the object using the below formula.
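Assuming the same pinhole relationship, the object dimension follows from the angular FOV as FOV = 2 × WD × tan(AFOV / 2). Here WD is the working distance and the result is in the same units as WD. The minimal sketch below uses a hypothetical 70° HFOV lens at the 30 cm working distance from the earlier example.

```python
import math


def object_dimension_mm(afov_deg: float, working_distance_mm: float) -> float:
    """Linear FOV (scene extent) = 2 * WD * tan(AFOV / 2), same units as WD."""
    return 2 * working_distance_mm * math.tan(math.radians(afov_deg) / 2)


if __name__ == "__main__":
    # Hypothetical 70° HFOV lens at a 300 mm working distance.
    print(f"Scene width ≈ {object_dimension_mm(70.0, 300.0):.0f} mm")
```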

e-con Systems has 20+ years of experience designing, developing, and manufacturing OEM cameras. That’s why we understand the nuances involved in selecting lenses with the right FOV for your application. We can expertly guide you through the entire process of selecting the lens rather than merely acting as a camera supplier.

However, selecting and evaluating sensors and lenses can be challenging. The right combination can help build a highly optimized embedded vision system that meets all your standards. When selecting a lens for an embedded camera, numerous factors, such as field of view (FOV), must be considered.

What helps e-con stand out with this solution is our proprietary 180-degree stitching algorithm, which processes images from multiple cameras to create a single 180-degree image. To learn more about this solution, please visit the product page.

Since the 1970s, Delta Optical Thin Film has worked closely with its customers to improve their competitiveness by designing and manufacturing optical filter solutions fitted exactly to the customers’ specifications and price expectations. We offer Homogeneous Bandpass, Edge and Blocking Filters, Dichroic Beamsplitters, and Linear Variable Filters. With our unique and advanced design software and our proprietary deposition control software, we ensure a fast and efficient design process and rapid prototype manufacturing. We meet our customers on specifications, timeline, and budget.

Our Linear Variable Filters offer precise, tunable solutions where optical properties shift linearly across the filter, ideal for applications like fluorescence spectroscopy and hyperspectral imaging. Customizable and versatile, they provide exceptional performance across a broad spectral range.

“I speak a handful of languages to varying extents – but proficiency in the ‘language of physics’ is even more required in my communication with customers and suppliers around the world.”

Our Multi-Bandpass Filters transmit multiple wavelength bands while blocking others, making them ideal for applications like fluorescence spectroscopy and microscopy. Fully customizable, they offer either homogeneous performance or variable filtering across one dimension. With decades of experience, Delta Optical Thin Film specializes in tailored solutions for wavelengths ranging from 300 nm to 1200 nm.

Focal length is the defining property of a lens. Simply put, it is the distance between the lens and the sensor plane when the lens is focused on an object at infinity. It is expressed in mm and depends on the curvature and material of the lens. The shorter the focal length, the wider the AFOV, and vice versa. Please have a look at the image below to understand this better.
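The inverse relationship between focal length and AFOV can be made concrete with the pinhole relation for a lens focused at infinity: AFOV = 2 × arctan(d / (2f)), where d is the sensor dimension. The sketch below assumes a hypothetical 4.8 mm-wide sensor and three example focal lengths.

```python
import math


def afov_deg(sensor_dim_mm: float, f_mm: float) -> float:
    """AFOV = 2 * atan(sensor_dim / (2 * f)) for a lens focused at infinity."""
    return math.degrees(2 * math.atan(sensor_dim_mm / (2 * f_mm)))


if __name__ == "__main__":
    # Hypothetical 4.8 mm-wide sensor with three candidate focal lengths.
    for f_mm in (2.8, 4.0, 8.0):
        print(f"f = {f_mm} mm -> HFOV ≈ {afov_deg(4.8, f_mm):.1f}°")
```

Real wide-angle and fisheye lenses deviate from this model because of distortion. Use manufacturer FOV figures for those lenses.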

Ms.Cici

8618319014500