

Meanwhile, if you are interested in learning more about multi-camera integration, check out the article "What are the crucial factors to consider while integrating multi-camera solutions?"

ToF sensors are also becoming essential for other applications beyond photography, such as gesture recognition and augmented reality (AR). For example, phones like the Samsung Galaxy S20 Ultra and Huawei P30 Pro use these sensors to map out 3D depth in real-time, improving both photography and interactive experiences.


In some cases, stereo cameras are integrated with ToF sensors, combining the strengths of both devices to capture depth information quickly and with high precision. The combination of a ToF sensor's real-time distance measurements with a stereo camera's detailed depth perception makes it ideal for applications like autonomous vehicles and consumer electronics, where both speed and accuracy are vital.

However, it is important to understand that many factors determine the right FOV, and there is no one-size-fits-all approach. It is recommended that you seek help from an imaging expert like e-con Systems as you pick the right field of view and lens for your application.

Multispectral cameras are specialized devices that can record multiple wavelengths of the light spectrum, including ultraviolet and infrared, in a single shot. Multispectral imaging provides valuable detailed data that traditional cameras cannot capture. Similar to hyperspectral cameras, which capture even narrower, continuous bands of light, multispectral cameras are used in fields like agriculture, geology, environmental monitoring, and medical imaging. For example, in healthcare, multispectral cameras can help visualize different tissues by capturing images across multiple wavelengths.

What helps e-con stand out with this solution is our proprietary 180-degree stitching algorithm, which processes images from multiple cameras to create a single 180-degree image. To learn more about this solution, please visit the product page.


Most embedded camera applications require the FOV to be wide enough to cover a large viewing area. For instance, a fish-eye lens is characterized by a wider FOV and larger depth of field (DOF) and is hence suitable for surveillance applications. On the other hand, for a zoom/telescopic application, you might require a normal/narrow FOV.

Similarly, to calculate VFOV and DFOV, substitute the object's height and diagonal dimensions, respectively, for its width (the horizontal FOV) in the above formula.


Please write to us at camerasolutions@e-consystems.com if you need expert help integrating cameras with different FOVs into your applications.

Field of view (FOV) is the maximum area of a scene that a camera can focus on/capture. It is represented in degrees. There are three ways to measure the field of view of a camera – horizontally, vertically, or diagonally as shown below.

Virtual try-ons give customers a 3D view of how an outfit would fit, and some systems can even mimic how the fabric would move for a more realistic experience. Computer vision and RGB-D cameras make it possible for customers to skip the fitting room and try on clothes instantly. It saves time, makes comparing styles and sizes easier, and improves the overall shopping experience.

Industrial automation systems for functions like quality inspection rely on cameras with accurate FOV settings to scrutinize products on assembly lines. They capture imaging data required for thorough product inspection by detecting defects instantly. Moreover, manufacturers can optimize their inspection processes, reduce errors, and maintain consistent product standards.

Smart traffic systems utilize cameras with a wide FOV to seamlessly monitor and manage road traffic. Such cameras capture comprehensive views of large areas for performing real-time traffic flow analysis and incident detection. Covering wide road sections also means they can promptly identify traffic violations, accidents, and congestion. Additionally, the broader view empowers advanced features like vehicle counting, object classification and lane discipline monitoring. This provides crucial real-time data that helps optimize traffic flow and improve overall safety.

Thermal cameras, as the name suggests, are widely used for heat detection in various applications, including manufacturing industries and automobile factories. These cameras measure temperature and can be used to alert users when they detect critical levels of heat that are either too high or too low. By detecting infrared radiation, which is invisible to the human eye, they provide precise temperature readings. Often referred to as infrared cameras, their uses also extend beyond industrial settings. For instance, thermal cameras are also used in agriculture to monitor livestock health, in building inspections to identify heat leaks, and in firefighting to locate hotspots.

e-con Systems has 20+ years of experience designing, developing, and manufacturing OEM cameras. That’s why we understand the nuances involved in selecting lenses with the right FOV for your application. We can expertly guide you through the entire process of selecting the lens rather than merely acting as a camera supplier.

RGB (red, green, and blue) cameras are commonly used in computer vision applications. They capture images in the visible spectrum within wavelengths from 400 to 700 nanometers (nm). Since these images are similar to how humans see, RGB cameras are used for many tasks like object detection, instance segmentation, and pose estimation in situations where human-like vision is enough.

Now let us discuss FOV calculation. In many applications, the required distance from an object and the desired field of view (which determine the size of the object seen in the frame) are known quantities. This information can be used to directly determine the required angular field of view (AFOV) as shown below.
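The relationship between working distance, the linear field of view, and the AFOV can be sketched in a few lines of Python. This is a minimal sketch assuming simple pinhole (rectilinear) geometry; the function name and the sample numbers are illustrative and do not come from the original article.

```python
import math


def angular_fov_deg(field_size_mm: float, working_distance_mm: float) -> float:
    """Return the angular FOV (degrees) that spans field_size_mm at the given distance."""
    if field_size_mm <= 0 or working_distance_mm <= 0:
        raise ValueError("field size and working distance must be positive")
    # Half the field size and the working distance form a right triangle
    # whose angle at the camera is half of the angular FOV.
    half_angle = math.atan(field_size_mm / (2 * working_distance_mm))
    return math.degrees(2 * half_angle)


# Hypothetical example: a 400 mm wide scene viewed from 300 mm away.
print(f"HFOV = {angular_fov_deg(400, 300):.1f} degrees")
```

Passing the scene height or diagonal instead of the width gives the vertical or diagonal angle in the same way.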

In certain situations, combining high-speed and slow-motion cameras can help with the detailed analysis of fast and slow-moving objects within the same event. Let's say we are analyzing a game of golf. High-speed cameras can measure the speed of a golf ball, while slow-motion cameras can analyze a golfer's swing movements and body control.

However, selecting and evaluating sensors and lenses can be challenging. The right combination can help build a highly optimized embedded vision system that meets all your standards. Of course, when selecting a lens for an embedded camera, numerous factors, such as Field Of View (FOV), must be considered.

From RGB cameras to LiDAR sensors, explore how different types of computer vision cameras are used in various applications across different industries.

Explore more about AI by checking out our GitHub repository. Join our community to connect with other like-minded Vision AI enthusiasts. Learn more about computer vision applications in healthcare and manufacturing on our solution pages.


LiDAR acts like the car's eyes, sending out laser pulses and measuring how long they take to bounce back. These insights help the car calculate distances and identify objects like cars, pedestrians, and traffic signals, providing a 360-degree view for safer driving.

e-con Systems has led from the front when it comes to innovation in embedded vision. One of our key strengths is platform-side expertise, especially on the NVIDIA Jetson series. Leveraging this, e-con has designed many multi-camera solutions that offer an FOV of up to 360 degrees.

Let’s look at Autonomous Mobile Robots (AMR) as a reference. These autonomous systems perform obstacle detection and obstacle avoidance (ODOA) to seamlessly navigate their environment. Many of them require FOVs in excess of 180 degrees. This ultra-wide FOV is achieved by using multi-camera systems.

An interesting application where RGB-D cameras can come in handy is virtual try-ons, a concept that is becoming more popular in retail stores. To put it simply, smart screens integrated with RGB-D cameras and sensors can gather details like a shopper's height, body shape, and shoulder width. Using this information, the system can digitally overlay clothing onto a live image of the customer. Computer vision tasks, such as instance segmentation and pose estimation, can process the visual data to accurately detect the customer's body and align the clothing to fit their proportions in real-time.

Machines and electrical systems at manufacturing plants or oil and gas rigs often operate continuously and generate heat as a byproduct. Over time, excessive heat buildup can occur in components such as motors, bearings, or electrical circuits, potentially leading to equipment failure or safety hazards.

Thermal cameras can help operators monitor these systems by detecting abnormal temperature spikes early. For example, an overheating motor can be scheduled for maintenance to prevent a costly breakdown. By integrating thermal imaging into regular inspections, industries can implement predictive maintenance, reduce downtime, extend equipment life, and ensure a safer work environment. Overall, plant performance can be improved, and the risk of unexpected failures can be minimized.



When it comes to computer vision, cameras serve as the eyes that allow machines to see and interpret the world similarly to how humans do. Choosing the right type of camera is key to the success of different computer vision applications. From standard RGB cameras to advanced LiDAR systems, each type offers unique features suited to specific tasks. By understanding the variety of camera technologies and their uses, developers and researchers can better optimize computer vision models to tackle complex real-world challenges.

Remote patient monitoring systems rely on cameras with an optimal FOV to provide accurate and complete observations of patients. These cameras ensure that all relevant movements and conditions are captured so that healthcare providers can monitor the health of patients. It leads to timely medical interventions and improved remote patient safety.

Many modern-day embedded vision systems utilize multiple types of lenses and sensors with different feature sets and varying costs. The design of the camera systems integrated with these components plays a huge role in achieving the required image quality.

LiDAR (Light Detection and Ranging) cameras use laser pulses to create 3D maps and detect objects from a distance. They work in darkness and across a wide range of temperatures, though heavy rain or fog can degrade their performance. LiDAR is commonly used in applications like self-driving cars for navigation and obstacle detection.

In this article, let’s explore the importance of FOV in embedded vision, the factors that determine FOV, as well as which applications rely on this the most.


One of the most popular among those solutions is the e-CAM130A_CUXVR_3H02R1 180° FOV camera, a synchronized multi-camera solution that can be directly interfaced with the NVIDIA® Jetson AGX Xavier™ development kit. This camera solution comprises three 13 MP camera modules based on the 1/3.2″ AR1335 CMOS image sensor from onsemi®. These 4K camera modules are positioned inwards to create a 180° FOV as shown in the image below:

Generally for a sensor, FOV refers to the diagonal measurement – which is called DFOV or Diagonal FOV. Horizontal FOV (HFOV) and Vertical FOV (VFOV) will vary based on the aspect ratio of the image sensor used.
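To illustrate how the aspect ratio splits a diagonal FOV into horizontal and vertical angles, here is a minimal Python sketch. It assumes an ideal rectilinear (distortion-free) lens, which will not hold for fish-eye optics; the function name and the 4:3 example are illustrative and do not come from the original article.

```python
import math


def split_dfov(dfov_deg: float, aspect_w: float, aspect_h: float) -> tuple[float, float]:
    """Return (HFOV, VFOV) in degrees for a diagonal FOV and a sensor aspect ratio."""
    if not 0 < dfov_deg < 180:
        raise ValueError("this rectilinear model only covers 0 < DFOV < 180 degrees")
    # On the image plane, the half-extents scale with the tangent of the half-angle.
    half_diag = math.tan(math.radians(dfov_deg) / 2)
    diag = math.hypot(aspect_w, aspect_h)
    hfov = 2 * math.atan(half_diag * aspect_w / diag)
    vfov = 2 * math.atan(half_diag * aspect_h / diag)
    return math.degrees(hfov), math.degrees(vfov)


# Hypothetical example: a 90 degree DFOV lens on a 4:3 sensor.
hfov, vfov = split_dfov(90, 4, 3)
print(f"HFOV = {hfov:.1f}, VFOV = {vfov:.1f}")
```

The same DFOV on a 16:9 sensor would give a larger HFOV and a smaller VFOV, which is why the two values depend on the sensor's aspect ratio.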


For example, imagine that the camera and the object are fixed at a working distance of 30 cm. In this case, the HFOV and VFOV are measured manually using a scale (in mm) as shown below:

Prabu is the Chief Technology Officer and Head of Camera Products at e-con Systems, and comes with a rich experience of more than 15 years in the embedded vision space. He brings to the table a deep knowledge in USB cameras, embedded vision cameras, vision algorithms and FPGAs. He has built 50+ camera solutions spanning various domains such as medical, industrial, agriculture, retail, biometrics, and more. He also comes with expertise in device driver development and BSP development. Currently, Prabu’s focus is to build smart camera solutions that power new age AI based applications.

Focal length is the defining property of a lens. Simply put, it is the distance between the lens and the plane of the sensor, and is determined when the lens focuses the object at infinity. It is represented in mm. Focal length depends on the curvature of the lens and its material. The shorter the focal length, the wider the AFOV and vice versa. Please have a look at the below image to understand this better:
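In code, the inverse relationship between focal length and AFOV can be sketched as follows. This is a simplified thin-lens approximation for a lens focused at infinity; the function name and the sensor and focal-length values are hypothetical, chosen only to show the trend.

```python
import math


def afov_from_focal_length(sensor_dim_mm: float, focal_length_mm: float) -> float:
    """Approximate angular FOV (degrees) along one sensor dimension."""
    if sensor_dim_mm <= 0 or focal_length_mm <= 0:
        raise ValueError("sensor dimension and focal length must be positive")
    # Half the sensor dimension over the focal length gives the tangent of the half-angle.
    return math.degrees(2 * math.atan(sensor_dim_mm / (2 * focal_length_mm)))


# Hypothetical example: the same 6 mm wide sensor behind three different lenses.
for focal_length in (2.0, 4.0, 8.0):
    print(focal_length, round(afov_from_focal_length(6.0, focal_length), 1))
```

Each step up in focal length produces a narrower angle, matching the statement above.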

Stereo cameras use multiple image sensors to capture depth by comparing images from different angles. They estimate depth more accurately than single-sensor systems. Meanwhile, Time-of-Flight (ToF) cameras or sensors measure distances by emitting infrared light that bounces off objects and returns to the sensor. The time it takes for the light to return is calculated by the camera's processor to determine the distance.
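The ToF distance calculation described above reduces to halving the round-trip path travelled by the light. The sketch below is an idealized illustration; real sensors often measure phase shift rather than a raw time, and the function name and sample timing are assumptions, not taken from any specific device.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # speed of light in vacuum


def tof_distance_m(round_trip_time_s: float) -> float:
    """Distance to the object, given the time for light to travel there and back."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The light covers the distance twice, so divide the total path by two.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2


# Hypothetical example: a pulse that returns after 20 nanoseconds.
print(f"{tof_distance_m(20e-9):.3f} m")
```

A 20 ns round trip corresponds to roughly 3 m, which shows why ToF sensors need very fine timing resolution.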

FOV is one of the most critical parameters considered while integrating a camera into an embedded vision system. Whether it’s an intelligent transportation system, autonomous mobile robot, remote patient monitoring system, or automated sports broadcasting device, FOV plays a major role in ensuring the necessary details of the scene are captured. The FOV of the lens can be set as wide or narrow based on the end application requirements.

Many technical factors, such as data, algorithms, and computing power, contribute to the success of an artificial intelligence (AI) application. Specifically in computer vision, a subfield of AI that focuses on enabling machines to analyze and understand images and videos, one of the most critical factors is the input or data source: the camera. The quality and type of cameras used for a computer vision application directly affect the performance of AI models.

Automated sports broadcasting systems use cameras with a wide FOV to cover entire fields or courts. Hence, all the movements within the sporting area are captured, which means viewers can experience the game in an immersive way. A wide FOV is also important for capturing aspects such as player movements and strategic plays, which enhances the overall broadcasting quality. Furthermore, wider FOV cameras streamline production by potentially replacing multiple conventional cameras, reducing setup complexity and personnel needs.

Broad perspectives generally equip Autonomous Mobile Robots to navigate complex environments and avoid obstacles. A wide FOV also ensures that robots can detect and analyze their surroundings in real time, boosting their ability to move safely and operate in dynamic environments, such as warehouses, manufacturing floors, and public spaces. A large vertical FOV ensures that obstacles at any height are detected, allowing robots to navigate under hanging obstacles such as shelves or overhead conveyors. For warehouse AMRs, two cameras placed on opposing corners, each providing a 270° FOV, can offer complete situational awareness. This setup enables the AMR to navigate freely in all directions (left, right, forward, and backward) while also turning efficiently without worrying about blind spots or objects coming from behind.

On the other hand, slow-motion cameras can be used to capture footage at high frame rates and then reduce the playback speed. This enables viewers to observe details often missed in real-time. These cameras are used to evaluate the performance of firearms and explosive materials. The ability to slow down and analyze intricate movements is ideal for this type of application.

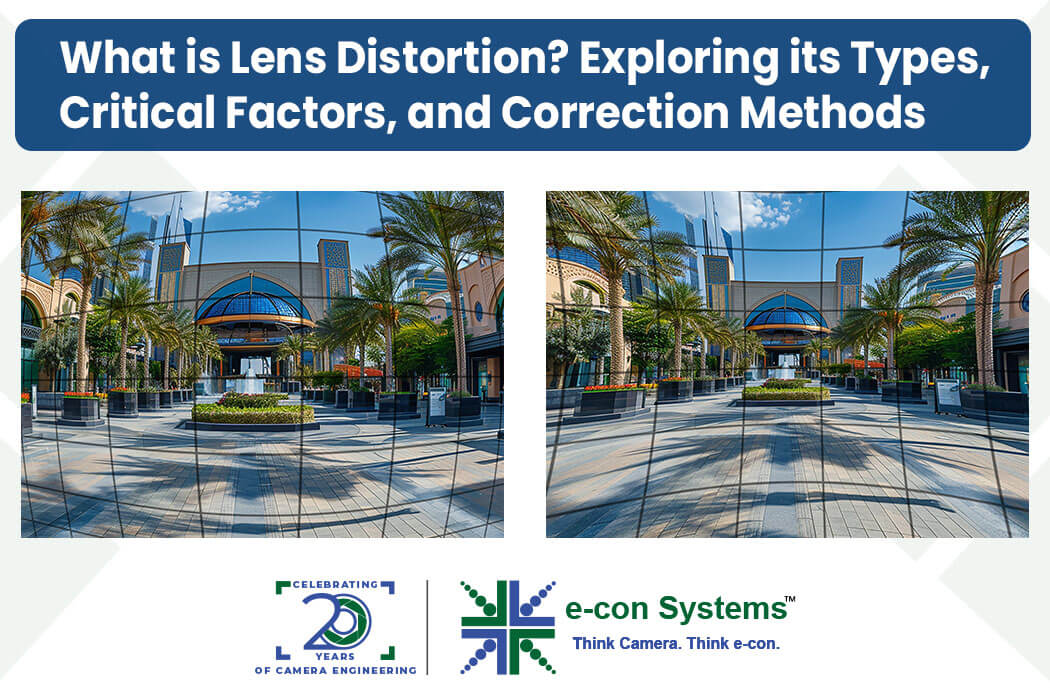

Having two or more cameras enables a higher resolution, prevents lens distortion, and offers a wider FOV. To achieve high imaging quality in multi-camera systems, a lens with an FOV of around 60-70 degrees is usually chosen. But it is important to note that this choice is determined by a multitude of factors, and there is no one-size-fits-all approach. It is recommended to seek help from an imaging expert like e-con Systems as you go about picking the right field of view and lens for your application. Please feel free to write to us at camerasolutions@e-consystems.com if you need a helping hand.
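As a rough illustration of how several narrower lenses add up to a wide combined FOV, consider the sketch below. It assumes the cameras are fanned out evenly in one plane with the same angular overlap between neighbours, and it ignores parallax and stitching losses; the function name and the overlap value are hypothetical.

```python
def combined_fov_deg(num_cameras: int, per_camera_fov_deg: float, overlap_deg: float) -> float:
    """Approximate combined horizontal FOV of evenly fanned-out cameras."""
    if num_cameras < 1:
        raise ValueError("at least one camera is required")
    if not 0 <= overlap_deg < per_camera_fov_deg:
        raise ValueError("overlap must be non-negative and smaller than the per-camera FOV")
    # Each neighbouring pair shares overlap_deg, which must not be counted twice.
    total = num_cameras * per_camera_fov_deg - (num_cameras - 1) * overlap_deg
    return min(total, 360.0)  # a full circle is the upper bound


# Hypothetical example: three 70 degree cameras with 15 degrees of overlap per pair.
print(combined_fov_deg(3, 70, 15))
```

Some overlap between neighbouring views is usually needed so that the images can be aligned and stitched.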

FOV also depends on the distance between the camera and the object. Applications in which objects are closer to the camera generally call for a wider FOV, because the shorter focal lengths that give a wide FOV also focus properly at shorter working distances. The lens-to-sensor distance therefore has to be designed based on the working distance.


Similarly, drones equipped with multispectral imaging are making significant strides in agriculture. They can identify unhealthy plants or those affected by insects and pests at an early stage. These cameras can analyze the near-infrared spectrum, and healthy plants generally reflect more near-infrared light than their unhealthy counterparts. By adopting such AI techniques in agriculture, farmers can implement countermeasures early to boost yield and reduce crop loss.
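One common way to quantify the near-infrared difference is the Normalized Difference Vegetation Index (NDVI). The article does not name a specific index, so treat the sketch below as an illustrative assumption; the reflectance values are made up for the example.

```python
def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index from NIR and red reflectance."""
    total = nir + red
    if total == 0:
        return 0.0  # avoid division by zero for pixels with no signal
    return (nir - red) / total


# Hypothetical reflectance values (0 to 1) for two patches of a field.
healthy = ndvi(nir=0.5, red=0.1)
stressed = ndvi(nir=0.3, red=0.2)
print(f"healthy = {healthy:.2f}, stressed = {stressed:.2f}")
```

Higher values indicate stronger near-infrared reflectance relative to red, which is the pattern associated with healthy vegetation.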


Choosing the right camera is crucial because different computer vision tasks require different types of visual data. For instance, high-resolution cameras are used for applications like facial recognition, where fine facial details must be captured with precision. In contrast, lower-resolution cameras can be used for tasks like queue monitoring that depend on broader patterns more than intricate details.

Nowadays, there are many types of cameras available, each designed to meet specific needs. Understanding their differences can help you optimize your computer vision innovations. Let's explore the various types of computer vision cameras and their applications across different industries.

Each embedded vision application has different sensor size requirements to get the best output. For a given lens focal length, a small sensor will have a narrow field of view, while a large sensor can provide a wide field of view.

It's possible that you may have used a Time-of-Flight (ToF) camera without even realizing it. In fact, popular smartphones from brands like Samsung, Huawei, and Realme often include ToF sensors to enhance depth-sensing capabilities. The precise depth information these cameras provide is used to create the popular bokeh effect, where the background is blurred while the subject remains in sharp focus.

You can also capture the same field of view with sensors of different sizes by using a lens with the appropriate focal length. For example, the same FOV can be achieved using either a small sensor with a short focal length lens or a large sensor with a long focal length lens.
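The trade-off between sensor size and focal length can be sketched by solving for the focal length that yields a target FOV. This uses the same simplified thin-lens approximation (lens focused at infinity); the function name and the sensor widths are hypothetical.

```python
import math


def focal_length_for_fov(sensor_dim_mm: float, afov_deg: float) -> float:
    """Focal length (mm) that gives afov_deg across sensor_dim_mm."""
    if sensor_dim_mm <= 0:
        raise ValueError("sensor dimension must be positive")
    if not 0 < afov_deg < 180:
        raise ValueError("AFOV must be between 0 and 180 degrees")
    return sensor_dim_mm / (2 * math.tan(math.radians(afov_deg) / 2))


# Hypothetical example: the same 60 degree FOV on a small and a large sensor.
for sensor_width in (4.0, 12.0):
    print(sensor_width, round(focal_length_for_fov(sensor_width, 60), 2))
```

The required focal length scales linearly with the sensor dimension, so tripling the sensor width triples the focal length needed for the same FOV.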

These tasks usually involve identifying and detecting objects from a two-dimensional (2D) perspective, where capturing depth isn't necessary for accurate results. However, when an application requires depth information, like in 3D object detection or robotics, RGB-D (Red, Green, Blue, and Depth) cameras are used. These cameras combine RGB data with depth sensors to capture 3D details and provide real-time depth measurements.

High-speed cameras are designed to capture more than 10,000 frames per second (FPS) so that they can process rapid movements with exceptional accuracy. For example, when products move quickly on a production line, high-speed cameras can be used to monitor them and detect any abnormalities.

Conversely, if you know the FOV and the working distance, then you can calculate the dimension of the object using the below formula.
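A minimal Python sketch of this inverse calculation follows, again assuming simple pinhole geometry. The function name and the sample values are illustrative and do not come from the original article.

```python
import math


def field_size_mm(afov_deg: float, working_distance_mm: float) -> float:
    """Linear size of the scene covered at a given working distance."""
    if not 0 < afov_deg < 180:
        raise ValueError("AFOV must be between 0 and 180 degrees")
    if working_distance_mm <= 0:
        raise ValueError("working distance must be positive")
    # The covered size is twice the opposite side of the half-angle triangle.
    return 2 * working_distance_mm * math.tan(math.radians(afov_deg) / 2)


# Hypothetical example: a 60 degree HFOV lens at a 300 mm working distance.
print(f"{field_size_mm(60, 300):.1f} mm")
```

Because the result scales linearly with the working distance, doubling the distance doubles the width of the scene that fits in the frame.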

From the previous section, we understood the definition of FOV and its relation with several other lens parameters. Let us now discuss how to choose the right FOV for an embedded vision application.
