
No licenses or payments are required for a user to stream or distribute audio in AAC format.[51] This alone might have made AAC a more attractive format than its predecessor MP3 for distributing audio, particularly for streaming (such as Internet radio), depending on the use case.

AAC encoders can switch dynamically between a single MDCT block of 1024 points and 8 blocks of 128 points (or between 960 points and 120 points, respectively).
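The block-switching decision described above can be illustrated with a minimal sketch. The energy-ratio heuristic, the function name `choose_block_lengths` and the threshold value are assumptions made for illustration only; real encoders typically base this decision on a psychoacoustic model, and nothing here is taken from the AAC standard itself.

```python
LONG_BLOCK = 1024   # one long block per frame
SHORT_BLOCK = 128   # eight short blocks per frame


def choose_block_lengths(frame, ratio_threshold=8.0):
    """Return [1024] for a steady frame or [128] * 8 for a frame with a transient.

    Hypothetical heuristic: split the frame into eight 128-sample segments and
    switch to short blocks when the energy jumps sharply between neighbours.
    """
    if len(frame) != LONG_BLOCK:
        raise ValueError("frame must contain exactly 1024 samples")

    # Energy of each 128-sample segment.
    energies = [
        sum(sample * sample for sample in frame[start:start + SHORT_BLOCK])
        for start in range(0, LONG_BLOCK, SHORT_BLOCK)
    ]

    floor = 1e-12  # avoids treating silence followed by silence as a jump
    for previous, current in zip(energies, energies[1:]):
        if current > ratio_threshold * max(previous, floor):
            return [SHORT_BLOCK] * 8  # sudden attack: favour time resolution
    return [LONG_BLOCK]  # stationary signal: favour frequency resolution


if __name__ == "__main__":
    steady = [0.5] * 1024
    attack = [0.0] * 512 + [1.0] * 512
    print(choose_block_lengths(steady))
    print(choose_block_lengths(attack))
```

Short blocks trade frequency resolution for time resolution, which is why a sketch like this selects them only when the signal changes abruptly within the frame.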

On May 29, 2007, Apple began selling songs and music videos from participating record labels at a higher bitrate (256 kbit/s cVBR) and free of DRM, a format dubbed "iTunes Plus". These files mostly adhere to the AAC standard and are playable on many non-Apple products, but they do include custom iTunes information such as album artwork and a purchase receipt, so that the customer can be identified if the file is leaked onto peer-to-peer networks. It is possible, however, to remove these custom tags to restore interoperability with players that conform strictly to the AAC specification. As of January 6, 2009, nearly all music on the US iTunes Store became DRM-free, with the remainder becoming DRM-free by the end of March 2009.[65]


Applying error protection enables error correction up to a certain extent. Error correcting codes are usually applied equally to the whole payload. However, since different parts of an AAC payload show different sensitivity to transmission errors, this would not be a very efficient approach.

AAC takes a modular approach to encoding. Depending on the complexity of the bitstream to be encoded, the desired performance and the acceptable output, implementers may create profiles to define which of a specific set of tools they want to use for a particular application.


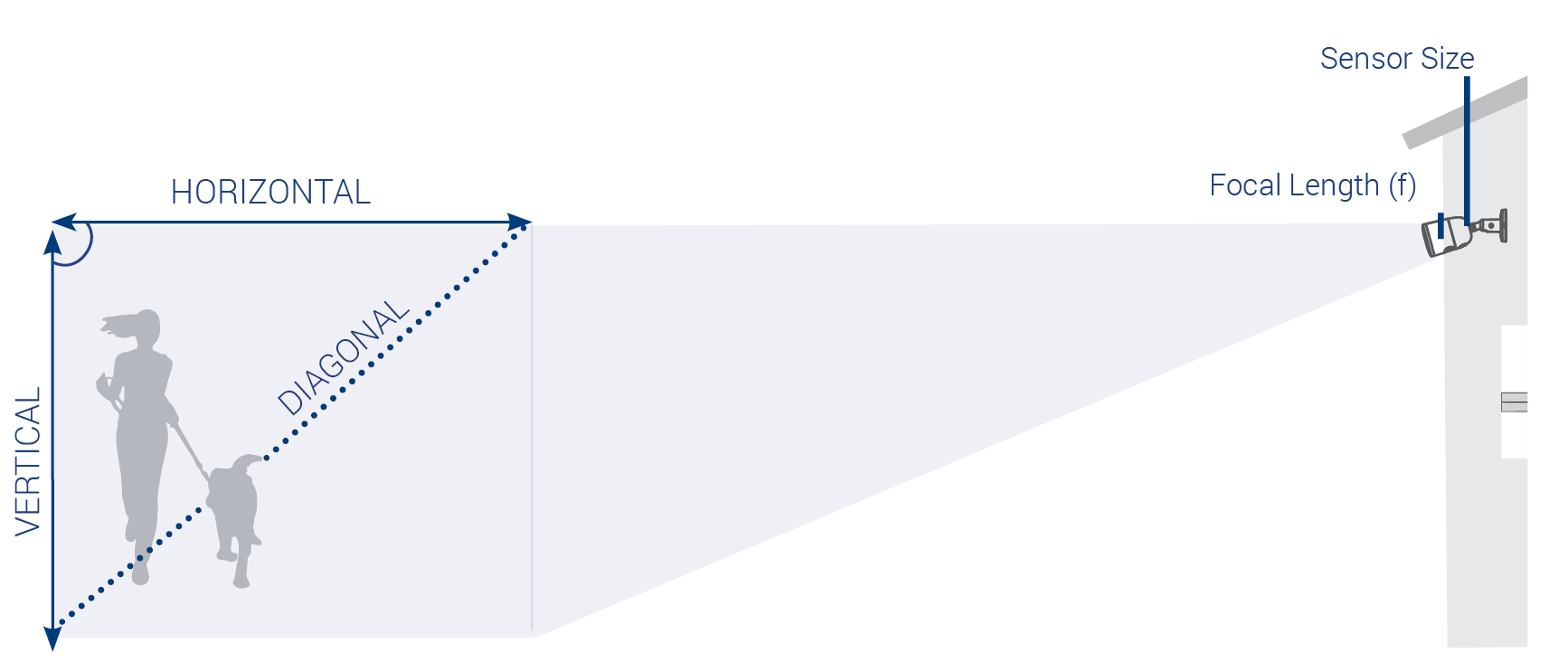



AAC is the default or standard audio format for iPhone, iPod, iPad, Nintendo DSi, Nintendo 3DS, Apple Music,[a] iTunes, DivX Plus Web Player, PlayStation 4 and various Nokia Series 40 phones. It is supported on a wide range of devices and software such as PlayStation Vita, Wii, digital audio players like Sony Walkman or SanDisk Clip, Android and BlackBerry devices, various in-dash car audio systems,[when?][vague] and is also one of the audio formats used on the Spotify web player.[8]

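AAC is a wideband audio coding algorithm that exploits two primary coding strategies to dramatically reduce the amount of data needed to represent high-quality digital audio: discarding signal components that are perceptually irrelevant, and eliminating redundancies in the coded audio signal.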

Advanced Audio Coding (AAC) is an audio coding standard for lossy digital audio compression. It was designed to be the successor of the MP3 format and generally achieves higher sound quality than MP3 at the same bit rate.[4]

One of many improvements in MPEG-4 Audio is an Object Type called Long Term Prediction (LTP), which is an improvement of the Main profile using a forward predictor with lower computational complexity.[29]


The MPEG-4 Audio Version 2 (ISO/IEC 14496-3:1999/Amd 1:2000) defined new audio object types: the low delay AAC (AAC-LD) object type, bit-sliced arithmetic coding (BSAC) object type, parametric audio coding using harmonic and individual line plus noise and error resilient (ER) versions of object types.[30][31][32] It also defined four new audio profiles: High Quality Audio Profile, Low Delay Audio Profile, Natural Audio Profile and Mobile Audio Internetworking Profile.[33]


The discrete cosine transform (DCT), a type of transform coding for lossy compression, was proposed by Nasir Ahmed in 1972, and developed by Ahmed with T. Natarajan and K. R. Rao in 1973, publishing their results in 1974.[9][10][11] This led to the development of the modified discrete cosine transform (MDCT), proposed by J. P. Princen, A. W. Johnson and A. B. Bradley in 1987,[12] following earlier work by Princen and Bradley in 1986.[13] The MP3 audio coding standard introduced in 1992 used a hybrid coding algorithm that is part MDCT and part FFT.[14] AAC uses a purely MDCT algorithm, giving it higher compression efficiency than MP3.[4] Development further advanced when Lars Liljeryd introduced a method that radically shrank the amount of information needed to store the digitized form of a song or speech.[15]

iTunes offers a "Variable Bit Rate" encoding option that encodes AAC tracks using the Constrained Variable Bitrate scheme (a less strict variant of ABR encoding); the underlying QuickTime API, however, does offer a true VBR encoding profile.[66]

The AAC patent holders include Bell Labs, Dolby, ETRI, Fraunhofer, JVC Kenwood, LG Electronics, Microsoft, NEC, NTT (and its subsidiary NTT Docomo), Panasonic, Philips, and Sony Corporation.[16][1] Based on the list of patents from the SEC terms, the last baseline AAC patent expires in 2028, and the last patent for all AAC extensions mentioned expires in 2031.[57]

Almost all current computer media players include built-in decoders for AAC, or can utilize a library to decode it. On Microsoft Windows, DirectShow can be used this way with the corresponding filters to enable AAC playback in any DirectShow based player. Mac OS X supports AAC via the QuickTime libraries.


The Rockbox open source firmware (available for multiple portable players) also offers support for AAC to varying degrees, depending on the model of player and the AAC profile.


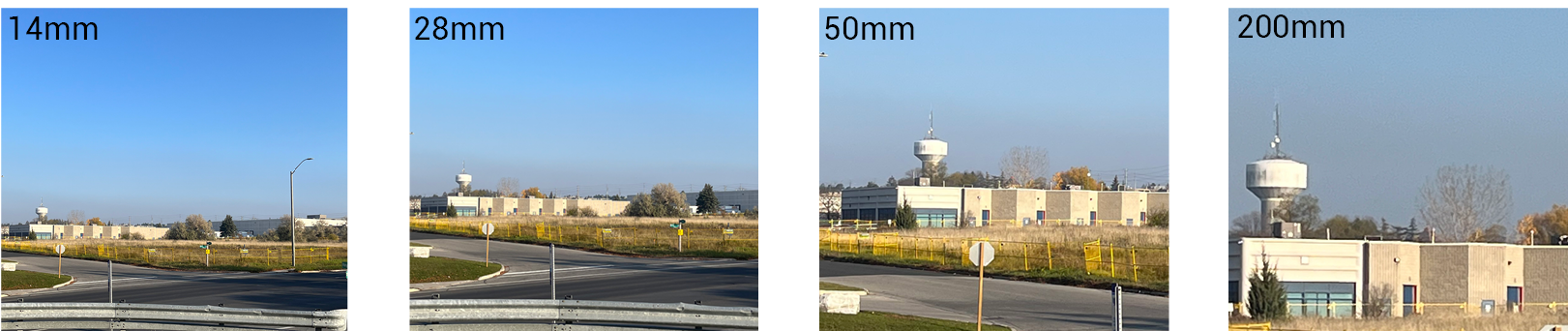

Field of View (FOV) is the maximum observable area that is seen at any given moment through an optical device such as a camera lens. The coverage of the area can be measured using the horizontal and vertical distances to find the diagonal of the area in degrees. Mathematically, the FOV is calculated using the horizontal dimension of the sensor (h) and the Focal Length (F). You can find the camera’s Field of View details in the Specifications sheet.

The Camera Lens, Sensor and Focal Length

Focal length (F) is the distance between the center of a lens and its sensor. The size of the lens is the aperture size. These factors affect field of view. A shorter focal length captures more of the scene and gives a larger field of view. A longer focal length magnifies the scene and decreases the field of view. The higher the focal length value, the lower the FOV.

Types of Camera Lenses

Fixed: a fixed camera focal length provides an Angular FOV which is permanently set and cannot be adjusted by the user.

Varifocal: the camera focal length can be adjusted by the user, generally by hand with screws or dials. At Lorex we have motorized varifocal cameras that allow you to digitally zoom using your phone or NVR without losing details. These lenses provide flexibility and customization for your camera image needs.

The Importance of Field of View

A wide angle lens or smaller lens produces a greater field of view and captures more objects in a scene, enhancing your ability to cover larger and wider areas such as foyers, parking lots or warehouses. A narrow angle lens or larger lens produces a smaller field of view, capturing a limited area but with improved image detail. These are designed to monitor a specific object, such as cash registers, entrances/exits, hallways or objects of value.

How to Calculate the FOV

Calculating the FOV requires the sensor size and the focal length of the lens:

h = Sensor Size
F = Focal Length of the Lens

FOV is represented by this equation:

$$\mathrm{FOV} = 2\tan^{-1}\!\left(\frac{h}{2F}\right)$$

Example: h = 4.7 mm, F = 6 mm

$$\mathrm{FOV} = 2\tan^{-1}\!\left(\frac{4.7}{2 \times 6}\right) = 2\tan^{-1}\!\left(\frac{4.7}{12}\right) = 2\tan^{-1}(0.39) = 2(21.4^\circ) = 42.8^\circ$$
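The field-of-view calculation can be checked numerically with a short sketch. The function name `field_of_view_degrees` and its parameter names are hypothetical, chosen for this example; the formula is the standard angular FOV relation, two times the arctangent of h divided by 2F.

```python
import math


def field_of_view_degrees(sensor_size_mm: float, focal_length_mm: float) -> float:
    """Angular field of view in degrees: 2 * atan(h / (2 * F))."""
    if sensor_size_mm <= 0 or focal_length_mm <= 0:
        raise ValueError("sensor size and focal length must be positive")
    return math.degrees(2.0 * math.atan(sensor_size_mm / (2.0 * focal_length_mm)))


if __name__ == "__main__":
    # Worked example from the text: h = 4.7 mm, F = 6 mm.
    print(round(field_of_view_degrees(4.7, 6.0), 1))
```

Both lengths must be in the same unit. Increasing the focal length for the same sensor yields a smaller angle, matching the rule that a higher focal length value gives a lower FOV.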


AAC has been standardized by ISO and IEC as part of the MPEG-2 and MPEG-4 specifications.[5][6] Part of AAC, HE-AAC ("AAC+"), is part of MPEG-4 Audio and is adopted into digital radio standards DAB+ and Digital Radio Mondiale, and mobile television standards DVB-H and ATSC-M/H.

The MPEG-4 Part 3 standard (MPEG-4 Audio) defined various new compression tools (a.k.a. Audio Object Types) and their usage in brand new profiles. AAC is not used in some of the MPEG-4 Audio profiles. The MPEG-2 Part 7 AAC LC profile, AAC Main profile and AAC SSR profile are combined with Perceptual Noise Substitution and defined in the MPEG-4 Audio standard as Audio Object Types (under the name AAC LC, AAC Main and AAC SSR). These are combined with other Object Types in MPEG-4 Audio profiles.[27] Here is a list of some audio profiles defined in the MPEG-4 standard:[35][45]


In 1997, AAC was first introduced as MPEG-2 Part 7, formally known as ISO/IEC 13818-7:1997. This part of MPEG-2 was a new part, since MPEG-2 already included MPEG-2 Part 3, formally known as ISO/IEC 13818-3: MPEG-2 BC (Backwards Compatible).[18][19] Therefore, MPEG-2 Part 7 is also known as MPEG-2 NBC (Non-Backward Compatible), because it is not compatible with the MPEG-1 audio formats (MP1, MP2 and MP3).[18][20][21][22]

MPEG-2 Part 7 defined three profiles: Low-Complexity profile (AAC-LC / LC-AAC), Main profile (AAC Main) and Scalable Sampling Rate profile (AAC-SSR). AAC-LC profile consists of a base format very much like AT&T's Perceptual Audio Coding (PAC) coding format,[23][24][25] with the addition of temporal noise shaping (TNS),[26] the Kaiser window (described below), a nonuniform quantizer, and a reworking of the bitstream format to handle up to 16 stereo channels, 16 mono channels, 16 low-frequency effect (LFE) channels and 16 commentary channels in one bitstream. The Main profile adds a set of recursive predictors that are calculated on each tap of the filterbank. The SSR uses a 4-band PQMF filterbank, with four shorter filterbanks following, in order to allow for scalable sampling rates.


The HE-AAC Profile (AAC LC with SBR) and AAC Profile (AAC LC) were first standardized in ISO/IEC 14496-3:2001/Amd 1:2003.[34] The HE-AAC v2 Profile (AAC LC with SBR and Parametric Stereo) was first specified in ISO/IEC 14496-3:2005/Amd 2:2006.[35][36][37] The Parametric Stereo audio object type used in HE-AAC v2 was first defined in ISO/IEC 14496-3:2001/Amd 2:2004.[38][39][40]

ETSI, the standards governing body for the DVB suite, has supported AAC, HE-AAC and HE-AAC v2 audio coding in DVB applications since at least 2004.[64] DVB broadcasts that use H.264 compression for video normally use HE-AAC for audio.[citation needed]

The MPEG-4 Part 3 standard also contains other ways of compressing sound. These include lossless compression formats, synthetic audio and low bit-rate compression formats generally used for speech.

In May 2006, Nero AG released an AAC encoding tool free of charge, Nero Digital Audio (the AAC codec portion has become Nero AAC Codec),[75] which is capable of encoding LC-AAC, HE-AAC and HE-AAC v2 streams. The tool is a command-line interface tool only. A separate utility is also included to decode to PCM WAV.

The MPEG-4 audio standard does not define a single or small set of highly efficient compression schemes but rather a complex toolbox to perform a wide range of operations from low bit rate speech coding to high-quality audio coding and music synthesis.

The audio coding standards MPEG-4 Low Delay (AAC-LD), Enhanced Low Delay (AAC-ELD), and Enhanced Low Delay v2 (AAC-ELDv2) as defined in ISO/IEC 14496-3:2009 and ISO/IEC 14496-3:2009/Amd 3 are designed to combine the advantages of perceptual audio coding with the low delay necessary for two-way communication. They are closely derived from the MPEG-2 Advanced Audio Coding (AAC) format.[47][48][49] AAC-ELD is recommended by GSMA as super-wideband voice codec in the IMS Profile for High Definition Video Conference (HDVC) Service.[50]

It used to be common for free and open source software implementations such as FFmpeg and FAAC to distribute only in source code form so as not to "otherwise supply" an AAC codec. However, FFmpeg has since become more lenient on patent matters: the "gyan.dev" builds recommended by the official site now contain its AAC codec, with the FFmpeg legal page stating that patent law conformance is the user's responsibility.[54] (See below under Products that support AAC, Software.) The Fedora Project, a community backed by Red Hat, imported the "Third-Party Modified Version of the Fraunhofer FDK AAC Codec Library for Android" into its repositories on September 25, 2018,[55] and enabled FFmpeg's native AAC encoder and decoder for its ffmpeg-free package on January 31, 2023.[56]

However, a patent license is required for all manufacturers or developers of AAC "end-user" codecs.[52] The terms (as disclosed to the SEC) use per-unit pricing. In the case of software, each computer running the software is to be considered a separate "unit".[53]


The native AAC encoder created in FFmpeg's libavcodec, and forked with Libav, was considered experimental and poor. A significant amount of work was done for the 3.0 release of FFmpeg (February 2016) to make its version usable and competitive with the rest of the AAC encoders.[78] Libav has not merged this work and continues to use the older version of the AAC encoder. These encoders are LGPL-licensed open source and can be built for any platform on which the FFmpeg or Libav frameworks can be built.

Adobe Flash Player, since version 9 update 3, can also play back AAC streams.[72][73] Since Flash Player is also a browser plugin, it can play AAC files through a browser as well.

AAC supports inclusion of 48 full-bandwidth (up to 96 kHz) audio channels in one stream plus 16 low frequency effects (LFE, limited to 120 Hz) channels, up to 16 "coupling" or dialog channels, and up to 16 data streams. The quality for stereo is satisfactory to modest requirements at 96 kbit/s in joint stereo mode; however, hi-fi transparency demands data rates of at least 128 kbit/s (VBR). Tests[which?] of MPEG-4 audio have shown that AAC meets the requirements referred to as "transparent" for the ITU at 128 kbit/s for stereo, and 384 kbit/s for 5.1 audio.[7] AAC uses only a modified discrete cosine transform (MDCT) algorithm, giving it higher compression efficiency than MP3, which uses a hybrid coding algorithm that is part MDCT and part FFT.[4]


FAAC and FAAD2 stand for Freeware Advanced Audio Coder and Decoder 2 respectively. FAAC supports audio object types LC, Main and LTP.[76] FAAD2 supports audio object types LC, Main, LTP, SBR and PS.[77] Although FAAD2 is free software, FAAC is not free software.


Although the native AAC encoder only produces AAC-LC, ffmpeg's native decoder is able to deal with a wide range of input formats.


Optional iPod support (playback of unprotected AAC files) for the Xbox 360 is available as a free download from Xbox Live.[74]

While the MP3 format has near-universal hardware and software support, primarily because MP3 was the format of choice during the crucial first few years of widespread music file-sharing/distribution over the internet, AAC is a strong contender due to some unwavering industry support.[42]

For a number of years, many mobile phones from manufacturers such as Nokia, Motorola, Samsung, Sony Ericsson, BenQ-Siemens and Philips have supported AAC playback. The first such phone was the Nokia 5510 released in 2002 which also plays MP3s. However, this phone was a commercial failure[citation needed] and such phones with integrated music players did not gain mainstream popularity until 2005 when the trend of having AAC as well as MP3 support continued. Most new smartphones and music-themed phones support playback of these formats.

Overall, the AAC format allows developers more flexibility to design codecs than MP3 does, and corrects many of the design choices made in the original MPEG-1 audio specification. This increased flexibility often leads to more concurrent encoding strategies and, as a result, to more efficient compression. This is especially true at very low bit rates where the superior stereo coding, pure MDCT, and better transform window sizes leave MP3 unable to compete.

Both FFmpeg and Libav can use the Fraunhofer FDK AAC library via libfdk-aac, and while the FFmpeg native encoder has become stable and good enough for common use, FDK is still considered the highest quality encoder available for use with FFmpeg.[79] Libav also recommends using FDK AAC if it is available.[80] FFmpeg 4.4 and above can also use the Apple audiotoolbox encoder.[79]
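As a rough illustration of choosing between these encoders, the sketch below builds an `ffmpeg` command line for each one. The helper name `build_aac_command`, the file names and the default bitrate are assumptions for this example. The encoder names `aac` (native), `libfdk_aac` (FDK) and `aac_at` (AudioToolbox) are FFmpeg's identifiers, but whether the last two are available depends on how a given FFmpeg build was configured.

```python
import shlex

# FFmpeg encoder identifiers for the three encoders discussed in the text.
ENCODERS = {
    "native": "aac",
    "fdk": "libfdk_aac",      # only in builds configured with libfdk-aac
    "audiotoolbox": "aac_at",  # only in builds with Apple AudioToolbox
}


def build_aac_command(src, dst, encoder="native", bitrate_kbps=128):
    """Return an ffmpeg argument list that encodes src to AAC at dst."""
    if encoder not in ENCODERS:
        raise ValueError(f"unknown encoder: {encoder!r}")
    if bitrate_kbps <= 0:
        raise ValueError("bitrate must be positive")
    return [
        "ffmpeg",
        "-i", src,                   # input file
        "-c:a", ENCODERS[encoder],   # audio codec selection
        "-b:a", f"{bitrate_kbps}k",  # target audio bitrate
        dst,
    ]


if __name__ == "__main__":
    # Print the command instead of running it, so no ffmpeg install is needed.
    print(shlex.join(build_aac_command("input.wav", "output.m4a", encoder="fdk")))
```

The list form can be passed directly to `subprocess.run` once the chosen encoder is confirmed to exist in the local build, for example by checking the output of `ffmpeg -encoders`.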


AAC was developed with the cooperation and contributions of companies including Bell Labs, Fraunhofer IIS, Dolby Laboratories, LG Electronics, NEC, Panasonic, Sony Corporation,[1] ETRI, JVC Kenwood, Philips, Microsoft, and NTT.[16] It was officially declared an international standard by the Moving Picture Experts Group in April 1997. It is specified both as Part 7 of the MPEG-2 standard, and Subpart 4 in Part 3 of the MPEG-4 standard.[17]

A Rod lens is a special type of cylinder lens, and is highly polished on the circumference and ground on both ends.


In December 2007, Brazil started broadcasting a terrestrial DTV standard called International ISDB-Tb, which implements H.264/AVC video with AAC-LC audio on the main program (single or multi) and H.264/AVC video with HE-AACv2 audio in the 1seg mobile sub-program.

The MPEG-2 Part 7 standard (Advanced Audio Coding) was first published in 1997 and offers three default profiles:[2][44] Low Complexity (LC), Main, and Scalable Sampling Rate (SSR).

In 1999, MPEG-2 Part 7 was updated and included in the MPEG-4 family of standards and became known as MPEG-4 Part 3, MPEG-4 Audio or ISO/IEC 14496-3:1999. This update included several improvements. One of these improvements was the addition of Audio Object Types which are used to allow interoperability with a diverse range of other audio formats such as TwinVQ, CELP, HVXC, speech synthesis and MPEG-4 Structured Audio. Another notable addition in this version of the AAC standard is Perceptual Noise Substitution (PNS). In that regard, the AAC profiles (AAC-LC, AAC Main and AAC-SSR profiles) are combined with perceptual noise substitution and are defined in the MPEG-4 audio standard as Audio Object Types.[27] MPEG-4 Audio Object Types are combined in four MPEG-4 Audio profiles: Main (which includes most of the MPEG-4 Audio Object Types), Scalable (AAC LC, AAC LTP, CELP, HVXC, TwinVQ, Wavetable Synthesis, TTSI), Speech (CELP, HVXC, TTSI) and Low Rate Synthesis (Wavetable Synthesis, TTSI).[27][28]

A Fraunhofer-authored open-source encoder/decoder included in Android has been ported to other platforms. FFmpeg's native AAC encoder does not support HE-AAC or HE-AACv2, and the FDK AAC license is not compatible with the GPL 2.0+ license of FFmpeg, so an FFmpeg build with libfdk-aac is not redistributable. The QAAC encoder, which uses Apple's Core Media Audio, is still considered higher quality than FDK.

As of September 2009, Apple has added support for HE-AAC (which is fully part of the MP4 standard) only for radio streams, not file playback, and iTunes still lacks support for true VBR encoding.

In April 2003, Apple brought mainstream attention to AAC by announcing that its iTunes and iPod products would support songs in MPEG-4 AAC format (via a firmware update for older iPods). Customers could download music in a closed-source digital rights management (DRM)-restricted form of 128 kbit/s AAC (see FairPlay) via the iTunes Store or create files without DRM from their own CDs using iTunes. In later years, Apple began offering music videos and movies, which also use AAC for audio encoding.


Some of these players (e.g., foobar2000, Winamp, and VLC) also support the decoding of ADTS (Audio Data Transport Stream) using the SHOUTcast protocol. Plug-ins for Winamp and foobar2000 enable the creation of such streams.


The reference software for MPEG-4 Part 3 is specified in MPEG-4 Part 5 and the conformance bit-streams are specified in MPEG-4 Part 4. MPEG-4 Audio remains backward-compatible with MPEG-2 Part 7.[29]


Advanced Audio Coding is designed to be the successor of the MPEG-1 Audio Layer 3, known as MP3 format, which was specified by ISO/IEC in 11172-3 (MPEG-1 Audio) and 13818-3 (MPEG-2 Audio).

In addition to the MP4, 3GP and other container formats based on ISO base media file format for file storage, AAC audio data was first packaged in a file for the MPEG-2 standard using Audio Data Interchange Format (ADIF),[62] consisting of a single header followed by the raw AAC audio data blocks.[63] However, if the data is to be streamed within an MPEG-2 transport stream, a self-synchronizing format called an Audio Data Transport Stream (ADTS) is used, consisting of a series of frames, each frame having a header followed by the AAC audio data.[62] This file and streaming-based format are defined in MPEG-2 Part 7, but are only considered informative by MPEG-4, so an MPEG-4 decoder does not need to support either format.[62] These containers, as well as a raw AAC stream, may bear the .aac file extension. MPEG-4 Part 3 also defines its own self-synchronizing format called a Low Overhead Audio Stream (LOAS) that encapsulates not only AAC, but any MPEG-4 audio compression scheme such as TwinVQ and ALS. This format is what was defined for use in DVB transport streams when encoders use either SBR or parametric stereo AAC extensions. However, it is restricted to only a single non-multiplexed AAC stream. This format is also referred to as a Low Overhead Audio Transport Multiplex (LATM), which is just an interleaved multiple stream version of a LOAS.[62]
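To make the ADTS framing concrete, here is a minimal sketch of a parser for the fixed part of one ADTS frame header. The bit layout used (12-bit syncword, 2-bit profile, 4-bit sampling frequency index, 3-bit channel configuration, 13-bit frame length) follows the commonly documented ADTS header; the names `AdtsHeader` and `parse_adts_header` are hypothetical, and the sketch does not validate the optional CRC or decode the audio payload.

```python
from dataclasses import dataclass

# Sampling rates addressed by the 4-bit sampling frequency index (0..12).
SAMPLE_RATES = [96000, 88200, 64000, 48000, 44100, 32000,
                24000, 22050, 16000, 12000, 11025, 8000, 7350]


@dataclass
class AdtsHeader:
    object_type: int     # audio object type (2-bit profile field + 1)
    sample_rate: int     # in Hz
    channel_config: int  # channel configuration code
    frame_length: int    # header plus payload, in bytes
    has_crc: bool        # True when a 16-bit CRC follows the 7-byte header


def parse_adts_header(data: bytes) -> AdtsHeader:
    """Parse the first 7 bytes of an ADTS frame."""
    if len(data) < 7:
        raise ValueError("an ADTS header needs at least 7 bytes")
    # The syncword is twelve 1-bits, which lets a decoder resynchronize.
    if data[0] != 0xFF or (data[1] & 0xF0) != 0xF0:
        raise ValueError("ADTS syncword not found")

    protection_absent = data[1] & 0x01
    object_type = ((data[2] >> 6) & 0x03) + 1
    rate_index = (data[2] >> 2) & 0x0F
    if rate_index >= len(SAMPLE_RATES):
        raise ValueError("reserved sampling frequency index")
    channel_config = ((data[2] & 0x01) << 2) | ((data[3] >> 6) & 0x03)
    frame_length = ((data[3] & 0x03) << 11) | (data[4] << 3) | (data[5] >> 5)

    return AdtsHeader(
        object_type=object_type,
        sample_rate=SAMPLE_RATES[rate_index],
        channel_config=channel_config,
        frame_length=frame_length,
        has_crc=(protection_absent == 0),
    )


if __name__ == "__main__":
    # Hand-built header: AAC LC, 44.1 kHz, stereo, 371-byte frame, no CRC.
    print(parse_adts_header(bytes([0xFF, 0xF1, 0x50, 0x80, 0x2E, 0x7F, 0xFC])))
```

Because every frame repeats this header, a reader can step through a stream by jumping `frame_length` bytes from one syncword to the next.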

In December 2003, Japan started broadcasting the terrestrial DTV ISDB-T standard, which implements MPEG-2 video and MPEG-2 AAC audio. In April 2006, Japan started broadcasting the ISDB-T mobile sub-program, called 1seg, which was the world's first implementation of H.264/AVC video with HE-AAC audio in a terrestrial HDTV broadcasting service.

