Is Diffused Light the Same as Soft Light? - define diffused light

So what does a “crop frame” mean? Basically, when lenses were first rated and named, their focal lengths were described in terms of a “full frame” camera. As a result, a 300mm lens gives a true 300mm view on a full frame camera. However, crop frame DSLRs have smaller sensors than the big full frame DSLRs, so they capture only the central portion of the image the lens projects, which has a multiplier effect on the apparent focal length. Without going into too much sciency detail, this means that for most crop frame cameras, you are essentially magnifying your focal length. Thus, on a crop frame body with a 1.6X crop factor, your 300mm frames the scene like a 480mm would on full frame, allowing you to get much more zoomed in on the animal. This is great, right? Mostly it is. However, this also means that the wide angle end of the spectrum is multiplied, too. So a 24mm behaves more like a 38mm, becoming less wide and less ideal for landscape shots and the like.
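The multiplier described above is a single multiplication. Here is a minimal Python sketch, assuming a 1.6X crop factor (the figure used above; Nikon’s DX bodies are closer to 1.5X). The function name is our own illustration, not part of any camera software.

```python
def equivalent_focal_length(focal_mm: float, crop_factor: float = 1.6) -> float:
    """Full-frame-equivalent focal length of a lens used on a crop sensor.

    Only the field of view changes; the lens's real focal length stays the same.
    """
    return focal_mm * crop_factor


for lens_mm in (24, 300):
    # Rounded for display: 24 * 1.6 is 38.4 and 300 * 1.6 is 480
    print(f"{lens_mm}mm on a 1.6X body frames like a {equivalent_focal_length(lens_mm):.0f}mm")
```

The same arithmetic explains why a 10-22mm crop frame lens covers roughly the view of a 16-35mm on full frame.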

Now that we have assembled our data, we can use the rest of the fastai API as usual. vision_learner works perfectly in this case, and the library will infer the proper loss function from the data:

These cameras are probably responsible for putting many professional photographers out of business due to their relative affordability and superb quality in the hands of dedicated hobbyists and amateurs. In other words, these cameras are great.

In the end, point and shoots are a brilliant category of camera for beginners and seasoned professionals alike. What they may lack in high-end tech, they make up for in ease of use, flexibility, and adaptability. Many pro photographers will actually carry a small point and shoot alongside their big fancy equipment, because of how easy it is to whip it out of a pocket and grab that candid shot, or go from shooting a faraway cityscape one second to a close-up of a flower the next.

It seems the segmentation masks have the same base names as the images but with an extra _P, so we can define a label function:
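As a plain-Python sketch of such a label function, assuming the images and masks live in sibling folders (the folder and file names below are hypothetical), the mapping only needs the image’s stem and suffix:

```python
from pathlib import Path


def mask_path_for(image_path: Path, labels_dir: Path) -> Path:
    """Map an image file to its mask: same base name plus '_P', same extension."""
    return labels_dir / f"{image_path.stem}_P{image_path.suffix}"


# Hypothetical paths, for illustration only
img = Path("camvid_tiny/images/0016E5_08155.png")
print(mask_path_for(img, Path("camvid_tiny/labels")).name)  # 0016E5_08155_P.png
```

A function of this shape could then be handed to fastai as the labeling function for the segmentation data.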

The second thing, as we already mentioned, is that they are usually bigger and heavier than other cameras out there. Since they usually have a metal body, this heft is also a benefit, as they are generally very durable.

This tutorial highlights how to quickly build a Learner and fine-tune a pretrained model on most computer vision tasks.

For this task, we will use the Pascal Dataset, which contains images with different kinds of objects and persons. It’s originally a dataset for object detection, meaning the task is not only to detect whether there is an instance of a class in an image, but also to draw a bounding box around it. Here we will just try to predict all the classes in a given image.

We will now look at a task where we want to predict points in a picture. For this, we will use the Biwi Kinect Head Pose Dataset. First things first, let’s begin by downloading the dataset as usual.

As with single-label classification predictions, we get three things. The last one is the prediction of the model for each class (going from 0 to 1). The second to last corresponds to one-hot encoded targets (you get True for all predicted classes, the ones that get a probability > 0.5) and the first is the decoded, readable version.
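The thresholding step can be sketched in plain Python. This is an illustration of the idea, not fastai’s implementation; the class names and probabilities are made up, and 0.5 is the threshold mentioned above.

```python
def decode_multilabel(probs, classes, thresh=0.5):
    """Return (decoded labels, boolean mask, probabilities), in the order described above."""
    mask = [p > thresh for p in probs]            # True where the class is predicted
    labels = [c for c, keep in zip(classes, mask) if keep]
    return labels, mask, probs


classes = ["car", "dog", "horse", "person"]       # hypothetical class list
decoded, mask, probs = decode_multilabel([0.10, 0.03, 0.92, 0.81], classes)
print(decoded)  # ['horse', 'person']
print(mask)     # [False, False, True, True]
```

Because each class is thresholded independently, an image can come back with several labels or with none at all.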

We can also use the data block API to get our data in a DataLoaders. As we said before, feel free to skip this part if you are not comfortable with learning new APIs just yet.

Photography was truly revolutionized by this category of camera, as they are accessible, easy to use, offered in a range of affordable options, and some even come in waterproof or shockproof housings. It truly is amazing what you can get with these workhorses of the camera world.

As with nearly all things, you get what you pay for, and given the reasonable price tags of point and shoot cameras, they come in at an incredibly good value.

Then we can create a Learner, which is a fastai object that combines the data and a model for training, and uses transfer learning to fine tune a pretrained model in just two lines of code:

A traditional CNN won’t work for segmentation; we have to use a special kind of model called a UNet, so we use unet_learner to define our Learner:

These quick summaries of various camera bodies are in no way exhaustive of their merits or shortcomings. As new tech comes out, the lines between the categories will shift and sway and be redrawn many times over. However, for anyone who is new to photography, thinking about upgrading, or simply curious about the options out there, we hope this will be of help.

We used the default learning rate before, but we might want to find the best one possible. For this, we can use the learning rate finder:

The predict method returns three things: the decoded prediction (here False for dog), the index of the predicted class and the tensor of probabilities of all classes in the order of their indexed labels (in this case, the model is quite confident that it is a dog). This method accepts a filename, a PIL image or a tensor directly in this case. We can also have a look at some predictions with the show_results method:

While it’s difficult to call out “downsides” to these cameras, as they offer so much to a huge audience of photographers, when compared to higher end categories of cameras they often lack quality. Most often this is the quality of the resulting image, but it might also be build quality (i.e., made of cheaper materials, less durable, etc.).

A camera lens is an optical component that is mounted onto a camera body to capture and focus light onto the camera's image sensor or film.

Note that we don’t have to specify the fn_col and the label_col because they default to the first and second column respectively.

There are essentially four types or categories of cameras on the market now that consumers have easy access to. Some could argue that film cameras still have a role to play, too, but we will make the argument that these four categories still hold true, with each category coming in a digital or film version. But truthfully, we won’t talk much about film here, as it’s quickly going the way of the dodo.

Multi-label classification differs from before in that each image does not belong to exactly one category. An image could have a person and a horse inside it, for instance, or have none of the categories we study.

The second issue, which is becoming a non-issue, is focusing speed. Again, when compared to a point and shoot, the speed will surprise you because it’s very fast. However, some people complain that when compared to their high end DSLR, the focusing speed is slow. This author has experimented with this on newer models of mirrorless cameras, and their focusing speed was impressive, so this appears to be a resolved issue.

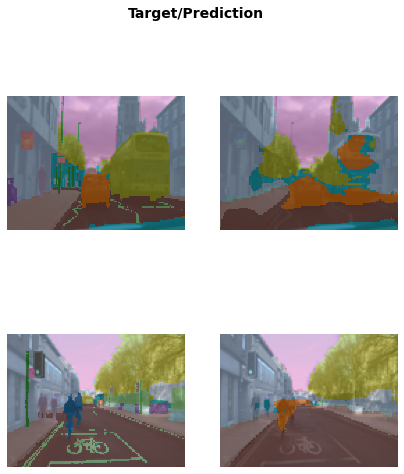

We can also sort the model’s errors on the validation set using the SegmentationInterpretation class and then plot the instances with the k highest contributions to the validation loss.
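The ranking part of this is easy to sketch in plain Python: given one loss per validation item, keep the k largest. The loss values below are invented, and fastai’s interpretation classes compute them from the actual model; this only shows the selection step.

```python
import heapq


def top_losses(losses, k):
    """Return (index, loss) pairs for the k validation items with the highest loss."""
    return heapq.nlargest(k, enumerate(losses), key=lambda pair: pair[1])


val_losses = [0.12, 1.85, 0.40, 2.31, 0.05]   # hypothetical per-item losses
print(top_losses(val_losses, 2))  # [(3, 2.31), (1, 1.85)]
```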

We can also use the data block API to get our data in a DataLoaders. Like we said before, feel free to skip this part if you are not comfortable with learning new APIs just yet.

The only other difference to previous data block examples is that the second block is a PointBlock. This is necessary so that fastai knows that the labels represent coordinates; that way, it knows that when doing data augmentation, it should do the same augmentation to these coordinates as it does to the images.
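To see why the coordinates must be transformed together with the image, here is a small plain-Python sketch using a horizontal flip as the example augmentation. It assumes integer pixel indices (x is the column, y the row); fastai’s own point handling differs in its details.

```python
def hflip_image(pixels):
    """Horizontally flip an image stored as a list of rows."""
    return [list(reversed(row)) for row in pixels]


def hflip_point(x, y, width):
    """Apply the same horizontal flip to a pixel coordinate."""
    return width - 1 - x, y


image = [
    [0, 1, 2, 3],
    [4, 5, 6, 7],
]
x, y = 1, 1                          # labeled point sits on the pixel with value 5
fx, fy = hflip_point(x, y, width=4)
flipped = hflip_image(image)
print(flipped[fy][fx])               # 5: the point still lands on the same content
```

If the image were flipped but the point left at (1, 1), the label would now sit on the pixel with value 6, which is exactly the mismatch PointBlock is there to prevent.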

There are 24 directories numbered from 01 to 24 (they correspond to the different persons photographed) and a corresponding .obj file (we won’t need them here). We’ll take a look inside one of these directories:

Today, many photographers are comparing them to higher end DSLRs for image quality. This is a big deal. When you can get the quality of a DSLR at half the weight and a smaller price tag, why wouldn’t you? Well, many people are making the switch. However, there are still some issues to be aware of.

It plots the graph of the learning rate finder and gives us two suggestions (minimum divided by 10 and steepest gradient). Let’s use 3e-3 here. We will also train for a few more epochs:
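The two suggestions can be sketched in plain Python from a list of (learning rate, loss) measurements. This is an illustration of the two heuristics only, with made-up numbers; fastai’s learning rate finder differs in its details, and for simplicity the sketch reports the learning rate at the start of the steepest segment.

```python
import math


def lr_suggestions(lrs, losses):
    """Two heuristics for picking a learning rate from an LR-finder sweep.

    lr_min:   the learning rate at the lowest loss, divided by 10
    lr_steep: where the loss falls fastest, i.e. the most negative slope of
              loss against log10(lr), reported at the start of that segment
    """
    i_min = min(range(len(losses)), key=losses.__getitem__)
    lr_min = lrs[i_min] / 10
    slopes = [
        (losses[i + 1] - losses[i]) / (math.log10(lrs[i + 1]) - math.log10(lrs[i]))
        for i in range(len(lrs) - 1)
    ]
    i_steep = min(range(len(slopes)), key=slopes.__getitem__)
    return lr_min, lrs[i_steep]


# Hypothetical sweep: loss drops, bottoms out, then explodes at high learning rates
lrs = [1e-5, 1e-4, 1e-3, 1e-2, 1e-1]
losses = [2.30, 2.25, 1.60, 1.10, 3.50]
lr_min, lr_steep = lr_suggestions(lrs, losses)
print(f"lr_min={lr_min:.0e}, lr_steep={lr_steep:.0e}")  # lr_min=1e-03, lr_steep=1e-04
```

Dividing the minimum by 10 backs off from the point where training is about to diverge, while the steepest point targets the region where the loss is improving fastest.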

One important point to note is that we should not just use a random splitter. The reason for this is that the same person appears in multiple images in this dataset, but we want to ensure that our model can generalise to people that it hasn’t seen yet. Each folder in the dataset contains the images for one person. Therefore, we can create a splitter function which returns True for just one person, resulting in a validation set containing just that person’s images.
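The predicate at the heart of such a splitter can be sketched in plain Python. The held-out folder "13" and the file paths below are hypothetical choices; in fastai, a predicate like this can be wrapped in FuncSplitter to produce the actual splitter.

```python
from pathlib import Path

VALID_PERSON = "13"   # hypothetical choice of held-out person folder


def is_valid(image_path: Path) -> bool:
    """True for images of the held-out person, i.e. files inside that person's folder."""
    return image_path.parent.name == VALID_PERSON


files = [Path("biwi/01/frame_00003_rgb.jpg"),
         Path("biwi/13/frame_00010_rgb.jpg"),
         Path("biwi/24/frame_00121_rgb.jpg")]
valid = [f for f in files if is_valid(f)]
train = [f for f in files if not is_valid(f)]
print(len(train), len(valid))  # 2 1
```

Splitting by folder guarantees that no frame of the held-out person leaks into training, which a random split could not.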

The upsides to full-frame cameras are monumental if you prioritize image quality. When you take your first photos with a full-frame, you’ll likely gasp at how crisp the resulting photos are and how much you can crop in on them and still retain top quality. It’s difficult to say whether years of photography make you a perfectionist or whether only perfectionists stick with photography for years, but those who demand the best will reach for a full frame every time.

As usual, we can have a look at our data with the show_batch method. In this instance, the fastai library is superimposing the masks, with one specific color per class:

To label our data with the breed name, we will use a regular expression to extract it from the filename. Looking back at a filename, we have:

Taking the camera world by storm, these mighty little powerhouses are solving many long-standing issues. They have larger sensors and much better computing power than their point and shoot cousins, but don’t carry the extra weight around with them like their big brother DSLRs. In fact, many mirrorless cameras are of similar size and weight to the larger point and shoots, making them a wonderful travel camera. In addition, they give you the ability to change lenses, which further increases optical quality.

The entry-level SLR camera (Single Lens Reflex) has been setting the bar for many years, originating in the film days and transitioning nicely into the digital age. Companies like Canon and Nikon have poured huge amounts of R&D money into these, and the quality shows. Now, new sensors, processors, and general tech are making their way into smaller and lighter cameras that are getting more affordable all the time.

Inside the subdirectories, we have different frames, each of which comes with an image (\_rgb.jpg) and a pose file (\_pose.txt). We can easily get all the image files recursively with get_image_files, then write a function that converts an image filename to its associated pose file.
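A minimal sketch of that conversion function, assuming every image name ends in `_rgb.jpg` and its pose file sits in the same folder with `_pose.txt` instead (the example path is hypothetical):

```python
from pathlib import Path

RGB_SUFFIX = "_rgb.jpg"


def img2pose(image_path: Path) -> Path:
    """Map '..._rgb.jpg' to the matching '..._pose.txt' in the same folder."""
    name = image_path.name
    if not name.endswith(RGB_SUFFIX):
        raise ValueError(f"not an RGB frame: {name}")
    return image_path.with_name(name[: -len(RGB_SUFFIX)] + "_pose.txt")


print(img2pose(Path("biwi/01/frame_00003_rgb.jpg")).name)  # frame_00003_pose.txt
```

Raising on unexpected names, rather than silently slicing characters off, makes a mismatched file easy to spot.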

Since it’s pretty common to use regular expressions to label the data (often, labels are hidden in the file names), there is a factory method to do just that:

That’s pretty straightforward: for each filename, we get the different labels (separated by spaces) and the last column tells us whether it’s in the validation set or not. To get this in DataLoaders quickly, we have a factory method, from_df. We can specify the underlying path where all the images are, an additional folder to add between the base path and the filenames (here train), the valid_col to consider for the validation set (if we don’t specify this, we take a random subset), a label_delim to split the labels and, as before, item_tfms and batch_tfms.
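What label_delim and valid_col accomplish can be mimicked with the standard library. This sketch is not fastai’s from_df; it assumes a CSV with the three columns described above, and the rows are hypothetical.

```python
import csv
import io

# Hypothetical rows in the layout described above: file name, space-separated labels, is_valid flag
raw_csv = """fname,labels,is_valid
000005.jpg,chair,True
000007.jpg,car,False
000009.jpg,horse person,True
000012.jpg,car,False
"""


def split_rows(text: str, label_delim: str = " "):
    """Split each label string on label_delim and route the row by its is_valid column."""
    train, valid = [], []
    for row in csv.DictReader(io.StringIO(text)):
        item = (row["fname"], row["labels"].split(label_delim))
        (valid if row["is_valid"] == "True" else train).append(item)
    return train, valid


train, valid = split_rows(raw_csv)
print(valid[1])  # ('000009.jpg', ['horse', 'person'])
```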

While the verdict is still out on whether a crop frame DSLR or a mirrorless camera is the best body with which to upgrade from a point and shoot, crop frame DSLRs are still a standard as one’s “first good camera”.

Since classifying the exact breed of cats or dogs amongst 37 different breeds is a harder problem, we will slightly change the definition of our DataLoaders to use data augmentation:

We can also use the data block API to get our data in a DataLoaders. This is a bit more advanced, so feel free to skip this part if you are not comfortable with learning new APIs just yet.

Since these are the model that most modern photography is based on, having one in your bag means the sky is your limit. You can shoot extreme time lapses, use very high apertures, add on a myriad of accessories and off camera flashes, and put on highly coveted big zoom lenses to get incredible wildlife shots at astonishingly good quality and resolution.

These are the big daddies of the camera world, both literally and metaphorically. They are invariably much bigger than a point and shoot, and are solid hunks of camera. However, they are also the top tier in the camera world, producing the largest, sharpest, and most data-rich images out there. They have bigger sensors and come with high-end tech inside their internal computers. Thus, you are going to get huge megapixel counts out of them. The newest models at the time of publishing offer 50 megapixels or more.

The upsides to these high tech beauties are significant. As already mentioned above, they are a massive quality upgrade from a point and shoot, packing the punch of a DSLR in a small package. They are lightweight, more portable than a DSLR, and their equipment is generally priced a bit lower, too. In addition, they come in both crop frame and full frame versions, which has resulted in many photographers making the switch.

However, the biggest cost is that you lose the multiplying factor for your big lenses. That is, the 300mm that could be a 480mm on your crop frame just got shot back down to earth at 300mm. If you are mostly into landscape or cultural photography this isn’t a big deal, but for wildlife photographers, it is significant. You now either have to carry a bigger lens, buy a more expensive lens, settle for a slower lens (one with a smaller maximum aperture), or all of the above. If you’re ready to splurge on a full frame DSLR, be ready to also splurge on a new zoom lens.

To summarize, these four categories are as follows: point and shoot, mirrorless (sometimes referred to as 4/3s), crop frame DSLR, and full frame DSLR. For the sake of organization we’ll break each one down into its own section and discuss the ins and outs of them here.

The images folder contains the images, and the corresponding segmentation masks of labels are in the labels folder. The codes file contains the mapping from integer to class (the masks have an int value for each pixel).
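The integer-to-class mapping can be illustrated in plain Python, assuming the codes file lists one class name per line so that a name’s line index is its integer code. The class names and the tiny mask below are illustrative, not read from the real files.

```python
# Illustrative codes file contents: line index = integer code used in the masks
codes_text = "Animal\nArchway\nBicyclist\nBridge"
codes = codes_text.splitlines()

# A tiny hypothetical 2x3 mask: one integer code per pixel
mask = [
    [0, 0, 3],
    [2, 1, 3],
]
named = [[codes[v] for v in row] for row in mask]
print(named[1])  # ['Bicyclist', 'Archway', 'Bridge']
```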

The second component of their tech is that they generally have a more basic computer inside of them. Their ability to process colors, judge light and dark, achieve perfect focus quickly, etc., is a bit hampered by less computing power.

To label our data for the cats vs dogs problem, we need to know which filenames are of dog pictures and which ones are of cat pictures. There is an easy way to distinguish them: the name of the file begins with a capital letter for cats, and a lowercase letter for dogs:
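A label function for this rule only has to inspect the first character of the file name. A minimal sketch; the two example names are illustrative.

```python
def is_cat(filename: str) -> bool:
    """Cat images have file names starting with a capital letter; dog images do not."""
    return filename[0].isupper()


print(is_cat("Abyssinian_1.jpg"), is_cat("beagle_32.jpg"))  # True False
```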

Training a model is as easy as before: the same functions can be applied and the fastai library will automatically detect that we are in a multi-label problem, thus picking the right loss function. The only difference is in the metric we pass: error_rate will not work for a multi-label problem, but we can use accuracy_thresh and F1ScoreMulti. We can also change the default name for a metric; for instance, we may want to see F1 scores with macro and samples averaging.
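Why error_rate does not fit can be seen from how a threshold-based multi-label accuracy is scored: every (image, class) pair is judged separately. Here is a plain-Python sketch of that idea, not fastai’s implementation; it assumes the model outputs raw scores (logits) that go through a sigmoid, and the numbers are made up.

```python
import math


def sigmoid(z: float) -> float:
    return 1 / (1 + math.exp(-z))


def accuracy_at_threshold(logits, targets, thresh=0.5):
    """Fraction of (item, class) pairs where the thresholded prediction matches the target."""
    correct = total = 0
    for item_logits, item_targets in zip(logits, targets):
        for z, t in zip(item_logits, item_targets):
            correct += (sigmoid(z) > thresh) == bool(t)
            total += 1
    return correct / total


logits = [[2.0, -1.0, 0.5], [-3.0, 1.5, -0.2]]   # hypothetical model outputs
targets = [[1, 0, 0], [0, 1, 1]]                 # hypothetical one-hot targets
print(accuracy_at_threshold(logits, targets))    # 4 of 6 pairs correct
```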

The Biwi dataset web site explains the format of the pose text file associated with each image, which shows the location of the center of the head. The details of this aren’t important for our purposes, so we’ll just show the function we use to extract the head center point:

We will ignore the annotations folder for now, and focus on the images one. get_image_files is a fastai function that helps us grab all the image files (recursively) in one folder.

so the class is everything before the last _ followed by some digits. A regular expression that will catch the name is thus:
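A pattern of this shape can be checked with Python’s `re` module. In this sketch the dot before `jpg` is escaped so it only matches a literal dot, and the example file names are illustrative. A pattern like this is what a regex-based factory method such as `ImageDataLoaders.from_name_re` would be given.

```python
import re

# Breed = everything before the last "_" that is followed by digits and ".jpg"
pattern = re.compile(r"^(.*)_\d+\.jpg$")

for name in ["great_pyrenees_173.jpg", "Abyssinian_1.jpg"]:
    print(pattern.match(name).group(1))
# great_pyrenees
# Abyssinian
```

The greedy `(.*)` keeps underscores that belong to the breed name, because the match backtracks only as far as the final `_` followed by digits.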

To get our data ready for a model, we need to put it in a DataLoaders object. Here we have a function that labels using the file names, so we will use ImageDataLoaders.from_name_func. There are other factory methods of ImageDataLoaders that could be more suitable for your problem, so make sure to check them all in vision.data.

It will only do this download once, and return the location of the decompressed archive. We can check what is inside with the .ls() method.

This time we resized to a larger size before batching, and we added batch_tfms. aug_transforms is a function that provides a collection of data augmentation transforms with defaults that we found perform well on many datasets. You can customize these transforms by passing appropriate arguments to aug_transforms.

The first issue is that, being mirrorless, they do not feature an optical viewfinder as standard. If you are upgrading your point and shoot to one of these, you probably never had an optical viewfinder in the first place, so this is a moot point. However, if you are considering going from a DSLR to a mirrorless, you will have to adapt to looking through your eyepiece at a digital screen rather than a glass “periscope”. For many photo ops, this shouldn’t be a problem. However, for wildlife photography, it can be an added difficulty to focus on that lion hidden amidst thick brush, or a small bird high in the rain forest, through a digital viewfinder. Again, many people will not have an issue with this, but it’s something to be aware of. At the time this was published, several companies offered optical viewfinder accessories for their mirrorless camera models.

The fundamental reason for this lack of quality has to do with the technology and parts that go into them. For one, they have a smaller sensor. This critical part of any camera is the eye of the camera: it’s what captures the light that becomes the image you see. Having a smaller sensor means they can’t get the same detail, clarity, and overall quality as a camera with a larger sensor.

Check out the other applications like text or tabular, or the other problems covered in this tutorial, and you will see they all share a consistent API for gathering the data and looking at it, creating a Learner, training the model and looking at some predictions.

The pets object by itself is empty: it only contains the functions that will help us gather the data. We have to call the dataloaders method to get a DataLoaders. We pass it the source of the data:

Point and shoot

This isn’t meant to overwhelm you, but this is something to keep in mind when deciding on cameras. If you get a crop frame DSLR and start collecting specialized lenses, you may have to reinvest significantly should you ever wish to upgrade to a full frame camera.

However, they now have to compete with the smaller, lighter, and potentially more affordable mirrorless cameras described in the above section. As a result, companies that are firmly invested in the DSLR world are doing everything they can to make these bodies lighter, better quality, and generally more attractive.

Fortunately, the makers of crop frame cameras have specialty lenses dedicated to crop frame. Canon designates these lenses “EF-S”, adding the “S”, and Nikon affixes the “DX” label onto their crop frame lenses. The result is that they are designed only for crop frame sensors, but they do get extremely wide. For example, you can pick up a 10-22mm for a crop frame, which is effectively a 16-35mm on a full frame. Thus, very wide. However, because of how the lens is made, that same 10-22mm cannot be used on a full frame camera. The reverse is entirely feasible, though: you can always put a full frame lens on a crop frame body. It just incurs the multiplier effect.

Members of the point and shoot club range from your basic smartphone camera all the way to some pretty fancy models that share many capabilities of high end DSLRs. Some have incredible zoom ranges that go way beyond what you could get with a camera that lets you change lenses. They are flexible and extremely versatile.

The first is the price tag – they are not cheap, with most models starting in the couple thousand dollar range (note: both Canon and Nikon now have a sub-$2,000 full frame).

Another major benefit is the ability to increase your ISO to rather high levels. While ISO 1600, 2000, 3200 and above tend to get a bit “grainy” with anything else, full-frame cameras tend to retain surprisingly good quality at these higher levels. Ideal for indoor photography, or shooting wildlife at dawn or dusk, the ability to freely shoot at ISO 2000 when things are dim and still get a good shot is life-changing.

For this task, we will use the Oxford-IIIT Pet Dataset that contains images of cats and dogs of 37 different breeds. We will first show how to build a simple cat-vs-dog classifier, then a little bit more advanced model that can classify all breeds.

We can pass this function to DataBlock as get_y, since it is responsible for labeling each item. We’ll resize the images to half their input size, just to speed up training a bit.

The first line downloaded a model called ResNet34, pretrained on ImageNet, and adapted it to our specific problem. It then fine tuned that model and in a relatively short time, we get a model with an error rate of well under 1%… amazing!

Segmentation is a problem where we have to predict a category for each pixel of the image. For this task, we will use the Camvid dataset, a dataset of screenshots from cameras in cars. Each pixel of the image has a label such as “road”, “car” or “pedestrian”.

This block is slightly different than before: we don’t need to pass a function to gather all our items, as the dataframe we will give already has them all. However, we do need to preprocess the row of that dataframe to get our inputs, which is why we pass a get_x. It defaults to the fastai function noop, which is why we didn’t need to pass it along before.

We have passed to this function the directory we’re working in, the files we grabbed, our label_func and one last piece as item_tfms: this is a Transform applied to all items of our dataset that resizes each image to 224 by 224, using a random crop on the largest dimension to make it square and then resizing it. If we didn’t pass this, we would get an error later, as it would be impossible to batch the items together.
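The crop geometry can be sketched in plain Python: the square’s side equals the shorter dimension, so the random offset only varies along the longer one. This covers the crop box only; the final scaling to 224 by 224 would be done by an image library, and fastai’s Resize offers its own methods and options beyond this sketch.

```python
import random


def random_square_crop(width: int, height: int, rng: random.Random):
    """Pick a random square box (left, top, right, bottom) whose side is the shorter dimension."""
    side = min(width, height)
    left = rng.randint(0, width - side)   # always 0 when the image is taller than wide
    top = rng.randint(0, height - side)   # always 0 when the image is wider than tall
    return left, top, left + side, top + side


rng = random.Random(0)                    # seeded so the example is reproducible
box = random_square_crop(500, 375, rng)   # hypothetical 500x375 landscape photo
side = box[2] - box[0]
print(box, "scale to 224:", 224 / side)
```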
