Depth of Field – Short or Long Focal Lengths - find lens focus diameter

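Snell's law relates the angles on either side of an interface to the indices of the two media:

$$n_1 \sin\theta_1 = n_2 \sin\theta_2$$

where $n_1$ is the index of the incident medium, $\theta_1$ is the angle of the incident ray, $n_2$ is the index of the refracted/reflected medium, and $\theta_2$ is the angle of the refracted/reflected ray.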
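The relation these symbols belong to is Snell's law, $n_1 \sin\theta_1 = n_2 \sin\theta_2$. The minimal Python sketch below solves it for the refracted angle. It assumes both angles are measured from the surface normal and given in degrees; the function name `refraction_angle_deg` is hypothetical, chosen for illustration.

```python
import math
from typing import Optional


def refraction_angle_deg(n1: float, theta1_deg: float, n2: float) -> Optional[float]:
    """Solve Snell's law, n1*sin(theta1) = n2*sin(theta2), for theta2 in degrees.

    Angles are measured from the surface normal. Returns None when no
    refracted ray exists (sin(theta2) would exceed 1), which is the case
    where the light is totally internally reflected instead.
    """
    sin_theta2 = n1 * math.sin(math.radians(theta1_deg)) / n2
    if abs(sin_theta2) > 1.0:
        return None
    return math.degrees(math.asin(sin_theta2))


if __name__ == "__main__":
    # Air (n = 1.0) into glass (n = 1.5) at 30 degrees: the ray bends toward the normal.
    print(refraction_angle_deg(1.0, 30.0, 1.5))  # about 19.47
    # Glass into air at 60 degrees: no refracted ray exists.
    print(refraction_angle_deg(1.5, 60.0, 1.0))  # None
```

Returning `None` rather than raising keeps the "no refracted ray" case visible to the caller, which is the situation total internal reflection describes.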


The chromatic aberration caused by dispersion is responsible for the familiar rainbow effect one sees in optical lenses, prisms, and similar optical components. Dispersion can be a highly desirable phenomenon, as when an equilateral prism is used to split light into its component colors. However, in other applications, dispersion can be detrimental to a system's performance.

The human brain is amazing in its ability to view the world and instantly classify and categorize the objects in its field of vision. No one is born knowing what a car looks like, or how a car differs from a bus or a chair. As we grow up, we train our brains to recognize the pattern differences between a car, a bus, and a chair, and then to recall that information instantly. A computer also does not know the difference between a car, a bus, or a chair. Rather, the computer is trained on the differences between these objects, and it sees them differently than humans do. A computer sees only a collection, or matrix, of numbers that represents an image, and within that image are a number of objects.


One area of interest is measuring the number of immune cells in cancer patients. Why do some patients succumb to cancer much more quickly than others? One theory is that some patients have a much better immune response. Alongside detecting cancerous cells, measuring the level of immune response provides an indicator of patient prognosis.

Computer vision uses machine learning and deep learning techniques to allow computers to "see" not only what humans can see, but also beyond the human visual spectrum. In some cases, computers can even see inside solid objects.

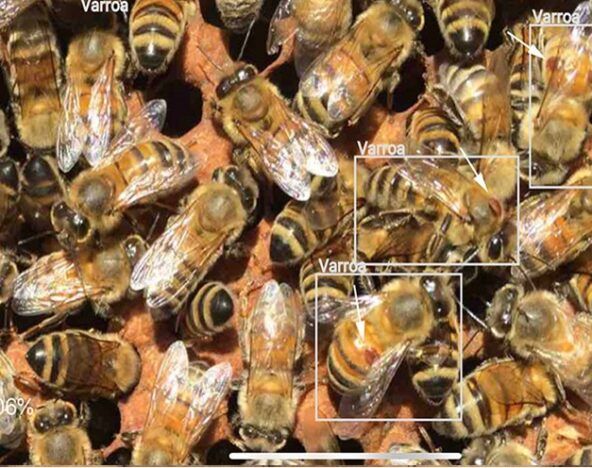

There are many threats to a healthy beehive, but mites that attack a hive can devastate it quickly. Beekeepers are creating an AI and computer vision system to detect when mites have attached themselves to bees and to remove the mites in ways that won’t harm the bees.


Many articles have been written about how AI and computer vision can read X-rays and MRIs with near-human accuracy. Today, computer vision can ‘see’ anomalies in images that the human eye cannot. So while its accuracy might not exceed that of humans, the depth and breadth of what a computer and an algorithm can see is much greater. By using computer vision to augment the abilities of medical professionals, we can now see more than ever.

The history of technology's evolution is substantial. Suffice it to say that each wave of technology modernization came about to solve a previous technology problem or need. Desktop applications were developed because everyone had a personal computer and wanted to “digitize” their processes. This led to massive data duplication, inconsistencies, and security problems.

Doctors can now stain cells to highlight the immune cells, an amazing feat in and of itself. But how does a doctor count and analyze the immune cells versus the cancerous cells? This is where computer vision plays an important role. Computer vision systems can be trained to differentiate between cancerous and immune cells and to color-code them, showing the ratio of immune cells to cancer cells. Without computer vision augmenting our view of this microscopic world, it would be difficult to measure this relationship.

The most talked-about application of object detection is autonomous vehicles and how they detect other vehicles, pedestrians, traffic control systems, and so on. A lesser-known example is detecting the location of the stem in a head of lettuce. Industrial machines designed to de-core heads of lettuce use computer vision and object detection to tell the machine where in the image the stem is located and how large its bounding box is, so the machine can de-core the lettuce automatically.

Light is a type of electromagnetic radiation, usually characterized by its wavelength, denoted lambda (λ). Wavelength is commonly measured in nanometers (nm, 10⁻⁹ m) or micrometers (μm, 10⁻⁶ m). The electromagnetic spectrum encompasses all wavelengths of radiation, from long wavelengths (radio waves) to very short wavelengths (gamma rays); Figure 1 illustrates this vast spectrum. The wavelengths most relevant to optics are the ultraviolet, visible, and infrared ranges. Ultraviolet (UV) rays, defined as 1–400 nm, are used in tanning beds and are responsible for sunburns. Visible rays, defined as 400–750 nm, comprise the part of the spectrum that can be perceived by the human eye and make up the colors people see. The visible range is responsible for rainbows and the familiar ROYGBIV, the mnemonic many learn in school to memorize the colors of visible light in order from the longest wavelength to the shortest. Lastly, infrared (IR) rays, defined as 750 nm to 1000 μm, are used in heating applications. IR radiation can be broken up further into near-infrared (750 nm to 3 μm), mid-wave infrared (3–30 μm), and far-infrared (30–1000 μm).
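One way to make these band definitions concrete is a small lookup that maps a wavelength to its named range. This is a sketch: the band edges are the ones quoted in the paragraph (converted to nanometers, with 1 μm = 1000 nm). Treating each range as half-open, so that 400 nm counts as visible rather than UV, is an assumption made here, because the text does not say which band owns a boundary value.

```python
# Band edges in nanometers, from the ranges quoted above.
# ASSUMPTION: each range is half-open [low, high) so boundary values
# (e.g. 400 nm, 750 nm) fall into exactly one band.
BANDS = [
    (1.0, 400.0, "ultraviolet (UV)"),
    (400.0, 750.0, "visible"),
    (750.0, 3_000.0, "near-infrared (NIR)"),
    (3_000.0, 30_000.0, "mid-wave infrared (MWIR)"),
    (30_000.0, 1_000_000.0, "far-infrared (FIR)"),
]


def classify_wavelength(wavelength_nm: float) -> str:
    """Return the name of the optical band containing a wavelength given in nm."""
    for low, high, name in BANDS:
        if low <= wavelength_nm < high:
            return name
    return "outside the UV/visible/IR ranges listed above"


if __name__ == "__main__":
    for wl in (254.0, 532.0, 1550.0, 10_600.0):
        print(f"{wl:>8.1f} nm -> {classify_wavelength(wl)}")
```

A table of tuples keeps the band edges in one place, so a different convention for the IR sub-bands only requires editing `BANDS`.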


When light travels from a higher-index medium into a lower-index medium and the angle of incidence exceeds the critical angle θc (the angle of incidence at which the angle of refraction equals 90°), the light is reflected instead of refracted. This process is referred to as total internal reflection (TIR). Figure 6 illustrates TIR within a given medium.
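Setting the refraction angle to 90° in Snell's law ($n_1 \sin\theta_1 = n_2 \sin\theta_2$) gives the critical angle directly: $\theta_c = \arcsin(n_2 / n_1)$, which only exists when $n_1 > n_2$. The Python sketch below computes it; the function names are hypothetical, and the diamond index of about 2.42 in the usage lines is an approximate textbook value.

```python
import math


def critical_angle_deg(n1: float, n2: float) -> float:
    """Critical angle in degrees for light travelling from index n1 into index n2.

    Setting the refraction angle to 90 degrees in Snell's law gives
    n1*sin(theta_c) = n2, so theta_c = asin(n2 / n1).
    """
    if n1 <= n2:
        raise ValueError("TIR needs n1 > n2: light must go from higher to lower index")
    return math.degrees(math.asin(n2 / n1))


def undergoes_tir(theta_i_deg: float, n1: float, n2: float) -> bool:
    """True when a ray at incidence angle theta_i (from the normal) is totally reflected."""
    # The n1 > n2 check short-circuits, so critical_angle_deg never raises here.
    return n1 > n2 and theta_i_deg > critical_angle_deg(n1, n2)


if __name__ == "__main__":
    print(round(critical_angle_deg(1.5, 1.0), 2))   # glass to air, about 41.81
    print(round(critical_angle_deg(2.42, 1.0), 1))  # approximate diamond to air, about 24.4
    print(undergoes_tir(45.0, 1.5, 1.0))            # True
```

The small critical angle for a high-index material such as diamond is why so many internal rays exceed it and are reflected.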

Salmon ocean farms are using AI and computer vision to detect parasites on salmon and to direct low-energy lasers that "zap" the parasites off the fish. AI algorithms detect the parasites and instruct the laser where to focus to kill them. Such systems help keep ocean farms safe for the salmon and can work around the clock in all weather.

Pose recognition can be used to monitor a patient’s progress in a physical therapy program. It recognizes key body landmarks such as shoulders, elbows, wrists, knees, and facial features, and this information can be used to determine a person’s pose in real time. Pose recognition can measure posture during an activity, how long the patient has held a pose, and whether the patient achieved the desired outcomes. In the spirit of augmentation, this usage would not replace the physical therapist; rather, it would augment their one-on-one instruction, helping to monitor how a patient is progressing and whether adjustments need to be made.
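As a hedged illustration of how landmark output might be turned into the posture and hold-time measurements described above, here is a minimal Python sketch. It assumes a pose-recognition model has already produced 2D (x, y) coordinates for each landmark in every video frame. The helper names `joint_angle_deg` and `seconds_held`, and the target angle, tolerance, and frame rate in the usage lines, are all hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]  # (x, y) pixel coordinates of a detected landmark


def joint_angle_deg(a: Point, b: Point, c: Point) -> float:
    """Angle at landmark b formed by the segments b->a and b->c, in degrees.

    For an elbow, pass a = shoulder, b = elbow, c = wrist.
    """
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    lengths = math.hypot(*v1) * math.hypot(*v2)
    if lengths == 0:
        raise ValueError("landmarks must be distinct points")
    cos_angle = (v1[0] * v2[0] + v1[1] * v2[1]) / lengths
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard acos against rounding error
    return math.degrees(math.acos(cos_angle))


def seconds_held(angles_deg: Sequence[float], target_deg: float,
                 tolerance_deg: float, fps: float) -> float:
    """Longest run of consecutive frames within tolerance of the target, in seconds."""
    best = run = 0
    for angle in angles_deg:
        run = run + 1 if abs(angle - target_deg) <= tolerance_deg else 0
        best = max(best, run)
    return best / fps


if __name__ == "__main__":
    shoulder, elbow, wrist = (0.0, 0.0), (1.0, 0.0), (1.0, 1.0)
    print(joint_angle_deg(shoulder, elbow, wrist))  # a right angle, 90 degrees
    per_frame = [90, 91, 89, 120, 90, 90]           # hypothetical elbow angles per frame
    print(seconds_held(per_frame, target_deg=90, tolerance_deg=5, fps=2))  # 1.5
```

The hold timer resets whenever a frame drifts outside the tolerance, so a brief wobble ends the current hold rather than being averaged away.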

The basic theoretical foundations governing the electromagnetic spectrum, interference, reflection, refraction, dispersion, and diffraction are important stepping stones to more complex optical concepts. Light's wave properties explain a great deal of optics, and a grasp of these fundamentals can greatly improve one's understanding of how light interacts with a variety of optical, imaging, and photonics components.

Isaac Newton (1643–1727) was one of the first physicists to propose that light was composed of small particles. A century later, Thomas Young (1773–1829) proposed a new theory of light that demonstrated light's wave qualities. In his double-slit experiment, Young passed light through two closely spaced slits and found that the light interfered with itself (Figure 2). This interference could not be explained if light were purely a particle, but could be if light were a wave. Though light has both particle and wave characteristics, known as wave-particle duality, the wave theory of light is the more important one in optics, while the particle theory matters more in other branches of physics.
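The paragraph does not give the geometry of Young's fringes, so this Python sketch uses the standard two-slit relations as an assumption: bright fringes occur where $d \sin\theta = m\lambda$ (slit separation $d$, order $m$), and for small angles adjacent bright fringes are spaced by about $\lambda L / d$ on a screen at distance $L$. The 632.8 nm wavelength, 0.25 mm slit spacing, and 1 m screen distance are illustrative values only.

```python
import math


def bright_fringe_position_m(order: int, wavelength_m: float,
                             slit_separation_m: float, screen_distance_m: float) -> float:
    """Exact screen position of the m-th bright fringe: d*sin(theta) = m*lambda, y = L*tan(theta)."""
    sin_theta = order * wavelength_m / slit_separation_m
    if abs(sin_theta) > 1.0:
        raise ValueError("this fringe order does not exist for these parameters")
    return screen_distance_m * math.tan(math.asin(sin_theta))


def fringe_spacing_small_angle_m(wavelength_m: float, slit_separation_m: float,
                                 screen_distance_m: float) -> float:
    """Small-angle approximation of the distance between adjacent bright fringes."""
    return wavelength_m * screen_distance_m / slit_separation_m


if __name__ == "__main__":
    # 632.8 nm light, slits 0.25 mm apart, screen 1 m away.
    print(fringe_spacing_small_angle_m(632.8e-9, 0.25e-3, 1.0))  # about 2.53e-3 m
    print(bright_fringe_position_m(1, 632.8e-9, 0.25e-3, 1.0))   # nearly identical
```

Comparing the exact and approximate results shows why the simple $\lambda L / d$ form is usually good enough: with slits much wider apart than the wavelength, the first-order angle is tiny.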


Take a moment to consider how computer vision might be used in your industry. Is there an opportunity that needs to be explored? Together, we can help you identify opportunities where computer vision and AI could help you work more efficiently.



To detect objects, a computer must be shown many examples of the objects we need the algorithm to recognize. This process is called ‘training’ a model, where a model is an ordered collection of mathematical operations. The models most commonly trained for computer vision are ‘convolutional neural networks’ (CNNs). These networks allow the computer to identify meaningful patterns for each object. After the model is trained, the computer uses it to check whether those meaningful patterns exist in a new image.
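To make "a matrix of numbers" and "meaningful patterns" concrete, this Python sketch implements the core operation of a convolutional layer on a tiny hand-made grayscale image. It is not training: the vertical-edge kernel below is written by hand, whereas a CNN learns its kernel weights from the training examples. Like most deep learning libraries, it computes a cross-correlation (the kernel is not flipped), which CNN literature conventionally calls convolution, with no padding and a stride of 1.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide the kernel over every position where it fits inside the image
    ('valid' mode, no padding, stride 1) and sum the element-wise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# A 3x6 grayscale image: dark (0) on the left, bright (255) on the right.
image = [[0, 0, 0, 255, 255, 255] for _ in range(3)]

# Hand-written vertical-edge detector; a trained CNN would learn weights like these.
kernel = [[-1, 0, 1] for _ in range(3)]

if __name__ == "__main__":
    print(conv2d_valid(image, kernel))  # [[0, 765, 765, 0]]
```

The output is large only where the window straddles the dark-to-bright boundary and zero over uniform regions, which is the sense in which a kernel "detects" a pattern.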

The interference patterns created by Thomas Young's double-slit experiment can also be characterized by the phenomenon known as diffraction. Diffraction usually occurs when waves pass through a narrow slit or around a sharp edge. In general, the more comparable the width of the slit or object is to the wavelength of light it encounters, the more pronounced the diffraction; when the opening is much larger than the wavelength, diffraction is slight. One of the best demonstrations of diffraction uses diffraction gratings. A diffraction grating's closely spaced, parallel grooves cause incident monochromatic light to bend, or diffract. The degree of diffraction creates specific interference patterns. Figures 9 and 10 illustrate various patterns achieved with diffractive optics. Diffraction is the underlying theoretical foundation behind many applications using diffraction gratings, spectrometers, monochromators, laser projection heads, and a host of other components.
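For a grating illuminated at normal incidence, the standard grating equation $d \sin\theta_m = m\lambda$ (groove spacing $d$, diffraction order $m$) sets where each diffracted beam goes. This Python sketch assumes normal incidence and lists the angle of every order that can propagate; the 600 lines/mm grating and 532 nm wavelength in the usage lines are illustrative values.

```python
import math
from typing import Dict


def grating_orders_deg(wavelength_nm: float, lines_per_mm: float) -> Dict[int, float]:
    """Angles (degrees) of all propagating diffraction orders at normal incidence.

    Uses d*sin(theta_m) = m*lambda. Orders with |m*lambda/d| > 1 would need
    sin(theta) > 1, so they do not propagate and are left out.
    """
    d_nm = 1e6 / lines_per_mm  # groove spacing; 1 mm = 1e6 nm
    max_order = int(d_nm // wavelength_nm)
    orders = {}
    for m in range(-max_order, max_order + 1):
        ratio = m * wavelength_nm / d_nm
        ratio = max(-1.0, min(1.0, ratio))  # guard against rounding just past +/-1
        orders[m] = math.degrees(math.asin(ratio))
    return orders


if __name__ == "__main__":
    for order, angle in grating_orders_deg(532.0, 600.0).items():
        print(f"m = {order:+d}: {angle:6.2f} deg")
```

Because each order's angle depends on $\lambda$, a grating sends different wavelengths to different angles, which is what spectrometers and monochromators exploit.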


How far has the industry come using computer vision? From agriculture to medicine, from insurance to industrial applications, applying computer vision techniques can unlock capabilities never before possible. Let’s look at its short history and highlight some innovative ways computer vision and AI are solving problems today.


In one study, researchers trained a system that showed cancer cells in regions low in immune cells are more likely to trigger a relapse. This makes intuitive sense, but now doctors can actually see these areas and the extent of the low-immune-cell regions.

Reflection is the change in direction of a wavefront when it hits an object and returns at an angle. The law of reflection states that the angle of incidence (the angle at which light approaches the surface) is equal to the angle of reflection (the angle at which light leaves the surface), both measured from the surface normal. Figure 4 illustrates reflection from a first-surface mirror. If the reflecting surface is smooth, parallel incident rays remain parallel after reflection; this is called specular, or regular, reflection. If the surface is rough, the reflected rays will not be parallel; this is referred to as diffuse, or irregular, reflection. Mirrors are known for their reflective qualities, which are determined by the material used and the coating applied.
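The law of reflection can also be written in vector form, a form commonly used in ray tracing: for a ray direction $\mathbf{d}$ and unit surface normal $\mathbf{n}$, the reflected direction is $\mathbf{r} = \mathbf{d} - 2(\mathbf{d}\cdot\mathbf{n})\mathbf{n}$. This minimal 2D Python sketch (function names hypothetical) checks that the incident and reflected angles, measured from the normal, come out equal.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]


def reflect(direction: Vec2, normal: Vec2) -> Vec2:
    """Mirror a ray direction about a surface normal: r = d - 2 (d . n) n."""
    length = math.hypot(*normal)
    nx, ny = normal[0] / length, normal[1] / length  # the formula needs a unit normal
    dot = direction[0] * nx + direction[1] * ny
    return (direction[0] - 2 * dot * nx, direction[1] - 2 * dot * ny)


def angle_from_normal_deg(ray: Vec2, normal: Vec2) -> float:
    """Unsigned angle between a ray and the normal line, folded into 0-90 degrees."""
    cos_angle = abs(ray[0] * normal[0] + ray[1] * normal[1]) / (
        math.hypot(*ray) * math.hypot(*normal))
    return math.degrees(math.acos(min(1.0, cos_angle)))


if __name__ == "__main__":
    mirror_normal = (0.0, 1.0)  # a horizontal mirror
    incoming = (math.sin(math.radians(30)), -math.cos(math.radians(30)))
    outgoing = reflect(incoming, mirror_normal)
    print(angle_from_normal_deg(incoming, mirror_normal))  # about 30
    print(angle_from_normal_deg(outgoing, mirror_normal))  # about 30: equal angles
```

On a rough surface the local normal varies from point to point, so applying the same rule to parallel incoming rays produces the scattered directions of diffuse reflection.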



Computer vision can be used to analyze chest X-rays and determine whether an X-ray indicates COVID-19 or, potentially, pneumonia. Using computer vision, deep learning, and thousands of training images, a model can be created to differentiate between pneumonia, heart failure, COVID-19, and other illnesses. The differences are subtle enough that it takes a trained clinician to recognize them, and finding subtle differences in X-ray patterns is exactly what deep learning does well. As cardiologist Ramsey Wehbe has said: “AI doesn’t confirm whether or not someone has the virus. But if we can flag a patient with this algorithm, we could speed up triage before the test results come back.” This is exactly where AI can augment the skills of a trained clinician for better patient outcomes.

Interference occurs when two or more waves of light add together to form a new pattern. Constructive interference occurs when the waves are in phase, so that peaks align with peaks and troughs align with troughs, while destructive interference occurs when the troughs of one wave align with the peaks of the other (Figure 3). In Figure 3, the peaks are indicated with blue and the troughs with red and yellow. Constructive interference of two waves results in brighter bands of light, whereas destructive interference results in darker bands. In terms of sound waves, constructive interference can make sound louder, while destructive interference can cause dead spots where sound cannot be heard.
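For the simplest case behind the bright and dark bands described above, two waves of equal amplitude $A$ and the same frequency, the identity $A\sin(\omega t) + A\sin(\omega t + \phi) = 2A\cos(\phi/2)\sin(\omega t + \phi/2)$ gives the combined amplitude directly. This Python sketch assumes those equal-amplitude, same-frequency conditions and that intensity scales with amplitude squared; the function names are hypothetical.

```python
import math


def combined_amplitude(amplitude: float, phase_difference_rad: float) -> float:
    """Amplitude of A*sin(wt) + A*sin(wt + phi), which equals |2*A*cos(phi/2)|."""
    return abs(2.0 * amplitude * math.cos(phase_difference_rad / 2.0))


def relative_intensity(phase_difference_rad: float) -> float:
    """Intensity of the two-wave sum relative to one wave alone (intensity ~ amplitude**2)."""
    return combined_amplitude(1.0, phase_difference_rad) ** 2


if __name__ == "__main__":
    for label, phi in [("in phase", 0.0), ("quarter cycle", math.pi / 2),
                       ("half cycle", math.pi)]:
        print(f"{label:>13}: relative intensity {relative_intensity(phi):.3f}")
```

In phase, the sum is four times as intense as one wave alone (a bright band); half a cycle out of phase, it cancels to zero (a dark band).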

Computer vision with AI has revolutionized industries and will continue to do so. While computer vision technology has the potential to eliminate some jobs, it also has the potential to perform tasks that humans cannot. As with any disruptive technology, there will be disruption to the status quo.

Optics is the branch of physics that deals with light and its properties and behavior. It is a vast science covering many simple and complex subjects ranging from the reflection of light off a metallic surface to create an image, to the interaction of multiple layers of coating to create a high optical density rugate notch filter. As such, it is important to learn the basic theoretical foundations governing the electromagnetic spectrum, interference, reflection, refraction, dispersion, and diffraction before picking the best component for one's optics, imaging, and/or photonics applications.

There are more than 140,000 miles of railroad track. In 2018, the industry spent an average of $260,000 per mile on maintenance, funding, and future needs. Identifying maintenance issues before they cause a disruption anywhere on those 140,000 miles is paramount to running the railway system efficiently. How can all 140,000 miles of track be inspected and analyzed for potential defects? The answer is to mount cameras on railway cars that are already operating on the tracks, to visually inspect every inch of the railroad track and the track bed. This allows continuous monitoring of the railroad infrastructure while the tracks are in use. Computer vision is used to capture high-speed, high-resolution pictures of the tracks and the track bed. These images are analyzed later and defects are scored, so that a person can then triage the most important projects.

Computer vision is capable of great things. But as with any advancing technology, it also has the capacity to do great harm. Using these technologies will always require a balance between augmenting human capability and maintaining the privacy of individuals.

Every step in the fast-paced bottling process is monitored and managed, particularly the final step of filling the bottle. Making sure every bottle is filled to tolerance at the required rate is impossible for a human, and statistical sampling is too slow to react to an issue. Computer vision can be used to monitor liquid levels and flag the exact bottle that is out of tolerance, meaning no one will be left with a beer that leaves them wanting a little more.


Artificial intelligence (AI) and computer vision are all around us. A range of industries use AI and computer vision to accomplish things like automating tasks, improving the environment and saving lives.


Interference is an important theoretical foundation in optics. Thinking of light as waves of radiation, similar to ripples in water, can be extremely useful. In addition, this wave picture of light makes the concepts of reflection, refraction, dispersion, and diffraction discussed in the following sections easier to grasp.

While reflection causes the angle of incidence to equal the angle of reflection, refraction occurs when a wavefront changes direction as it passes from one medium into another. The degree of refraction depends on the wavelength of light and the index of refraction of the medium. Index of refraction (n) is the ratio of the speed of light in a vacuum (c) to the speed of light within a given medium (v), as expressed by Equation 1:

$$n = \frac{c}{v} \qquad (1)$$

Index of refraction quantifies the effect of light slowing down as it enters a high-index medium from a low-index medium (Figure 5).
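Equation 1, $n = c/v$, can be rearranged to find how fast light travels in a medium of known index. A minimal Python sketch using the exact SI value of $c$; the index of 1.5 in the usage lines is an illustrative value for a typical glass, and the function names are hypothetical.

```python
C_VACUUM_M_PER_S = 299_792_458.0  # speed of light in vacuum, exact in SI units


def refractive_index(v_m_per_s: float) -> float:
    """Equation 1: n = c / v."""
    return C_VACUUM_M_PER_S / v_m_per_s


def speed_in_medium(n: float) -> float:
    """Equation 1 rearranged: v = c / n, in meters per second."""
    return C_VACUUM_M_PER_S / n


if __name__ == "__main__":
    v_glass = speed_in_medium(1.5)
    print(f"{v_glass:.3e} m/s")       # about 2.0e8 m/s
    print(refractive_index(v_glass))  # recovers n, about 1.5
```

A higher index means a lower speed, which is the "slowing down" that the index of refraction quantifies.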

Total internal reflection is responsible for the sparkle one sees in diamonds. Due to their high index of refraction, diamonds exhibit a high degree of TIR, which causes them to reflect light at a variety of angles, or sparkle. Another notable example of TIR is in fiber optics, where light entering one end of a glass or plastic optical fiber undergoes many reflections along the fiber's length until it exits the other end (Figure 7). Since TIR occurs only beyond a critical angle, optical fibers have specific acceptance angles and minimum bend radii, which dictate, respectively, the largest angle at which light can enter the fiber and still be totally internally reflected, and the smallest radius to which the fiber can be bent while still achieving TIR.
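The acceptance angle mentioned above follows from applying Snell's law twice: once at the core/cladding boundary, where the ray must exceed the critical angle, and once at the fiber's entrance face. For a step-index fiber (an assumption here, since the text does not specify a fiber type), this gives the numerical aperture $\mathrm{NA} = \sqrt{n_\text{core}^2 - n_\text{clad}^2}$ and a maximum entry half-angle $\theta_\text{max} = \arcsin(\mathrm{NA}/n_0)$, where $n_0$ is the index outside the fiber. The core and cladding indices in the usage lines are hypothetical illustrative values.

```python
import math


def numerical_aperture(n_core: float, n_cladding: float) -> float:
    """NA of a step-index fiber: sqrt(n_core^2 - n_cladding^2)."""
    if n_core <= n_cladding:
        raise ValueError("guiding by TIR requires n_core > n_cladding")
    return math.sqrt(n_core**2 - n_cladding**2)


def acceptance_half_angle_deg(n_core: float, n_cladding: float,
                              n_outside: float = 1.0) -> float:
    """Largest entry angle (from the fiber axis) that is still guided by TIR."""
    na = numerical_aperture(n_core, n_cladding)
    if na >= n_outside:
        return 90.0  # every ray entering the end face is guided
    return math.degrees(math.asin(na / n_outside))


if __name__ == "__main__":
    print(round(numerical_aperture(1.48, 1.46), 4))         # about 0.2425
    print(round(acceptance_half_angle_deg(1.48, 1.46), 1))  # about 14.0 degrees in air
```

A small index difference between core and cladding gives a narrow acceptance cone, which is why light must be launched close to the fiber axis.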

Dispersion is a measure of how much the index of refraction of a material changes with respect to wavelength. Dispersion also determines the separation of wavelengths known as chromatic aberration (Figure 8). A glass with high dispersion will separate light more than a glass with low dispersion. One way to quantify dispersion is to express it by Abbe number. Abbe number (vd) is a function of the refractive index of a material at the f
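The Abbe number is conventionally defined as $v_d = (n_d - 1)/(n_F - n_C)$, using the refractive indices at the d (587.6 nm), F (486.1 nm), and C (656.3 nm) spectral lines; because the index spread $n_F - n_C$ sits in the denominator, a higher-dispersion glass gets a lower Abbe number. This Python sketch computes it. The two sets of indices in the usage lines are hypothetical values, roughly in the range of a crown glass and a flint glass, chosen only to illustrate the comparison.

```python
def abbe_number(n_d: float, n_F: float, n_C: float) -> float:
    """Abbe number v_d = (n_d - 1) / (n_F - n_C).

    n_d: refractive index at the d line (587.6 nm)
    n_F: refractive index at the F line (486.1 nm)
    n_C: refractive index at the C line (656.3 nm)
    """
    spread = n_F - n_C  # how much the index changes across the visible band
    if spread <= 0:
        raise ValueError("expected normal dispersion (n_F > n_C)")
    return (n_d - 1.0) / spread


if __name__ == "__main__":
    crown_like = abbe_number(1.5168, 1.5224, 1.5143)  # hypothetical indices
    flint_like = abbe_number(1.6200, 1.6321, 1.6150)  # hypothetical indices
    print(f"crown-like glass: v_d = {crown_like:.1f}")  # higher v_d, lower dispersion
    print(f"flint-like glass: v_d = {flint_like:.1f}")  # lower v_d, higher dispersion
```

The flint-like glass has the larger index spread, so it separates wavelengths more and scores the lower Abbe number.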

Not to belabor the point, but 2020/2021 was all about social safety guidelines. Computer vision systems can detect whether someone is wearing a mask and check their temperature before allowing entry to a public place. A people-counting system can tell you the occupancy level of a store before you enter, and can likewise help establishments know when they have reached a safe capacity that allows for adequate physical distancing. Computer vision can also be used to determine whether workers are wearing the appropriate safety gear and protective equipment, like safety glasses and hard hats, before allowing entrance to the work site. Computer vision can be used to help inform and remind everyone in the workplace of the necessary safety measures.

The year was 1957 when Russell Kirsch, an American engineer at the National Bureau of Standards, created the first digital image scanner. Kirsch used his access to the ‘Standards Electronic Automatic Computer’ (SEAC) to create a digital image of his newborn baby. The picture was only 176×176 pixels, grayscale, and grainy; however, it is one of the “100 Photographs That Changed the World” as ranked by Life magazine. In the 60 years since, advances in optics, computing power, and powerful machine learning algorithms have made computer vision capable of real-time analysis of images and videos to detect objects, people, faces, poses, structural integrity issues, anomalies in X-rays/MRIs, and temperature differences that the human eye is incapable of seeing. Computer vision, paired with audio augmentation, can even help the blind interact with the world around them like never before. The possibilities for how computer vision can transform lives and industries are limited, to use a cliché, only by our imaginations.

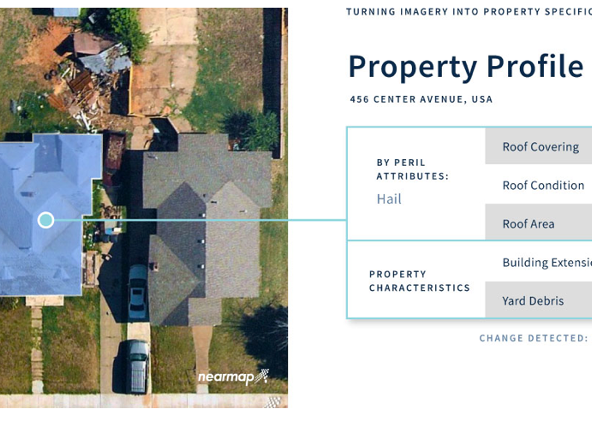

Insurance companies are using computer vision and drones to collect high-resolution pictures of a home’s roof and overall footprint. From this data, along with additional data about the home, insurance companies can provide quick quotes and settlements. Adjusters need to be dispatched only when a claim cannot be settled by the computer vision application. This lets insurance companies reduce the cost of claims adjustment on easy determinations and frees adjusters to work on the more difficult claims.
