cW Value - Definition, Facts, Explanation | MeinAuto Lexikon - c/w meaning

When normalized to the total power of the beam, P(∞) in watts, the curve is the same as that for intensity, but with the ordinate inverted. Nearly 100% of the power is contained within a radius r = 2ω0. One-half of the power is contained within 0.59ω0, and only about 10% of the power is contained within 0.23ω0, the radius at which the intensity has decreased by 10%. The total power, P(∞) in watts, is related to the on-axis intensity, I(0) in W/m², by:

$$P(\infty) = \frac{\pi \omega_0^2}{2}\, I(0)$$
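The power fractions quoted above can be checked numerically. The sketch below assumes the standard Gaussian profile I(r) = I(0)·exp(−2r²/ω0²), for which the fraction of power inside radius r is 1 − exp(−2r²/ω0²). The function name is illustrative only.

```python
import math

def enclosed_power_fraction(r_over_w0: float) -> float:
    """Fraction of total beam power inside radius r, with r given in units of w0.

    Assumes an ideal Gaussian intensity profile exp(-2 r^2 / w0^2).
    """
    return 1.0 - math.exp(-2.0 * r_over_w0 ** 2)

# Radii mentioned in the text, expressed as multiples of w0.
for ratio in (0.23, 0.59, 1.0, 2.0):
    fraction = enclosed_power_fraction(ratio)
    print(f"r = {ratio:.2f} w0 -> {fraction:.4f} of total power")
```

Running it should give roughly 10% at 0.23ω0, 50% at 0.59ω0, and very nearly 100% at 2ω0, in line with the figures in the paragraph.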

where ω0 is the beam radius at x = 0 and λ is the wavelength. The entire beam behavior is specified by these two parameters, and because they occur in the same combination in both equations, they are often merged into a single parameter, xR, the Rayleigh range:

$$x_R = \frac{\pi \omega_0^2}{\lambda}$$

The Gaussian has no obvious boundaries to give it a characteristic dimension like the diameter of the circular aperture, so the definition of the size of a Gaussian is somewhat arbitrary. Figure 1 shows the Gaussian intensity distribution of a typical HeNe laser.

As mentioned above, the larger the pixel is, the more light it can absorb. This basically means that larger pixels can gather more information. This is important when it comes to dynamic range and low-light performance.

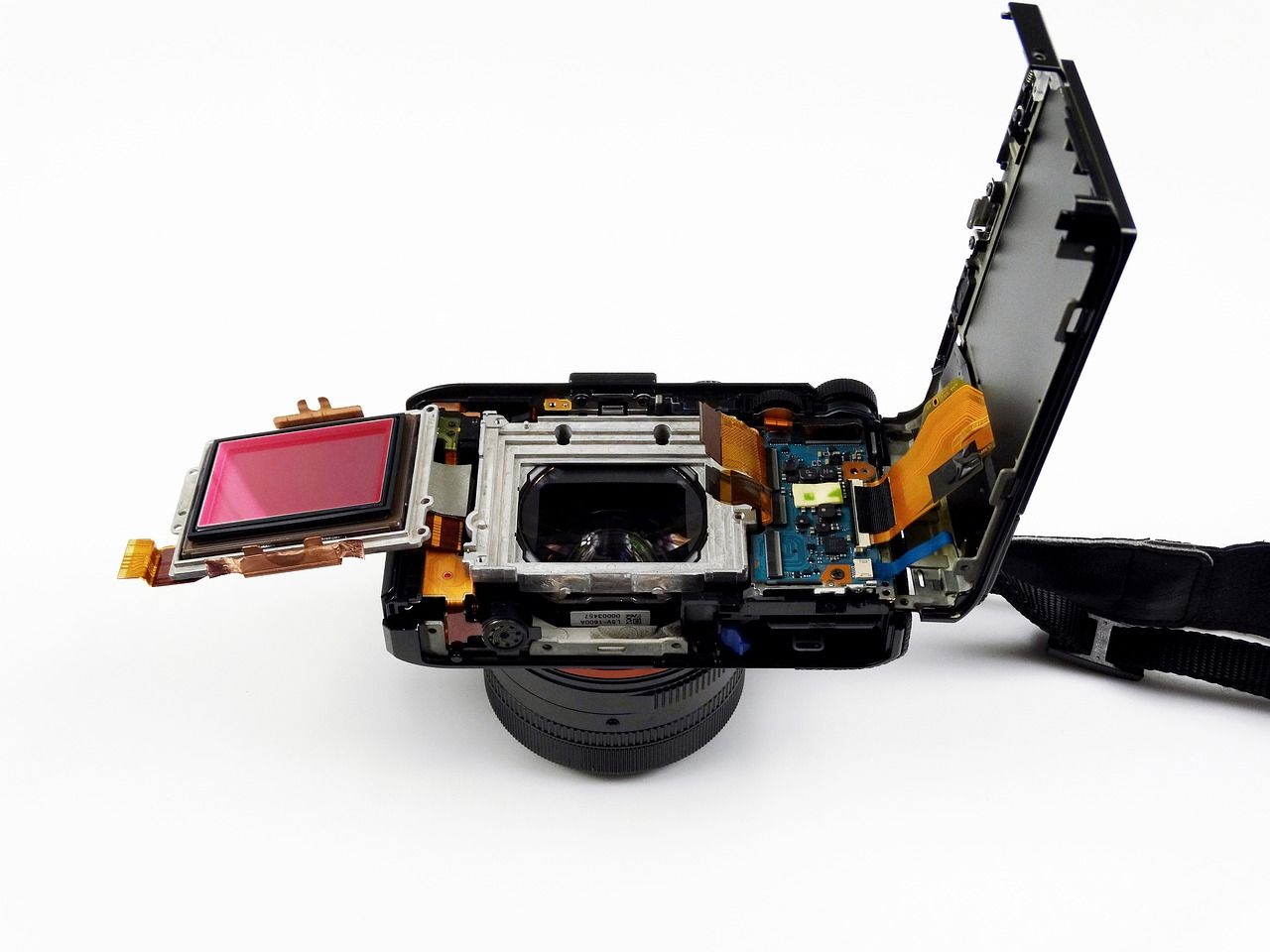

The sensor is the most important part of a digital camera. It is what determines the level of image quality that is achievable with any particular camera. For instance, the sensor is responsible for the resolution, image size, low-light performance, dynamic range, and depth of field.

Pixels can vary considerably in size. An 18-megapixel sensor will have bigger pixels than a 24-megapixel sensor of the same size: each pixel has about one-third more area.
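To put rough numbers on this, here is a minimal sketch that estimates pixel pitch from sensor dimensions and pixel count. It assumes square pixels that tile the entire sensor with no gaps, which real sensors only approximate, and it uses a 36mm by 24mm sensor purely as an example.

```python
import math

def pixel_pitch_um(width_mm: float, height_mm: float, megapixels: float) -> float:
    """Approximate pixel pitch in micrometres.

    Assumes square pixels covering the whole sensor area (an idealization).
    """
    area_um2 = (width_mm * 1000.0) * (height_mm * 1000.0)
    return math.sqrt(area_um2 / (megapixels * 1e6))

pitch_18 = pixel_pitch_um(36.0, 24.0, 18)
pitch_24 = pixel_pitch_um(36.0, 24.0, 24)
area_ratio = (pitch_18 / pitch_24) ** 2

print(f"18 MP pitch: {pitch_18:.2f} um")
print(f"24 MP pitch: {pitch_24:.2f} um")
print(f"Area per pixel, 18 MP vs 24 MP: {area_ratio:.2f}x")
```

Under these assumptions the pixel area ratio depends only on the ratio of pixel counts, not on the sensor size chosen.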

As mentioned above, the most important thing to consider is the size of the sensor. Primarily, this is because the larger the sensor, the more light it can use to create an image. The most common sensor sizes are listed below.

We have invoked the approximation tanθ ≈ θ since the angles are small. Since the origin can be approximated by a point source, θ is given by geometrical optics as the diameter illuminated on the lens, D, divided by the focal length of the lens.

The main advantage of a higher pixel count is the ability to crop more. The more pixels you have, the higher the resolution of the image. This can equate to more detail, which means that it is possible to crop into the image much more without sacrificing detail or image quality.

or about 160 µm. If we were to change the focal length of the lens in this example to 100 mm, the focal spot size would increase 10 times to 80 µm, or 8% of the original beam diameter. The depth of focus would increase 100 times to 16 mm. However, suppose we increase the focal length of the lens to 2,000 mm. The “focal spot size” given by our simple equation would be 200 times larger, or 1.6 mm, 60% larger than the original beam! Obviously, something is wrong. The trouble is not with the equations giving ω(x) and R(x), but with the assumption that the beam waist occurs at the focal distance from the lens. For weakly focused systems, the beam waist does not occur at the focal length. In fact, the position of the beam waist changes contrary to what we would expect in geometric optics: the waist moves toward the lens as the focal length of the lens is increased. However, we could easily believe the limiting case of this behavior by noting that a lens of infinite focal length such as a flat piece of glass placed at the beam waist of a collimated beam will produce a new beam waist not at infinity, but at the position of the glass itself.
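The numbers in this example can be reproduced with a short sketch. It assumes the simple approximations for focal spot diameter, 2ω0 = (4λ/π)(F/D), and depth of focus, (8λ/π)(F/D)², where F is the focal length and D the input beam diameter. These are the simple formulas the paragraph warns about for weakly focused systems, so the 2,000 mm result is shown only to illustrate where they break down.

```python
import math

WAVELENGTH = 632.8e-9    # HeNe wavelength in metres (from the text)
BEAM_DIAMETER = 1.0e-3   # input beam diameter D in metres (from the text)

def simple_spot_diameter(focal_length: float,
                         beam_diameter: float = BEAM_DIAMETER,
                         wavelength: float = WAVELENGTH) -> float:
    """Focal spot diameter 2*w0 from the simple (strong-focusing) approximation."""
    return 4.0 * wavelength / math.pi * focal_length / beam_diameter

def simple_depth_of_focus(focal_length: float,
                          beam_diameter: float = BEAM_DIAMETER,
                          wavelength: float = WAVELENGTH) -> float:
    """Depth of focus (distance between the sqrt(2)-radius points), same approximation."""
    return 8.0 * wavelength / math.pi * (focal_length / beam_diameter) ** 2

for f in (10e-3, 100e-3, 2000e-3):
    spot = simple_spot_diameter(f)
    dof = simple_depth_of_focus(f)
    print(f"F = {f * 1e3:6.0f} mm: spot = {spot * 1e6:8.1f} um, "
          f"depth of focus = {dof * 1e3:10.3f} mm")
```

For the 2,000 mm lens the predicted spot exceeds the 1 mm input beam, which is the inconsistency the text points out.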

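Equating these two expressions allows us to find the beam waist diameter in terms of the input beam parameters (with some restrictions that will be discussed later). With F the focal length of the lens and D the diameter illuminated on the lens:

$$2\omega_0 = \frac{4\lambda}{\pi}\,\frac{F}{D}$$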

Full-frame sensors (36mm by 24mm) are so named because they are the same size as a frame of 35mm film. Generally, full-frame sensors are used in high-end professional-grade cameras such as DSLRs and newer mirrorless systems.

Dynamic range is essentially the range of tones that the sensor can capture. In other words, it’s how much detail the sensor can capture at the brightest and darkest ends of the scene (in the highlights and shadows). Higher dynamic range means more detail in the bright and dark areas of the image.

The debate about how many megapixels are needed to take good photos has been raging since digital cameras were invented. There are two things to consider: the number of pixels, and the size of pixels relative to the size of the sensor.

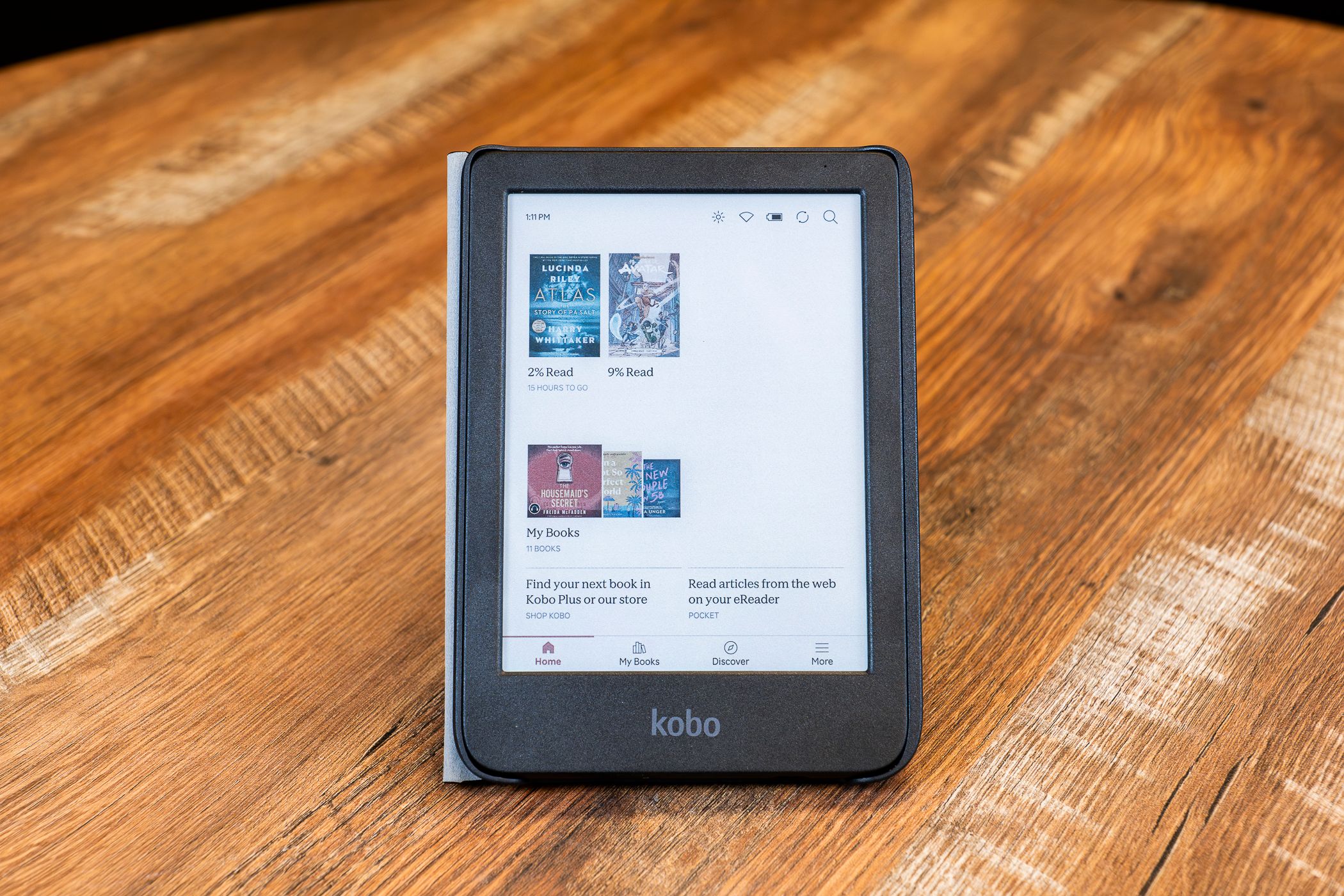

Camera sensor

The bigger a pixel is, the more light that it can absorb. Typically, bigger sensors (like full-frame sensors) have bigger pixels. We’ll discuss what this means for dynamic range and low-light performance below.

We can see from the expression for q that at a beam waist (R = ∞ and ω = ω0), q is pure imaginary and equals ixR. If we know where one beam waist is and its size, we can calculate q there and then use the bilinear ABCD relation to find q anywhere else. To determine the size and wavefront curvature of the beam everywhere in the system, you would use the ABCD values for each element of the system and trace q through them via successive bilinear transformations. But if you only wanted the overall transformation of q, you could multiply the elemental ABCD values in matrix form, just as is done in geometric optics, to find the overall ABCD values for the system, then apply the bilinear transform. For more information about Gaussian beams, see Anthony E. Siegman’s book, Lasers (University Science Books, 1986).
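As a sketch of the procedure just described, the code below traces q through a thin lens and a stretch of free space using the bilinear ABCD relation q' = (Aq + B)/(Cq + D). It assumes the convention 1/q = 1/R − iλ/(πω²), so that q = i·xR at a waist, and it assumes the input waist sits exactly at the lens. All function names are illustrative only.

```python
import math

def rayleigh_range(w0: float, wavelength: float) -> float:
    """Rayleigh range xR = pi * w0^2 / wavelength (all lengths in metres)."""
    return math.pi * w0 ** 2 / wavelength

def thin_lens(f: float):
    """ABCD values of an ideal thin lens of focal length f."""
    return ((1.0, 0.0), (-1.0 / f, 1.0))

def free_space(d: float):
    """ABCD values for propagation over a distance d."""
    return ((1.0, d), (0.0, 1.0))

def apply_abcd(q: complex, abcd) -> complex:
    """Bilinear transformation q' = (A q + B) / (C q + D)."""
    (a, b), (c, d) = abcd
    return (a * q + b) / (c * q + d)

def waist_after_lens(w0_in: float, f: float, wavelength: float):
    """Return (distance from lens to new waist, new waist radius).

    Assumes the input beam waist is located at the lens.
    """
    q_in = complex(0.0, rayleigh_range(w0_in, wavelength))  # q = i*xR at a waist
    q_lens = apply_abcd(q_in, thin_lens(f))
    distance = -q_lens.real            # free space adds d to q; waist is where Re(q) = 0
    q_waist = apply_abcd(q_lens, free_space(distance))
    w0_out = math.sqrt(wavelength * q_waist.imag / math.pi)
    return distance, w0_out

for f in (10e-3, 2.0):
    d, w = waist_after_lens(0.5e-3, f, 632.8e-9)
    print(f"F = {f:6.3f} m: waist at {d:.4f} m from lens, diameter {2 * w * 1e6:.1f} um")
```

With a 1 mm diameter HeNe beam, the short-focal-length case should put the waist essentially at the focal distance, while the 2 m lens should put it well inside the focal length with a waist smaller than the input beam.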

CMOS image sensor

The aspect ratio of a sensor affects the compositions you can achieve without cropping. Full-frame and APS-C sensors have a 3:2 aspect ratio, while micro four-thirds cameras have a 4:3 aspect ratio.

However, full-frame sensors are typically much more expensive and result in a much larger camera; it just isn’t possible to fit a full-frame sensor in a smartphone that’s 8mm thick.

In contrast, CMOS sensors are active-pixel devices. This means that there are electronics at the pixel level which convert the light immediately into well-defined electrical signals. CMOS sensors are currently used in most cameras because they are relatively cheap and consume less energy, which means longer battery life.

Depth of field is one of the major factors influencing image quality, and something that photographers pay a lot of attention to. Depth of field refers to the depth of the zone that appears in focus. A shallow depth of field is what gives photographs appealing out-of-focus foregrounds and backgrounds.

CMOS sensor

In the free space between lenses, mirrors and other optical elements, the position of the beam waist and the waist diameter completely describe the beam. When a beam passes through a lens, mirror, or dielectric interface, the wavefront curvature is changed, resulting in new values of waist position and waist diameter on the output side of the interface.

Propagation of Gaussian beams through an optical system can be treated almost as simply as geometric optics. Because of the unique self-Fourier Transform characteristic of the Gaussian, we do not need an integral to describe the evolution of the intensity profile with distance. The transverse intensity distribution remains Gaussian at every point in the system; only the radius of the Gaussian and the radius of curvature of the wavefront change. Imagine that we somehow create a coherent light beam with a Gaussian distribution and a plane wavefront at a position x = 0. The beam size and wavefront curvature will then vary with x as shown in Figure 2.

There are many variations of small camera sensors (under 8mm by 6mm) which are used in smaller devices like point-and-shoot cameras and smartphones.

Care should be taken in cutting off the beam with a very small aperture. The source distribution would no longer be Gaussian, and the far-field intensity distribution would develop zeros and other non-Gaussian features. However, if the aperture is at least three or four ω0 in diameter, these effects are negligible.

It turns out that we can put these laws in a form as convenient as the ABCD matrices used for geometric ray tracing. But there is a difference: ω(x) and R(x) do not transform in matrix fashion as r and u do for ray tracing; rather, they transform via a complex bilinear transformation:

$$q' = \frac{Aq + B}{Cq + D}, \qquad \frac{1}{q(x)} = \frac{1}{R(x)} - \frac{i\lambda}{\pi\,\omega(x)^2}$$

Camera sensor structure

At large distances from a beam waist, the beam appears to diverge as a spherical wave from a point source located at the center of the waist. Note that “large” distances mean where x ≫ xR and are typically very manageable considering the small area of most laser beams. The diverging beam has a full angular width θ (again, defined by 1/e² points):

$$\theta = \frac{2\lambda}{\pi \omega_0}$$

Active pixel sensor

The parameter ω0, usually called the Gaussian beam radius, is the radius at which the intensity has decreased to 1/e², or 0.135, of its axial (peak) value. Another point to note is the radius of half maximum, or 50% intensity, which is 0.59ω0. At 2ω0, or twice the Gaussian radius, the intensity is 0.0003 of its peak value, usually completely negligible.
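These landmark values follow directly from the Gaussian profile. The sketch below assumes the intensity varies as exp(−2r²/ω0²) relative to its peak and evaluates it at the radii mentioned; the function names are illustrative only.

```python
import math

def relative_intensity(r_over_w0: float) -> float:
    """Intensity relative to the on-axis peak, with r in units of w0."""
    return math.exp(-2.0 * r_over_w0 ** 2)

def half_maximum_radius() -> float:
    """Radius (in units of w0) where the intensity falls to 50% of the peak."""
    return math.sqrt(math.log(2.0) / 2.0)

for ratio in (0.59, 1.0, 2.0):
    print(f"I({ratio:.2f} w0) / I(0) = {relative_intensity(ratio):.4f}")

print(f"Half-maximum radius = {half_maximum_radius():.3f} w0")
```

The 1/e² point, the half-maximum radius of about 0.59ω0, and the negligible intensity at 2ω0 should all match the figures quoted in the paragraph.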

Bigger pixels generally have higher dynamic range, which is a major bonus. They also give the sensor a higher signal-to-noise ratio, so it can capture light more accurately and with less noise, something that’s incredibly important for low-light photography.

CCD sensors are an older type of image-capture technology which were originally used for digital cameras. CCD sensors utilize passive-pixel technology, meaning there are no electronics at the pixel level. Light is absorbed by photodiodes (light-sensitive devices) which accumulate charge that is later converted into electronic signals.

Using these relations, we can make simple calculations for optical systems employing Gaussian beams. For example, suppose that we use a 10 mm focal length lens to focus the collimated output of a helium-neon laser (632.8 nm) that has a 1 mm diameter beam. The diameter of the focal spot will be:

$$2\omega_0 = \frac{4 \times 632.8\ \text{nm}}{\pi} \times \frac{10\ \text{mm}}{1\ \text{mm}} \approx 8\ \mu\text{m}$$

r is defined as the distance from the center of the beam, and ω0 is the radius at which the amplitude is 1/e of its value on the axis.

APS-C, which stands for Advanced Photo System type-C, varies in size depending on the brand. This sensor size is primarily used in enthusiast/hobbyist-grade cameras. Typically, APS-C sensors are around 23mm by 15mm.

The input to the lens is a Gaussian with diameter D and a wavefront radius of curvature which, when modified by the lens, will be R(x) given by the equation above with the lens located at -x from the beam waist at x = 0. That input Gaussian will also have a beam waist position and size associated with it. Thus we can generalize the law of propagation of a Gaussian through even a complicated optical system.

Micro four-thirds sensors have approximately a quarter of the area of full-frame sensors, at 17.3mm by 13mm. These lesser-known sensors are mainly used by Olympus and Panasonic.
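The size comparison can be made concrete with a small sketch using the dimensions quoted in this article (36mm by 24mm, roughly 23mm by 15mm, and 17.3mm by 13mm). The "crop factor" computed here is the ratio of sensor diagonals, a common convention that the article itself does not introduce, and the APS-C figures are only typical values.

```python
import math

# Sensor dimensions in millimetres, as quoted in the article.
SENSOR_SIZES_MM = {
    "full frame": (36.0, 24.0),
    "APS-C": (23.0, 15.0),           # typical value; varies by brand
    "micro four-thirds": (17.3, 13.0),
}

def sensor_area_mm2(name: str) -> float:
    """Sensor area in square millimetres."""
    width, height = SENSOR_SIZES_MM[name]
    return width * height

def sensor_diagonal_mm(name: str) -> float:
    """Sensor diagonal in millimetres."""
    width, height = SENSOR_SIZES_MM[name]
    return math.hypot(width, height)

def relative_to_full_frame(name: str):
    """Return (area as a fraction of full frame, crop factor by diagonal)."""
    area_fraction = sensor_area_mm2(name) / sensor_area_mm2("full frame")
    crop_factor = sensor_diagonal_mm("full frame") / sensor_diagonal_mm(name)
    return area_fraction, crop_factor

for sensor in SENSOR_SIZES_MM:
    fraction, crop = relative_to_full_frame(sensor)
    print(f"{sensor:18s} area = {fraction:.2f} x full frame, crop factor = {crop:.2f}")
```

With these dimensions, micro four-thirds comes out at roughly a quarter of the full-frame area, consistent with the description above.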

The two things to consider when deciding on a sensor are what type of sensor it is and what size it is. The actual size of the sensor is what typically impacts the image quality the most.

Medium and large format sensors are some of the largest sensors available and are mainly used in very high-end and expensive cameras. These come in a range of sizes larger than the full-frame sensor size.

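We can also find the depth of focus from the formulas above. If we define the depth of focus (somewhat arbitrarily) as the distance between the values of x where the beam is √2 times larger than it is at the beam waist, then using the equation for ω(x) we can determine the depth of focus, where F is the focal length of the lens and D is the beam diameter at the lens:

$$\mathrm{DOF} = \frac{8\lambda}{\pi}\left(\frac{F}{D}\right)^2$$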

Larger sensors indirectly result in a shallower depth of field, producing nicer out-of-focus backgrounds. But this isn’t technically because of the sensor size. Instead, it’s because a photographer with a full-frame sensor will have to either move closer to the subject or use a longer focal length lens to get the same framing, two things that directly affect the depth of field.

This relationship is much more than a mathematical curiosity, since it is now easy to find a light source with a Gaussian intensity distribution: the laser. Most lasers automatically oscillate with a Gaussian distribution of the electric field. The basic Gaussian may also take on some particular polynomial multipliers and still remain its own transform. These field distributions are known as higher-order transverse modes and are usually avoided by design in most practical lasers.

Fortunately, simple approximations for spot size and depth of focus can still be used in most optical systems to select pinhole diameters, couple light into fibers, or compute laser intensities. Only when f-numbers are large should the full Gaussian equations be needed.

The Fourier Transform of this equation is also a Gaussian distribution. If we were to solve the Fresnel integral itself rather than the Fraunhofer approximation, we would find that a Gaussian source distribution remains Gaussian at every point along its path of propagation through the optical system. This makes it particularly easy to visualize the distribution of the fields at any point in the optical system. The intensity is also Gaussian:

$$I(r) = I(0)\,\exp\!\left(-\frac{2r^2}{\omega_0^2}\right)$$

There are multiple facets to image quality. Some of these are subjective, but most are technical in nature. These include aspect ratios, pixel count, pixel size, dynamic range, noise, depth of field, and low-light performance.

These equations, with input values for ω and R, allow the tracing of a Gaussian beam through any optical system with some restrictions: optical surfaces need to be spherical and with not-too-short focal lengths, so that beams do not change diameter too fast. These are exactly the analog of the paraxial restrictions used to simplify geometric optical propagation.

The two most common types of digital camera sensors are the charge-coupled device (CCD) and the CMOS sensor (complementary metal-oxide-semiconductor).

Bigger sensors generally have many advantages over smaller sensors. They generally have more pixels, bigger pixels, better resolution, better low-light performance, higher dynamic range, and a shallower depth of field. There’s a reason that they’re favored by professional photographers.

Note that these equations are also valid for negative values of x. We only imagined that the source of the beam was at x = 0; we could have created the same beam by creating a larger Gaussian beam with a negative wavefront curvature at some x < 0. This we can easily do with a lens, as shown in Figure 3.

The beam size will increase, slowly at first, then faster, eventually increasing proportionally to x. The wavefront radius of curvature, which was infinite at x = 0, will become finite and initially decrease with x. At some point it will reach a minimum value, then increase with larger x, eventually becoming proportional to x. The equations describing the Gaussian beam radius ω(x) and wavefront radius of curvature R(x) are:

$$\omega(x) = \omega_0\sqrt{1 + \left(\frac{\lambda x}{\pi\omega_0^2}\right)^2}, \qquad R(x) = x\left[1 + \left(\frac{\pi\omega_0^2}{\lambda x}\right)^2\right]$$
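The behavior described here can be explored with a minimal sketch of the standard free-space expressions, ω(x) = ω0·√(1 + (x/xR)²) and R(x) = x·(1 + (xR/x)²), with xR = πω0²/λ. The 0.5 mm waist radius and HeNe wavelength below are example values, and the function names are illustrative only.

```python
import math

def rayleigh_range(w0: float, wavelength: float) -> float:
    """Rayleigh range xR = pi * w0^2 / wavelength (all lengths in metres)."""
    return math.pi * w0 ** 2 / wavelength

def beam_radius(x: float, w0: float, wavelength: float) -> float:
    """Gaussian beam radius at distance x from the waist."""
    x_r = rayleigh_range(w0, wavelength)
    return w0 * math.sqrt(1.0 + (x / x_r) ** 2)

def wavefront_radius(x: float, w0: float, wavelength: float) -> float:
    """Wavefront radius of curvature at distance x; infinite (plane) at the waist."""
    if x == 0.0:
        return math.inf
    x_r = rayleigh_range(w0, wavelength)
    return x * (1.0 + (x_r / x) ** 2)

W0 = 0.5e-3            # example waist radius in metres
WAVELENGTH = 632.8e-9  # HeNe wavelength in metres
X_R = rayleigh_range(W0, WAVELENGTH)

for multiple in (0.0, 0.5, 1.0, 2.0, 10.0):
    x = multiple * X_R
    print(f"x = {multiple:4.1f} xR: w = {beam_radius(x, W0, WAVELENGTH) * 1e3:.3f} mm, "
          f"R = {wavefront_radius(x, W0, WAVELENGTH):.3f} m")
```

The output should show the radius of curvature reaching its minimum, 2xR, at x = xR, where the beam radius is √2·ω0, and both quantities growing in proportion to x far from the waist.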
