Selecting the appropriate field of view for security cameras is essential for an effective surveillance system. So why is the field of view so crucial for security camera selection? This part may give you the answer.

The heart of any camera is the sensor; modern sensors are solid-state electronic devices containing up to millions of discrete photodetector sites called pixels. Although there are many camera manufacturers, the majority of sensors are produced by only a handful of companies. Still, two cameras with the same sensor can have very different performance and properties due to the design of the interface electronics. In the past, cameras used phototubes such as Vidicons and Plumbicons as image sensors. Though they are no longer used, their mark on nomenclature associated with sensor size and format remains to this day. Today, almost all sensors in machine vision fall into one of two categories: Charge-Coupled Device (CCD) and Complementary Metal Oxide Semiconductor (CMOS) imagers.

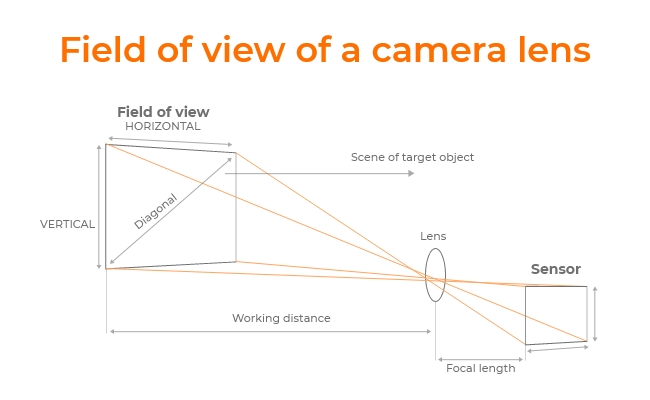

The field of view of a camera or optical instrument depends on the focal length of the lens or eyepiece, the sensor size (for digital cameras), and the distance between the lens or eyepiece and the object of interest. Here is a simplified formula:

FOV = (Sensor dimension / Focal length) × 57.3

CCD and CMOS sensors are sensitive to wavelengths from approximately 350 - 1050nm, although the range is usually given from 400 - 1000nm. This sensitivity is indicated by the sensor’s spectral response curve (Figure 8). Most high-quality cameras provide an infrared (IR) cut-off filter for imaging specifically in the visible spectrum. These filters are sometimes removable for near-IR imaging.

The most basic component of a camera system is the sensor. The type of technology and its features contribute greatly to the overall image quality, so knowing how to interpret camera sensor specifications will ultimately lead to choosing the best imaging optics to pair with it. To learn more about imaging electronics, view our additional imaging electronics 101 series pertaining to camera resolution, camera types, and camera settings.

At even longer wavelengths than SWIR, thermal imaging becomes dominant. For this, a microbolometer array is used for its sensitivity in the 7 - 14μm wavelength range. In a microbolometer array, each pixel has a bolometer which has a resistance that changes with temperature. This resistance change is read out by conversion to a voltage by electronics in the substrate (Figure 3). These sensors do not require active cooling, unlike many infrared imagers, making them quite useful.

For video games and virtual reality (VR) experience, the field of view is the extent of the in-game environment that can be seen on the screen. Some of these parameters can be adjusted to affect the perception of speed, realism, and the player's spatial awareness. A wider FOV can give a more immersive feeling but might require more computing power to render.

Imaging electronics, in addition to imaging optics, play a significant role in the performance of an imaging system. Proper integration of all components, including camera, capture board, software, and cables results in optimal system performance. Before delving into any additional topics, it is important to understand the camera sensor and key concepts and terminology associated with it.

Visual experiences play significant roles in daily life thanks to the rapid development of different digital devices. Whether through the lens of a camera, the eye of a microscope, or the immersive screens of our favorite video games, the concept of "Field of View (FoV)" dramatically impacts how people see the world. This article will help you understand the FOV meaning, its practical applications, and how it can significantly impact the performance and coverage of your security cameras.

One way to increase the readout speed of a camera sensor is to use multiple taps on the sensor. This means that instead of all pixels being read out sequentially through a single output amplifier and ADC, the field is split and read to multiple outputs. This is commonly seen as a dual tap where the left and right halves of the field are read out separately. This effectively doubles the frame rate, and allows the image to be reconstructed easily by software. It is important to note that if the gain is not the same between the sensor taps, or if the ADCs have slightly different performance, as is usually the case, then a visible division appears between the halves of the reconstructed image. The good news is that this can be calibrated out. Many large sensors with more than a few million pixels use multiple sensor taps. This, for the most part, only applies to progressive scan digital cameras; otherwise, there will be display difficulties. The performance of a multiple tap sensor depends largely on the implementation of the internal camera hardware.
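The gain mismatch between taps can be illustrated with a minimal sketch. It assumes a dual-tap sensor split into left and right halves and a calibration frame of a uniformly lit target; the function names and the flat-field approach are illustrative assumptions, not a description of how any particular camera performs this calibration.

```python
def estimate_right_tap_gain(flat_field):
    """Estimate the factor that brings the right tap to the left tap's level.

    flat_field is a list of rows (lists of pixel values) captured from a
    uniformly illuminated target, so both halves should read the same.
    """
    half = len(flat_field[0]) // 2
    left = [v for row in flat_field for v in row[:half]]
    right = [v for row in flat_field for v in row[half:]]
    return (sum(left) / len(left)) / (sum(right) / len(right))


def apply_right_tap_gain(image, gain):
    """Return a copy of image with the right half multiplied by gain."""
    half = len(image[0]) // 2
    return [row[:half] + [v * gain for v in row[half:]] for row in image]


# Hypothetical data: the right tap reads 10% higher than the left tap.
flat = [[100, 100, 110, 110], [100, 100, 110, 110]]
frame = [[50, 50, 55, 55]]
gain = estimate_right_tap_gain(flat)
corrected = apply_right_tap_gain(frame, gain)
print(corrected)
```

A single multiplicative factor is the simplest possible model; real tap mismatch can also include an offset and nonlinearity, which this sketch ignores.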

The complementary metal oxide semiconductor (CMOS) was invented in 1963 by Frank Wanlass. However, he did not receive a patent for it until 1967, and it did not become widely used for imaging applications until the 1990s. In a CMOS sensor, the charge from the photosensitive pixel is converted to a voltage at the pixel site and the signal is multiplexed by row and column to multiple on-chip analog-to-digital converters (ADCs). Inherent to its design, CMOS is a digital device. Each site is essentially a photodiode and three transistors, performing the functions of resetting or activating the pixel, amplification and charge conversion, and selection or multiplexing (Figure 2). This leads to the high speed of CMOS sensors, but also low sensitivity as well as high fixed-pattern noise due to fabrication inconsistencies in the multiple charge-to-voltage conversion circuits.

The multilayer MOS fabrication process of a CMOS sensor does not allow for the use of microlenses on the chip, thereby decreasing the effective collection efficiency or fill factor of the sensor in comparison with a CCD equivalent. This low efficiency combined with pixel-to-pixel inconsistency contributes to a lower signal-to-noise ratio and lower overall image quality than CCD sensors. Refer to Table 1 for a general comparison of CCD and CMOS sensors.

In photography and videography, the field of view is the angular extent of the observable scene that a lens or a camera can capture. It's often expressed in degrees or radians. A wider field of view means that more of the scene is visible, which is useful for capturing landscapes or large groups of people. A narrower field of view is often used for telephoto shots or when photographers or video directors want to focus on a smaller subject.

The charge-coupled device (CCD) was invented in 1969 by scientists at Bell Labs in New Jersey, USA. For years, it was the prevalent technology for capturing images, from digital astrophotography to machine vision inspection. The CCD sensor is a silicon chip that contains an array of photosensitive sites (Figure 1). The term charge-coupled device actually refers to the method by which charge packets are moved around on the chip from the photosites to readout, a shift register, akin to the notion of a bucket brigade. Clock pulses create potential wells to move charge packets around on the chip, before being converted to a voltage by a capacitor. The CCD sensor is itself an analog device, but the output is immediately converted to a digital signal by means of an analog-to-digital converter (ADC) in digital cameras, either on or off chip. In analog cameras, the voltage from each site is read out in a particular sequence, with synchronization pulses added at some point in the signal chain for reconstruction of the image.

In contrast to global and rolling shutters, an asynchronous shutter refers to the triggered exposure of the pixels. That is, the camera is ready to acquire an image, but it does not enable the pixels until after receiving an external triggering signal. This is opposed to a normal constant frame rate, which can be thought of as internal triggering of the shutter.

One issue that often arises in imaging applications is the ability of an imaging lens to support certain sensor sizes. If the sensor is too large for the lens design, the resulting image may appear to fade away and degrade towards the edges because of vignetting (extinction of rays which pass through the outer edges of the imaging lens). This is commonly referred to as the tunnel effect, since the edges of the field become dark. Smaller sensor sizes do not yield this vignetting issue.

In microscopy, the field of view refers to the area visible through the microscope's eyepiece or on a digital display. The field of view can vary depending on the objective lens used, with higher magnification objectives typically having a smaller field of view.

CMOS sensors are, in general, more sensitive to IR wavelengths than CCD sensors. This results from their increased active area depth. The penetration depth of a photon depends on its frequency, so deeper penetration for a given active area thickness produces fewer photoelectrons and decreases quantum efficiency.

If you've chosen a particular field of view, you can place the device strategically to optimize its effectiveness. Here are some placement tips you can follow.

Several factors can affect the optical instrument's field of view. Depending on various factors, users can strategically choose the correct FOV for different applications.

To compensate for the low well depth in the CCD, microlenses are used to increase the fill factor, or effective photosensitive area, offsetting the space on the chip taken up by the charge-coupled shift registers. This improves the efficiency of the pixels, but increases the angular sensitivity for incoming light rays, requiring that they hit the sensor near normal incidence for efficient collection.

Astronomy enthusiasts and scientists often use telescopes or binoculars to observe the infinite sky. For astronomical devices, the field of view describes the area of the sky that can be observed through a telescope or binoculars. It is often measured in degrees, and a larger field of view allows for a broader view of celestial objects. A narrower field of view helps focus on specific astronomical objects.

Editor from Reolink. Interested in new technology trends and willing to share tips about home security. Her goal is to make security cameras and smart home systems easy to understand for everyone.

The size of the image sensor directly affects how the camera captures and records the light coming through the lens, which in turn impacts the FOV. Larger image sensors collect more light than smaller sensors. For a given focal length, smaller sensors tend to result in a narrower FOV and can make the image appear magnified, while larger sensors provide a wider FOV.

The term "field of view" can be found in different scenarios. This parameter has become a fundamental element for enhanced visual experience, from photography and videography to surveillance and immersive technologies. With the knowledge gained from this article, we truly hope you can harness the power of the field of view in the future!

The multiplexing configuration of a CMOS sensor is often coupled with an electronic rolling shutter, although with additional transistors at the pixel site, a global shutter can be accomplished wherein all pixels are exposed simultaneously and then read out sequentially. An additional advantage of a CMOS sensor is its low power consumption and dissipation compared to an equivalent CCD sensor, due to less flow of charge, or current. Also, the CMOS sensor’s ability to handle high light levels without blooming allows for its use in special high dynamic range cameras, even capable of imaging welding seams or light filaments. CMOS cameras also tend to be smaller than their digital CCD counterparts, as digital CCD cameras require additional off-chip ADC circuitry.

The sensor aspect ratio is the sensor's width-to-height ratio. It can affect the field of view (FOV) and the composition of an image by determining how much of the scene is captured horizontally and vertically, especially when using lenses designed for a specific aspect ratio. A camera with a 3:2 or 16:9 aspect ratio captures a slightly wider horizontal FOV than one with a 4:3 aspect ratio.

The field of view (FOV) refers to the extent of the observable world or scene that can be seen at a given moment through a particular device, such as a camera, microscope, binoculars, or the human eye. It defines the area or angle visible within the observation frame. In the case of optical devices, FoV is the maximum area that the device can capture. The broader the FOV, the more people can see, whether they're looking through a camera lens or at a screen.

In digital cameras, pixels are typically square. Common pixel sizes are between 3 - 10μm. Although sensors are often specified simply by the number of pixels, the size is very important to imaging optics. Large pixels have, in general, high charge saturation capacities and high signal-to-noise ratios (SNRs). With small pixels, it becomes fairly easy to achieve high resolution for a fixed sensor size and magnification, although issues such as blooming become more severe and pixel crosstalk lowers the contrast at high spatial frequencies. A simple measure of sensor resolution is the number of pixels per millimeter.
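As a small worked example of the "pixels per millimeter" measure, the sketch below converts a pixel size in micrometers into a linear pixel density. The function name is illustrative, and the pixel sizes are taken from the 3 - 10μm range quoted above.

```python
def pixels_per_mm(pixel_size_um):
    """Linear pixel density for square pixels of the given size in micrometers."""
    return 1000.0 / pixel_size_um


# Pixel sizes spanning the common range mentioned in the text.
for size_um in (3.0, 5.0, 10.0):
    print(f"{size_um} um pixels -> {pixels_per_mm(size_um):.1f} pixels/mm")
```

Halving the pixel size doubles the pixels per millimeter, which is why small pixels reach high resolution on a fixed sensor size.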

The frame rate refers to the number of full frames (which may consist of two fields) composed in a second. For example, an analog camera with a frame rate of 30 frames/second contains two 1/60 second fields. In high-speed applications, it is beneficial to choose a faster frame rate to acquire more images of the object as it moves through the FOV.

The focal length of a camera lens can affect the field of view because it determines how light rays are refracted and focused onto the image sensor (or film plane) within the camera. When light rays pass through a lens, their paths are bent due to their curved shape. These rays can either converge or diverge. The extent to which they converge or diverge depends on the curvature of the lens.

Analog CCD cameras have rectangular pixels (larger in the vertical dimension). This is a result of a limited number of scanning lines in the signal standards (525 lines for NTSC, 625 lines for PAL) due to bandwidth limitations. Asymmetrical pixels yield higher horizontal resolution than vertical. Analog CCD cameras (with the same signal standard) usually have the same vertical resolution. For this reason, the imaging industry standard is to specify resolution in terms of horizontal resolution.

The main difference between the field of view and depth of field is that the field of view is about the extent of what is seen, while DoF is about the range of distances at which objects appear in focus within that scene.

Until a few years ago, CCD cameras used electronic or global shutters, and all CMOS cameras were restricted to rolling shutters. A global shutter is analogous to a mechanical shutter, in that all pixels are exposed and sampled simultaneously, with the readout then occurring sequentially; the photon acquisition starts and stops at the same time for all pixels. On the other hand, a rolling shutter exposes, samples, and reads out sequentially; it implies that each line of the image is sampled at a slightly different time. Intuitively, images of moving objects are distorted by a rolling shutter; this effect can be minimized with a triggered strobe placed at the point in time where the integration period of the lines overlaps. Note that this is not an issue at low speeds. Implementing global shutter for CMOS requires a more complicated architecture than the standard rolling shutter model, with an additional transistor and storage capacitor, which also allows for pipelining, or beginning exposure of the next frame during the readout of the previous frame. Since the availability of CMOS sensors with global shutters is steadily growing, both CCD and CMOS cameras are useful in high-speed motion applications.
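The distortion from a rolling shutter can be estimated with a short sketch. It assumes each line is sampled a fixed line time after the previous one and that the object moves horizontally at a constant speed in the image; the function name and the numbers are hypothetical.

```python
def rolling_shutter_shift_px(row, line_time_s, speed_px_per_s):
    """Horizontal displacement of a moving object at a given row.

    Row 0 is sampled first; row n is sampled n * line_time_s later, by which
    time the object has moved speed_px_per_s * n * line_time_s pixels.
    """
    return speed_px_per_s * row * line_time_s


# Hypothetical sensor: 1000 rows, 20 microseconds per line,
# object moving at 5000 pixels per second across the image.
top = rolling_shutter_shift_px(0, 20e-6, 5000)
bottom = rolling_shutter_shift_px(999, 20e-6, 5000)
print(f"skew between first and last row: {bottom - top:.1f} px")
```

With a global shutter every row is sampled at the same instant, so the equivalent shift is zero. At low object speeds the rolling-shutter skew shrinks in proportion, consistent with the note above that it is not an issue at low speeds.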

Short-wave infrared (SWIR) is an emerging technology in imaging. It is typically defined as light in the 0.9 – 1.7μm wavelength range, but can also be classified from 0.7 – 2.5μm. Using SWIR wavelengths allows for the imaging of density variations, as well as through obstructions such as fog. However, normal CCD and CMOS imagers are not sensitive enough in the infrared to be useful. As such, special indium gallium arsenide (InGaAs) sensors are used. The InGaAs material has a band gap, or energy gap, that makes it useful for generating a photocurrent from infrared energy. These sensors use an array of InGaAs photodiodes, generally in the CMOS sensor architecture. For visible and SWIR comparison images, view What is SWIR?.

The depth of field refers to the range of distances within a scene that appears acceptably sharp in an image. It is about the zone of focus where objects near and far from the camera are rendered in sharp detail. In simple terms, DoF is all about what is in focus within the image. DoF is not measured in degrees but is typically quantified in terms of near and far distances from the camera (e.g., feet or meters).

When light from an image falls on a camera sensor, it is collected by a matrix of small potential wells called pixels. The image is divided into these small discrete pixels. The information from these photosites is collected, organized, and transferred to a monitor to be displayed. The pixels may be photodiodes or photocapacitors, for example, which generate a charge proportional to the amount of light incident on that discrete place of the sensor, spatially restricting and storing it. The ability of a pixel to convert an incident photon to charge is specified by its quantum efficiency. For example, if for ten incident photons, four photo-electrons are produced, then the quantum efficiency is 40%. Typical values of quantum efficiency for solid-state imagers are in the range of 30 - 60%. The quantum efficiency depends on wavelength and is not necessarily uniform over the response to light intensity. Spectral response curves often specify the quantum efficiency as a function of wavelength. For more information, see the section of this application note on Spectral Properties.
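The quantum efficiency example above amounts to a simple ratio, sketched here with an illustrative function name.

```python
def quantum_efficiency(photoelectrons, incident_photons):
    """Fraction of incident photons converted into photo-electrons."""
    if incident_photons <= 0:
        raise ValueError("incident_photons must be positive")
    return photoelectrons / incident_photons


# The example from the text: ten incident photons yield four photo-electrons.
qe = quantum_efficiency(4, 10)
print(f"{qe:.0%}")  # prints 40%
```

Because quantum efficiency varies with wavelength, a single number like this only describes one point on the spectral response curve.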

FOV: the field of view of an optical instrument in degrees. Sensor dimension: the size of the digital camera image sensor, typically measured in millimeters. Focal length: the distance from the lens to the point where parallel rays of light converge when they pass through the lens. 57.3: used to convert radians to degrees.
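These definitions suggest a small-angle formula of the form FOV ≈ (sensor dimension / focal length) × 57.3. The sketch below assumes that form and compares it with the standard thin-lens expression 2·atan(d / 2f) for a lens focused at infinity. The function names and the 6.4 mm sensor width are illustrative assumptions.

```python
import math

RAD_TO_DEG = 57.3  # approximate degrees per radian, as quoted in the text


def fov_simplified_deg(sensor_dim_mm, focal_length_mm):
    """Small-angle estimate: (sensor dimension / focal length) * 57.3."""
    return sensor_dim_mm / focal_length_mm * RAD_TO_DEG


def fov_exact_deg(sensor_dim_mm, focal_length_mm):
    """Angular FOV of an ideal thin lens focused at infinity."""
    return math.degrees(2 * math.atan(sensor_dim_mm / (2 * focal_length_mm)))


# Hypothetical 6.4 mm wide sensor behind a wide-angle and a longer lens.
for focal_mm in (4.0, 25.0):
    approx = fov_simplified_deg(6.4, focal_mm)
    exact = fov_exact_deg(6.4, focal_mm)
    print(f"f = {focal_mm} mm: simplified {approx:.1f} deg, exact {exact:.1f} deg")
```

The two results agree closely for long focal lengths, while the simplified formula overestimates the FOV for wide-angle lenses, where the small-angle approximation no longer holds.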

The field of view of a security camera refers to the area or angle that the camera can capture and monitor. It is measured in degrees vertically and horizontally. The wider the field of view, the larger the area the camera can capture.

The charge packets are limited to the speed at which they can be transferred, so the charge transfer is responsible for the main CCD drawback of speed, but also leads to the high sensitivity and pixel-to-pixel consistency of the CCD. Since each charge packet sees the same voltage conversion, the CCD is very uniform across its photosensitive sites. The charge transfer also leads to the phenomenon of blooming, wherein charge from one photosensitive site spills over to neighboring sites due to a finite well depth or charge capacity, placing an upper limit on the useful dynamic range of the sensor. This phenomenon manifests itself as the smearing out of bright spots in images from CCD cameras.

Unlike analog cameras where, in most cases, the frame rate is dictated by the display, digital cameras allow for adjustable frame rates. The maximum frame rate for a system depends on the sensor readout speed, the data transfer rate of the interface including cabling, and the number of pixels (amount of data transferred per frame). In some cases, a camera may be run at a higher frame rate by reducing the resolution by binning pixels together or restricting the area of interest. This reduces the amount of data per frame, allowing for more frames to be transferred for a fixed transfer rate. To a good approximation, the exposure time is the inverse of the frame rate. However, there is a finite minimum time between exposures (on the order of hundreds of microseconds) due to the process of resetting pixels and reading out, although many cameras have the ability to read out a frame while exposing the next one (pipelining); this minimum time can often be found on the camera datasheet. For additional information on binning pixels and area of interest, view Imaging Electronics 101: Basics of Digital Camera Settings for Improved Imaging Results.
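The trade-off between data per frame and frame rate can be sketched as follows. This is a rough upper bound that considers only the interface transfer rate; it ignores the sensor readout limit and any protocol overhead, and the function name and example figures are hypothetical.

```python
def max_frame_rate(width_px, height_px, bits_per_pixel,
                   link_rate_bits_per_s, binning=1):
    """Upper bound on frames per second set by the transfer rate alone.

    binning=2 models 2x2 binning, which quarters the pixel count per frame.
    """
    pixels = (width_px // binning) * (height_px // binning)
    return link_rate_bits_per_s / (pixels * bits_per_pixel)


# Hypothetical camera: 1280 x 1024 pixels, 8 bits per pixel, 1 Gbit/s link.
full = max_frame_rate(1280, 1024, 8, 1e9)
binned = max_frame_rate(1280, 1024, 8, 1e9, binning=2)
print(f"full resolution: {full:.1f} fps, 2x2 binned: {binned:.1f} fps")
```

Restricting the area of interest has the same effect as passing a smaller width and height: less data per frame, so more frames fit in the same transfer rate.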

The term "Field of View (FOV)" can be found in many optical devices and used in multiple scenarios. Here are some practical applications of this concept.

Lenses with short focal lengths have more curved shapes and cause light rays to converge more quickly. As a result, they bring distant objects into focus and capture a wider area within the camera's FOV. In contrast, lenses with long focal lengths have flatter or less curved shapes and cause light rays to converge more slowly. As a result, long focal length lenses capture a narrower FOV.

Furthermore, some security cameras are designed with a wide-angle lens and have a broader FOV suitable for monitoring large areas, like parking lots or open spaces. Others have narrower lenses that are ideal for focusing on specific targets or areas in greater detail.

The distance to the subject can affect the perceived field of view (FOV), but it doesn't directly alter the physical FOV of the camera or lens. Instead, it influences the composition and perspective of the image. If you move closer to the subject, you may need to use a wider-angle lens to encompass the subject within the frame, making the FOV appear wider. Conversely, if you move farther from the subject, you might need to use a longer focal length lens (telephoto) to frame the subject as desired, making the FOV appear narrower.

Tilting the camera up or down (pitch) and panning it left or right (yaw) also affects the FOV. Tilted upward, the FOV may emphasize the sky or tall objects, while tilting downward highlights the ground or low objects.

CMOS cameras have the potential for higher frame rates, as the process of reading out each pixel can be done more quickly than with the charge transfer in a CCD sensor’s shift register. For digital cameras, exposures can be made from tens of seconds to minutes, although the longest exposures are only possible with CCD cameras, which have lower dark currents and noise compared to CMOS. The noise intrinsic to CMOS imagers restricts their useful exposure to only seconds.

The maximum human field of vision, also known as the human visual field, is approximately 200 degrees horizontally and about 135 degrees vertically. This field of vision can vary slightly from person to person due to factors like genetics and individual differences in eye anatomy.

The solid state sensor is based on a photoelectric effect and, as a result, cannot distinguish between colors. There are two types of color CCD cameras: single chip and three-chip. Single chip color CCD cameras offer a common, low-cost imaging solution and use a mosaic (e.g. Bayer) optical filter to separate incoming light into a series of colors. Each color is, then, directed to a different set of pixels (Figure 9a). The precise layout of the mosaic pattern varies between manufacturers. Since more pixels are required to recognize color, single chip color cameras inherently have lower resolution than their monochrome counterparts; the extent of this issue is dependent upon the manufacturer-specific color interpolation algorithm.
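To see why a single-chip color sensor has lower resolution per color, the sketch below maps pixel coordinates to filter colors. It assumes an RGGB Bayer layout, which is one common arrangement; as noted above, the precise layout varies between manufacturers, and the function name is illustrative.

```python
from collections import Counter


def bayer_color(row, col):
    """Filter color over a pixel in an assumed RGGB Bayer mosaic."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


# Count how many pixels of a small 4 x 4 patch sample each color.
counts = Counter(bayer_color(r, c) for r in range(4) for c in range(4))
print(dict(counts))
```

Only a quarter of the pixels sample red and a quarter sample blue, so the missing values at every pixel have to be filled in by the color interpolation algorithm.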

The size of a camera sensor's active area is important in determining the system's field of view (FOV). Given a fixed primary magnification (determined by the imaging lens), larger sensors yield greater FOVs. There are several standard area-scan sensor sizes: ¼", 1/3", ½", 1/1.8", 2/3", 1" and 1.2", with larger available (Figure 5). The nomenclature of these standards dates back to the Vidicon vacuum tubes used for television broadcast imagers, so it is important to note that the actual dimensions of the sensors differ. Note: There is no direct connection between a sensor's size designation and its actual dimensions; the designation is purely a legacy convention. However, most of these standards maintain a 4:3 (Horizontal: Vertical) dimensional aspect ratio.
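The relationship between sensor size, primary magnification, and FOV can be sketched as object-space FOV = sensor dimension / primary magnification. The sensor dimensions below are commonly quoted nominal values for three of the formats and are included as assumptions, not taken from the text; the function name is illustrative.

```python
# Commonly quoted nominal active areas (width, height) in millimeters.
# These are assumptions for illustration; check the sensor datasheet.
NOMINAL_SENSOR_MM = {
    '1/3"': (4.8, 3.6),
    '1/2"': (6.4, 4.8),
    '2/3"': (8.8, 6.6),
}


def object_fov_mm(sensor_dim_mm, primary_magnification):
    """Object-space field of view covered by one sensor dimension."""
    return sensor_dim_mm / primary_magnification


# Hypothetical lens with a primary magnification of 0.5X.
for name, (width, height) in NOMINAL_SENSOR_MM.items():
    h_fov = object_fov_mm(width, 0.5)
    v_fov = object_fov_mm(height, 0.5)
    print(f"{name}: {h_fov:.1f} mm x {v_fov:.1f} mm")
```

At the same magnification, the 2/3" format sees a wider area than the 1/3" format, and each of these nominal sizes keeps the 4:3 aspect ratio mentioned above.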

The camera orientation can also influence the field of view. When you change the camera's direction from landscape (horizontal) to portrait (vertical) or vice versa, it alters the FOV and the image's composition. In landscape orientation, the FOV is typically broader and suitable for capturing wide scenes. In portrait orientation, the FOV is narrower, which can help focus on a taller subject or emphasize vertical elements in the frame.

The shutter speed corresponds to the exposure time of the sensor. The exposure time controls the amount of incident light. Camera blooming (caused by over-exposure) can be controlled by decreasing illumination, or by increasing the shutter speed. Increasing the shutter speed can help in creating snapshots of a dynamic object which may only be sampled 30 times per second (live video).

The field of view determines the area or angle that the camera can capture and monitor. The specific FOV of security cameras can vary from one model to another. Some security cameras may have fixed lenses, which provide a constant FOV, while varifocal cameras can zoom in or out to change the FOV according to the user's needs.

First, determine what you intend to capture with the camera. Different purposes may require a different FOV. For example, if you are looking for a security camera, you need to map out the places you want to monitor and choose between a wide FOV (larger space) or a narrow FOV (rich details).

Three-chip color CCD cameras are designed to solve this resolution problem by using a prism to direct each section of the incident spectrum to a different chip (Figure 9b). More accurate color reproduction is possible, as each point in space of the object has separate RGB intensity values, rather than using an algorithm to determine the color. Three-chip cameras offer extremely high resolutions but have lower light sensitivities and can be costly. In general, special 3CCD lenses are required that are well corrected for color and compensate for the altered optical path and, in the case of C-mount, reduced clearance for the rear lens protrusion. In the end, the choice of single chip or three-chip comes down to application requirements.

After reading this, do you understand the FOV meaning? Is it important? Let us know your thoughts in the comment section below, and share this article with your family and friends if you find it useful!

Lens aperture doesn't directly affect the field of view, but it will change the composition of different images. The lens aperture significantly affects the depth of field, which is the range of distances in the scene that appears acceptably sharp in the picture. A wider aperture (e.g., a lower f-number like f/1.8) will result in a shallower depth of field. A shallow depth of field usually puts more of the background and foreground out of focus. The image can then appear to have a narrower FOV, even though the FOV itself remains the same.
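The effect of aperture on depth of field can be illustrated with the standard hyperfocal-distance approximation. This is a sketch under stated assumptions: a simple thin-lens model, a circle of confusion of 0.03 mm (a value often used for full-frame sensors), and hypothetical lens and subject values. None of these numbers come from the text.

```python
def hyperfocal_mm(focal_mm, f_number, coc_mm):
    """Hyperfocal distance for a given focal length, f-number, and circle of confusion."""
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm


def dof_limits_mm(focal_mm, f_number, subject_mm, coc_mm=0.03):
    """Near and far limits of acceptable sharpness, in millimeters."""
    h = hyperfocal_mm(focal_mm, f_number, coc_mm)
    near = subject_mm * (h - focal_mm) / (h + subject_mm - 2 * focal_mm)
    if subject_mm >= h:
        return near, float("inf")  # everything beyond the near limit is sharp
    far = subject_mm * (h - focal_mm) / (h - subject_mm)
    return near, far


# Hypothetical 50 mm lens focused on a subject 3 m away.
for n in (1.8, 8.0):
    near, far = dof_limits_mm(50.0, n, 3000.0)
    print(f"f/{n}: sharp from {near:.0f} mm to {far:.0f} mm")
```

The wider aperture gives a much thinner zone of sharpness around the subject, while the angular field of view is identical in both cases because the focal length and sensor are unchanged.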
