Macro Lenses for the Sony Alpha 6700 - Macro Lens E-Mount

The following image shows the positioning of each component. Each component mentioned in the image is discussed in detail below.

Ethernet is a Local Area Network (LAN) technology that was standardized in 1983 by the Institute of Electrical and Electronics Engineers (IEEE) as the 802.3 standard. IEEE 802.3 defines the physical layer and the media access control (MAC) sublayer of the data link layer. Ethernet is a wired technology that supports twisted-pair copper cabling (BASE-T) and fiber-optic cabling (BASE-R). The tables below list various Ethernet networking technologies by speed and cable/transceiver type.

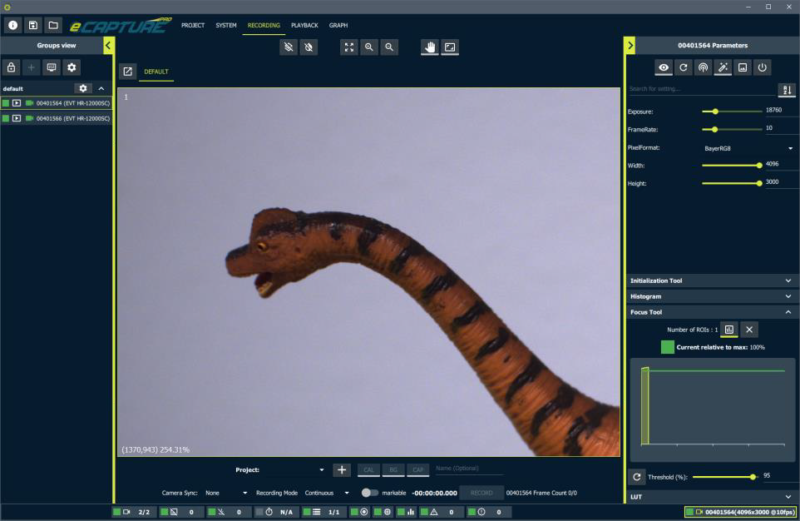

The processor inside a camera is constrained to minimize power consumption and reduce heat generation. As a result, its processing capability is limited when it has to handle very high frame rates and output post-processed images at the same time. To post-process large amounts of image data coming from a very high frame rate sensor, it is preferable to send the data to an external system for processing rather than use the camera processor's resources. Various systems used in the post-processing of images are:

Each photodiode on a sensor corresponds to a pixel of a digital image. While a photodiode produces an analog value, an image pixel holds a digital value. A pixel, short for ‘picture element’, is the smallest element of a digital image. Resolution, intensity, exposure, gain and frame rate are some of the basic concepts of digital imaging, and they are discussed below.
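To make the link between resolution, bit depth and frame rate concrete, here is a minimal Python sketch of the raw (uncompressed) data rate a camera produces. The function names, the example resolution and the short interface list are illustrative assumptions; the link figures are nominal signalling rates, and real usable throughput is lower once protocol overhead is subtracted.

```python
def raw_data_rate_gbps(width_px: int, height_px: int, bits_per_pixel: int, fps: float) -> float:
    """Uncompressed image data rate in Gbit/s, ignoring any protocol overhead."""
    bits_per_frame = width_px * height_px * bits_per_pixel
    return bits_per_frame * fps / 1e9


# Nominal signalling rates in Gbit/s (assumed subset; usable payload is lower).
NOMINAL_LINK_GBPS = {
    "Gigabit Ethernet (1000BASE-T)": 1.0,
    "USB 2.0": 0.48,
    "USB 3.2 Gen 1 (USB 3.0)": 5.0,
}


def links_that_fit(rate_gbps: float) -> list[str]:
    """Return the interfaces whose nominal rate is at least the required rate."""
    return [name for name, capacity in NOMINAL_LINK_GBPS.items() if capacity >= rate_gbps]


if __name__ == "__main__":
    # Hypothetical 8-bit mono camera: 1920 x 1200 pixels at 100 frames per second.
    rate = raw_data_rate_gbps(1920, 1200, 8, 100)
    print(f"{rate:.4f} Gbit/s", links_that_fit(rate))
```

Doubling either the frame rate or the bit depth doubles the required bandwidth, which is why very high frame rate sensors push data off the camera over fast interfaces.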

The processor inside a machine vision camera is usually an embedded processor or a field-programmable gate array (FPGA) which runs a model-specific firmware. This firmware is responsible for reading the pixel values from the image sensor, implementing image sensor features, processing pixel data to create a full image, applying image enhancement algorithms and communicating with external devices to output a complete image.

A lens is a device that forms an image of a scene by focusing the light entering through it. In simple words, a lens allows the camera to see the outside world clearly. A scene as seen by the camera is regarded as in focus if the edges appear sharp and out of focus if the edges appear blurry. Note that lenses used in machine vision cameras usually have fixed or manually adjustable focus, whereas lenses on consumer cameras, such as DSLR and point-and-shoot cameras, have autofocus. Angle of View (AoV), Field of View (FoV), Object Distance, Focal Length, Aperture and F-Stop are some of the terms often used when categorizing lenses. Below is a brief explanation of these terms:
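As a rough illustration of how these terms relate, the Python sketch below uses the simple pinhole approximation, which is only reasonable when the object distance is much larger than the focal length. The function names and the example sensor width, focal length and distances are hypothetical values chosen for illustration, not taken from any particular lens datasheet.

```python
import math


def angle_of_view_deg(sensor_size_mm: float, focal_length_mm: float) -> float:
    """Angle of view across one sensor dimension: 2 * atan(sensor / (2 * f))."""
    return math.degrees(2 * math.atan(sensor_size_mm / (2 * focal_length_mm)))


def field_of_view_mm(sensor_size_mm: float, focal_length_mm: float, object_distance_mm: float) -> float:
    """Width of the scene covered at the object distance (pinhole approximation)."""
    return sensor_size_mm * object_distance_mm / focal_length_mm


def f_number(focal_length_mm: float, aperture_diameter_mm: float) -> float:
    """F-stop N = f / D; a larger N means a smaller aperture opening."""
    return focal_length_mm / aperture_diameter_mm


if __name__ == "__main__":
    sensor_width = 7.2  # hypothetical sensor width in mm
    focal = 16.0        # hypothetical 16 mm lens
    print(round(angle_of_view_deg(sensor_width, focal), 1), "degrees")
    print(round(field_of_view_mm(sensor_width, focal, 500.0), 1), "mm wide at 500 mm")
    print("f/", f_number(focal, 4.0))
```

A shorter focal length gives a wider angle of view and therefore a larger FoV at the same object distance.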

Even though the main component of every machine vision system is a camera, no machine vision system is complete without all of the following components:

While a machine vision camera is responsible for capturing an image and sending it to the host PC, imaging software running on the host PC is responsible for:

When manufacturers quote the linear FoV, they mean metres per 1,000 metres. For example, 131 metres per 1,000 metres means that at a distance of 1,000 metres you can see 131 metres from side to side.

In order to make sure the camera is able to capture all the details required for analysis, machine vision systems need to be equipped with proper lighting. Various techniques for engineering lighting in a machine vision system are available based on the position, angle, reflective nature and color spectrum of the light source. These are:

CoaXPress (CXP) is a point-to-point communication standard for transmitting data over coaxial cable. The CoaXPress standard was first unveiled at the 2008 Vision Show in Stuttgart and is currently maintained by the JIIA (Japan Industrial Imaging Association). The table below lists various CXP standards.

That’s it in a nutshell. As we said at the beginning, no fancy technical terms to cloud your mind, just simple, straight-to-the-point explanations.

If the description quotes an angular FoV such as 6°, it means you can see a field 6 degrees wide through the lenses. The larger the angle, the wider the field of view. To convert degrees into metres per 1,000 metres, multiply the number of degrees by 17.5, because at a distance of 1,000 metres 1° spans roughly 17.5 metres. For example, 8° × 17.5 gives a linear FoV of 140 metres per 1,000 metres.
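The 17.5 rule of thumb can be checked against the exact geometry, where the width seen at a given distance is 2 × distance × tan(angle / 2). The Python sketch below is a minimal illustration; the function names are hypothetical, and the 17.5 constant is the rule of thumb from the text above.

```python
import math

METRES_PER_DEGREE = 17.5  # rule of thumb from the text, for a distance of 1,000 m


def linear_fov_rule_of_thumb(angle_deg: float) -> float:
    """Metres per 1,000 metres using the 17.5 m-per-degree approximation."""
    return angle_deg * METRES_PER_DEGREE


def linear_fov_exact(angle_deg: float, distance_m: float = 1000.0) -> float:
    """Exact width in metres seen at distance_m for a given angular FoV."""
    return 2 * distance_m * math.tan(math.radians(angle_deg) / 2)


def angular_fov_exact(width_m: float, distance_m: float = 1000.0) -> float:
    """Inverse conversion: angular FoV in degrees from a width at distance_m."""
    return math.degrees(2 * math.atan(width_m / (2 * distance_m)))


if __name__ == "__main__":
    for deg in (6, 8):
        print(deg, linear_fov_rule_of_thumb(deg), round(linear_fov_exact(deg), 1))
    # 131 metres per 1,000 metres expressed as an angle
    print(round(angular_fov_exact(131.0), 2))
```

For the narrow angles typical of binoculars, the rule of thumb and the exact value differ by well under a metre, so the simple multiplication is good enough in practice.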

2. Macro lens – Macro lenses are designed to achieve high magnifications and they generally work in the magnification range of .05x to 10x.

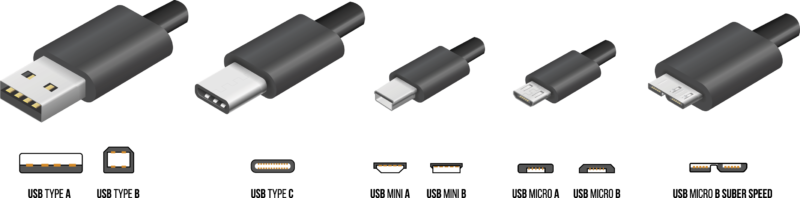

Universal Serial Bus (USB) standard was first released in 1996 and is maintained by the USB Implementers Forum (USB-IF). USB was designed to standardize the connection of peripherals to personal computers. USB can both communicate and supply electric power to peripherals such as keyboards, mouse, video cameras, and printers. The below table mentions various USB standards and their speeds.

When a camera captures an image of an object, what it’s really doing is capturing the light that the object has reflected. The degree to which light is absorbed or reflected is dependent on the object’s surface whether it is transparent, translucent or opaque.

1. Entocentric / Endocentric lens – These lenses come with a fixed focal length and are the most common lenses used in machine vision cameras.

A protective case that contains a lens mount, an image sensor, a processor, power electronics and a communication interface is what is referred to as a camera in machine vision.

It’s all about your positioning, really. If you are too close to, or too far away from, the eyepiece, your FoV will be affected: you might see the edges of the FoV slightly shaded, shadowed or “clipped”. This is called vignetting. To get a clear image and see the full FoV, you need to have the correct eye relief.

Manufacturers state the field of view in a couple of ways. Binoculars, spotting scopes and rangefinders measure the FoV in metres per 1,000 metres, whereas rifle scopes are measured in feet per 100 yards. Binocular manufacturers use either metres per 1,000 metres or degrees.

Each component in a machine vision system plays an important role in fulfilling the overall purpose of the system, which is to help machines make better decisions by looking at the outside world. Fulfilling this purpose requires arranging the components in order, so that information can flow from the capture of light through to the delivery and processing of a digital image.

There is an inverse relationship between the FoV and the level of magnification: the higher the magnification, the narrower the FoV. It’s fairly obvious when you think about it; as you close in on the object, you lose a great deal of the surrounding features.

In a nutshell, the field of view (FoV) is what you can see through the lens from edge to edge: everything from left to right and everywhere in between. But as with most things, there’s more to it than that. The eyepiece, the position and thickness of the lenses, the way they’re assembled and the magnification all contribute to the FoV. So let’s get into the FoV of binoculars.

FoV at 1,000 yards is the linear measurement of the field of view, usually expressed in feet per 1,000 yards; the alternative is the angular measurement in degrees. So 315 feet at 1,000 yards means that the field of view is 315 feet wide at a distance of 1,000 yards.
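Because both linear figures are the same width-to-distance ratio scaled to a different reference distance (1,000 yards is 3,000 feet), converting between feet per 1,000 yards and metres per 1,000 metres needs no metre-to-foot conversion at all. The Python sketch below illustrates this; the function names are hypothetical helpers, not from any library.

```python
import math

FEET_IN_1000_YARDS = 3000.0  # 1,000 yards = 3,000 feet


def ft_per_1000yd_to_m_per_1000m(feet: float) -> float:
    """Same width-to-distance ratio, rescaled from 3,000 ft to 1,000 m."""
    return feet / FEET_IN_1000_YARDS * 1000.0


def m_per_1000m_to_ft_per_1000yd(metres: float) -> float:
    """Inverse of ft_per_1000yd_to_m_per_1000m."""
    return metres / 1000.0 * FEET_IN_1000_YARDS


def ft_per_1000yd_to_degrees(feet: float) -> float:
    """Angular FoV in degrees for a width quoted in feet at 1,000 yards."""
    return math.degrees(2 * math.atan(feet / (2 * FEET_IN_1000_YARDS)))


if __name__ == "__main__":
    print(ft_per_1000yd_to_m_per_1000m(315.0), "m per 1,000 m")
    print(m_per_1000m_to_ft_per_1000yd(131.0), "ft per 1,000 yd")
    print(round(ft_per_1000yd_to_degrees(315.0), 2), "degrees")
```

In other words, a figure in feet per 1,000 yards is simply three times the equivalent figure in metres per 1,000 metres.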

Camera Link is a high-bandwidth protocol built for parallel communication. It standardizes the connection between cameras and frame grabbers. Camera Link High-Speed (CLHS) evolved from Camera Link and was first introduced in 2012. It delivers low-latency, low-jitter, real-time signals between a camera and a frame grabber and can carry both image and configuration data over both copper and fiber cabling.

Pretty much every feature to do with optics is linked to other features in some way. It sounds complicated, but once you get your head around it, it all becomes clear.

Put simply, eye relief is the distance between the eyepiece and your eye at which you see the whole image. Having the correct eye relief allows you to see the full FoV. It is given in the binocular manufacturer’s specifications, and the best eye relief is somewhere between 14 and 18 mm.

At the core of any camera lies an image sensor that converts incoming light (photons) into electrical signals (electrons). An image sensor consists of an array of light-sensitive photodiodes, each acting as a potential well in which the energy of incoming photons is converted into charge and then into a small voltage. This voltage is then passed to an Analogue-to-Digital Converter (ADC), which outputs a digital value. Image sensors available on the market can be categorized by physical structure (CCD/CMOS), pixel arrangement (Area Scan/Line Scan), chroma type (Color/Mono), shutter type (Global/Rolling), light spectrum (UV/SWIR/NIR) and sensitivity to polarized light.
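The ADC step can be pictured with an idealized linear model: the pixel voltage is clamped to the converter’s input range and mapped onto 2^bits discrete levels. The Python sketch below is only a simplified assumption for illustration; real sensors add gain, offset, noise and nonlinearity, and the function name and parameters are hypothetical.

```python
def adc_convert(voltage: float, full_scale_v: float, bits: int) -> int:
    """Map an analog pixel voltage to an unsigned digital code (ideal linear ADC)."""
    max_code = (1 << bits) - 1  # e.g. 255 for an 8-bit ADC, 4095 for 12-bit
    # Saturate voltages outside the input range, as a real converter would clip them.
    clamped = min(max(voltage, 0.0), full_scale_v)
    return round(clamped / full_scale_v * max_code)


if __name__ == "__main__":
    # Hypothetical 1.0 V full-scale range at three common bit depths.
    for bits in (8, 10, 12):
        print(bits, adc_convert(0.25, 1.0, bits), adc_convert(1.5, 1.0, bits))
```

A higher bit depth divides the same voltage range into more levels, so the digital pixel value can represent finer differences in intensity.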
