While DTC represents a substantial advance in virtual try-on technology, it has limitations. It still struggles with fine-grained text and full-body product imagery, which motivates further research into auxiliary inputs such as pose detection. Nevertheless, DTC's ability to combine real-time performance with high detail retention underscores its potential as a transformative tool in e-commerce, letting consumers see products placed dynamically within their own spaces. The implications for the consumer experience are significant: DTC offers a practical path from viewing a product to seeing it placed in an image of the user's environment, without manual image editing.

While DTC shows promise in e-commerce, further work is needed on challenges such as fine text detail and full-body product imagery.

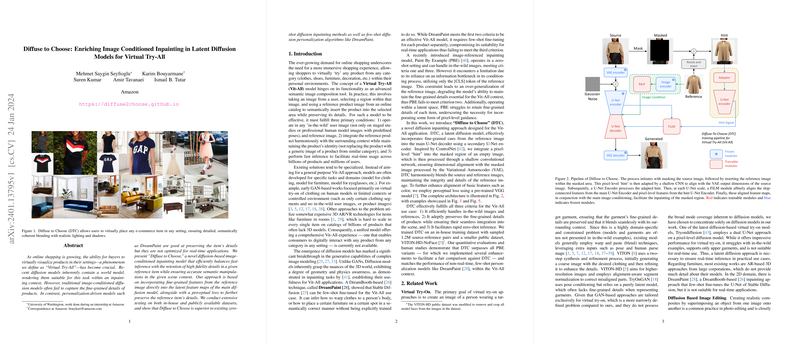

The Diffuse to Choose (DTC) model is presented as an advancement in latent diffusion models for image-conditioned inpainting.
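For readers less familiar with latent inpainting, the sketch below shows how an inpainting U-Net input is commonly assembled. It assumes a Stable Diffusion-style layout (a 4-channel noisy latent, a 1-channel mask and the 4-channel latent of the masked image, concatenated along channels); the function names are hypothetical, random arrays stand in for real VAE latents, and DTC's exact input format may differ. In this sketch the reference product image is not part of the tensor; it would be supplied separately as conditioning.

```python
import numpy as np


def mask_image(image, mask):
    """Blank out the region to be inpainted (pixel space).

    image: (C, H, W) array; mask: (H, W) array with 1 where content is generated.
    In a real pipeline the result would then be encoded by the VAE.
    """
    return image * (1.0 - mask)[None, ...]


def build_inpainting_input(noisy_latent, mask, masked_image_latent):
    """Concatenate [noisy latent, mask, masked-image latent] along channels.

    Assumes all inputs share the latent spatial size (H, W);
    a 2-D mask gets a channel axis added.
    """
    if mask.ndim == 2:
        mask = mask[None, ...]
    if not (noisy_latent.shape[1:] == mask.shape[1:] == masked_image_latent.shape[1:]):
        raise ValueError("spatial sizes must match")
    return np.concatenate([noisy_latent, mask, masked_image_latent], axis=0)


# Usage with random stand-ins for the VAE latents (hypothetical sizes).
rng = np.random.default_rng(0)
mask = np.zeros((8, 8))
mask[2:6, 2:6] = 1.0  # square region to fill
noisy = rng.standard_normal((4, 8, 8))
masked_latent = rng.standard_normal((4, 8, 8))
unet_input = build_inpainting_input(noisy, mask, masked_latent)
print(unet_input.shape)  # (9, 8, 8)
```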

Existing approaches have largely been task-specific or reliant on costly 3D modeling, which makes scaling to vast product ranges impractical. Standard image-conditioned diffusion models have historically failed to retain the fine details critical to rendering a product faithfully. Diffuse to Choose (DTC), a latent diffusion model, was developed to close this gap. DTC incorporates a secondary U-Net that channels pixel-level hints from the reference image into the main U-Net's decoder through affine transformation layers. This integration preserves product-specific details while blending the product coherently into its context. Notably, unlike existing approaches, DTC is designed to operate zero-shot, without per-product fine-tuning.
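A minimal sketch of the kind of affine (FiLM-style) fusion described above, assuming the hint features from the secondary U-Net are mapped by 1x1 projections to a spatially varying scale `gamma` and shift `beta` that modulate the matching main-decoder features. The names `conv1x1`, `zero_init_params` and `film_fuse`, the `(1 + gamma)` parameterization and the zero initialization are illustrative assumptions, not DTC's published layer definitions.

```python
import numpy as np


def conv1x1(weight, bias, feat):
    """1x1 convolution: weight (C_out, C_in), bias (C_out,), feat (C_in, H, W)."""
    return np.einsum("oc,chw->ohw", weight, feat) + bias[:, None, None]


def zero_init_params(c_dec, c_hint):
    """Hypothetical zero-initialized projections for the scale and shift maps."""
    return {
        "w_gamma": np.zeros((c_dec, c_hint)), "b_gamma": np.zeros(c_dec),
        "w_beta": np.zeros((c_dec, c_hint)), "b_beta": np.zeros(c_dec),
    }


def film_fuse(decoder_feat, hint_feat, params):
    """Modulate main-decoder features with hint features (FiLM-style sketch).

    decoder_feat: (C_dec, H, W) from a main U-Net decoder block.
    hint_feat:    (C_hint, H, W) from the matching secondary (hint) U-Net block.
    gamma and beta vary per pixel, so spatial detail in the hint is kept.
    """
    gamma = conv1x1(params["w_gamma"], params["b_gamma"], hint_feat)
    beta = conv1x1(params["w_beta"], params["b_beta"], hint_feat)
    return (1.0 + gamma) * decoder_feat + beta


rng = np.random.default_rng(0)
dec = rng.standard_normal((8, 16, 16))
hint = rng.standard_normal((4, 16, 16))
params = zero_init_params(8, 4)
print(np.allclose(film_fuse(dec, hint, params), dec))  # True: starts as identity
params["w_beta"] = 0.1 * rng.standard_normal((8, 4))
print(np.allclose(film_fuse(dec, hint, params), dec))  # False: hint now shifts features
```

Zero-initializing the projections makes the fusion start as an identity map, so the main U-Net's behavior is unchanged until the hint pathway learns a useful signal. This is a common design choice, assumed here rather than taken from the paper.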

Comprehensive evaluations show that DTC outperforms previous image-conditioned inpainting models such as Paint By Example (PBE) and few-shot personalization algorithms such as DreamPaint. Training adds a perceptual loss to align low-level image features, and a larger, image-only DINOv2 encoder expands the model's capacity. DTC is further refined by using all CLIP patch embeddings, which improves the depiction of item details. Human evaluation studies further benchmark DTC against competing methods, confirming its strength in the Vit-All setting for both fidelity and semantically coherent product placement.
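A perceptual loss compares images in the feature space of a fixed network rather than pixel by pixel. The sketch below computes a weighted sum of per-layer mean squared feature differences. In practice the features come from a frozen pretrained CNN such as VGG; here `toy_feature_pyramid` is a hypothetical stand-in built from image gradients at two scales so the example runs without model weights, and the equal layer weights are an assumption.

```python
import numpy as np


def toy_feature_pyramid(img, levels=2):
    """Hypothetical stand-in for a frozen pretrained network such as VGG.

    Returns per-'layer' activations: horizontal and vertical image gradients
    at each scale, with 2x average pooling between scales.
    img: (C, H, W); H and W must be divisible by 2 ** (levels - 1).
    """
    feats = []
    x = img
    for level in range(levels):
        if level > 0:
            c, h, w = x.shape
            x = x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))  # 2x avg pool
        feats.append(np.diff(x, axis=2))  # horizontal gradients
        feats.append(np.diff(x, axis=1))  # vertical gradients
    return feats


def perceptual_loss(pred, target, features=toy_feature_pyramid, layer_weights=None):
    """Weighted sum over layers of the mean squared feature difference."""
    fp, ft = features(pred), features(target)
    if layer_weights is None:
        layer_weights = [1.0] * len(fp)
    return float(sum(w * np.mean((a - b) ** 2) for w, a, b in zip(layer_weights, fp, ft)))


rng = np.random.default_rng(0)
target = rng.random((3, 32, 32))
print(perceptual_loss(target, target))  # 0.0
print(perceptual_loss(target, target + 0.5) < 1e-12)  # True: toy gradient features ignore a uniform offset
print(perceptual_loss(target, rng.random((3, 32, 32))) > 0)  # True
```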

The model achieves superiority through a secondary U-Net, affine transformation layers, and optimization with CLIP patches.

The increasing ubiquity of online shopping calls for advances in virtually visualizing products within consumer environments, a concept operationalized as Virtual Try-All (Vit-All). Vit-All models rest on the ability to semantically compose images by embedding an online catalog item into a user-provided environment while preserving the item's intrinsic details. An effective Vit-All model must meet three conditions: (1) it operates in any 'in-the-wild' setting; (2) it integrates the item seamlessly while maintaining product identity; and (3) it runs fast enough for real-time, large-scale deployment.
