What are the applications of potassium hydroxide and ... - potassium hydroide

Before getting into more modern history on a light note, in May 2015 the Drone-Industry Show accepted the use of the word “drone” in place of “unmanned aerial vehicles”. Company representatives and government employees shunned the term because they thought that the word didn’t convey the vehicles’ sophistication.

Quadcopters

In 2012, Congress passed the FAA Modernization and Reform Act (“FMRA”) to direct the FAA to develop rules that apply specifically to drones. The FAA is using FMRA as a mechanism to allow companies to perform “commercial” drone services by issuing a Section 333 grant of exemption while permanent rules are being drafted and reviewed. The first waivers were granted in September 2014 to four film-making companies.

This was not just great for weekend radio-control flyers, but useful in industries as diverse as aerial photos for media, environment management, security surveillance, and agriculture.

When a camera captures an image of an object, what it’s really doing is capturing the light that the object has reflected. The degree to which light is absorbed or reflected depends on the object’s surface, that is, whether it is transparent, translucent or opaque.

With the friendly skies being infiltrated by toy drone enthusiasts, news drones, industrial spies, and larger flying objects, it became necessary for the Federal Aviation Administration (FAA) to regulate the new technology aloft.

In order to make sure the camera is able to capture all the details required for analysis, machine vision systems need to be equipped with proper lighting. Various techniques for engineering lighting in a machine vision system are available based on the position, angle, reflective nature and color spectrum of the light source. These are:

Sparrowhawk uav

If you need Environmental Consulting, Environmental Drilling, Air Permitting, Spill Management, Oilfield Construction, Environmental Engineering, Safety Training or General Contracting services, contact us at Talon/LPE. We work throughout Texas, Oklahoma, Colorado, and New Mexico. We also offer safety and training classes.

Each component in a machine vision system plays an important role in fulfilling the overall purpose of the system, which is to help machines make better decisions by looking at the outside world. Fulfilling this purpose requires the components to be positioned in order, so that information can flow from the capture of light through to the delivery and processing of a digital image.

“We’re not afraid to use that word anymore,” said Kathleen Swain, chief pilot for the drone program of United Services Automobile Association, a provider of insurance for military families. “Everybody knows a drone is a drone.”

"The rule will be in place within the year," FAA Deputy Administrator Michael Whitaker said at the House Oversight Committee hearing, "hopefully before June 17, 2016."

In machine vision, a camera is a protective case that contains a lens mount, an image sensor, a processor, power electronics and a communication interface.

Fixed-wing UAV

In 1782, the Montgolfier brothers in France were the first to experiment with balloons using unmanned aerostats before going up themselves. UAVs were then used as a tactic of war starting on August 22, 1849, when the Austrians attacked the Italian city of Venice with unmanned balloons loaded with explosives.

Ethernet is a Local Area Network (LAN) technology that was standardized in 1983 by the Institute of Electrical and Electronics Engineers (IEEE) as the 802.3 standard. IEEE 802.3 defines the physical layer and the media access control (MAC) sublayer of the data link layer. Ethernet is a wired technology that supports twisted-pair copper wiring (BASE-T) and fiber-optic wiring (BASE-R). The tables below list various Ethernet networking technologies by speed and cable/transceiver type.

While the FAA has previously said it was seeking to complete the rule as swiftly as possible, Whitaker’s comments are the most specific yet about timing.

A lens is a device that magnifies a scene by focusing the light entering through it. In simple words, a lens allows the camera to see the outside world clearly. A scene as seen by the camera is regarded as in focus if the edges appear sharp and out of focus if the edges appear blurry. Note that lenses used in machine vision cameras often have a fixed or manually adjustable focus, whereas lenses used in consumer cameras, for example DSLR and point-and-shoot cameras, have auto-focus. Angle of View (AoV), Field of View (FoV), Object Distance, Focal Length, Aperture and F-Stop are some of the terms often used when categorizing lenses. Below is a brief explanation of these terms:
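As a rough illustration of how these lens terms relate to one another, the sketch below uses the standard thin-lens approximations. The function names and the example numbers are hypothetical and chosen only for illustration; real lenses deviate from these idealized formulas, especially at close working distances.

```python
import math


def angle_of_view_deg(sensor_dim_mm, focal_length_mm):
    """Angle of view along one sensor dimension (thin lens, focused far away)."""
    return math.degrees(2 * math.atan(sensor_dim_mm / (2 * focal_length_mm)))


def field_of_view_mm(sensor_dim_mm, focal_length_mm, object_distance_mm):
    """Approximate scene size covered at a given object distance (similar triangles)."""
    return sensor_dim_mm * object_distance_mm / focal_length_mm


def f_number(focal_length_mm, aperture_diameter_mm):
    """F-stop is the ratio of focal length to aperture diameter."""
    return focal_length_mm / aperture_diameter_mm


# Hypothetical example: 8 mm wide sensor, 16 mm lens, object 500 mm away.
print(angle_of_view_deg(8, 16))
print(field_of_view_mm(8, 16, 500))
print(f_number(16, 8))
```

A shorter focal length widens the angle of view, while a larger aperture diameter lowers the f-number and lets in more light.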

In June 2015, the FAA stated that it intends to issue final regulations for operating small commercial drones by the middle of 2016, according to a top administrator.

U.S. Air Force UAV

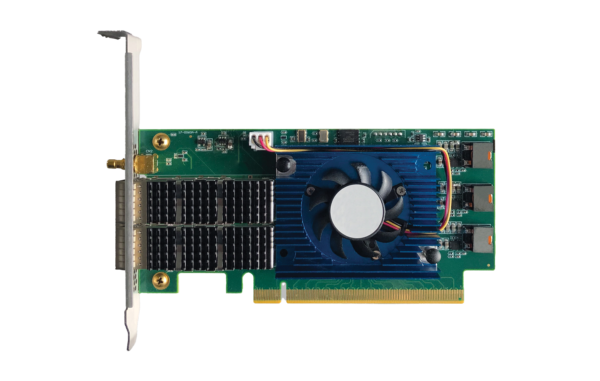

The processor inside a camera is constrained to minimize power consumption and reduce heat generation. As a result, it is limited in its processing capability when it comes to handling very high frame rates and outputting post-processed images at the same time. To post-process large amounts of image data coming from a very high frame rate sensor, it is preferred to send this data to an external system for processing instead of using resources from the camera processor. Various systems used in the post-processing of images are:
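To get a feel for why high frame rates strain both the camera processor and the link to an external system, the sketch below estimates the raw, uncompressed data rate of a sensor. The function names and the sensor figures are assumptions made for illustration; the 1 Gbit/s figure is the nominal Gigabit Ethernet line rate and ignores protocol overhead.

```python
def raw_data_rate_bps(width_px, height_px, bits_per_pixel, frames_per_second):
    """Raw (uncompressed) sensor output in bits per second."""
    return width_px * height_px * bits_per_pixel * frames_per_second


def fits_link(data_rate_bps, link_capacity_bps):
    """True if the raw stream fits within the nominal link capacity."""
    return data_rate_bps <= link_capacity_bps


# Hypothetical sensor: 1920 x 1080 pixels, 8 bits per pixel, 100 frames per second.
rate = raw_data_rate_bps(1920, 1080, 8, 100)
print(f"{rate / 1e9:.2f} Gbit/s")

# Nominal Gigabit Ethernet line rate, ignoring protocol overhead.
GIGABIT_ETHERNET_BPS = 1_000_000_000
print(fits_link(rate, GIGABIT_ETHERNET_BPS))
```

In this hypothetical case the raw stream exceeds what a nominal 1 Gbit/s link can carry, which is the kind of situation where a faster interface or an external processing system comes into play.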

The first certificate of authorization was issued on May 18, 2006, when the FAA gave permission for a drone to be used within U.S. civilian airspace to search for survivors of disasters. Requests made in 2005 had not been approved, and no drones were used following Hurricane Katrina.

The future of unmanned aerial vehicles in industry is assured. It’s time to think about how using a drone can help your business.

1. Entocentric / Endocentric lens – These lenses come with a fixed focal length and are the most common lenses used in machine vision cameras.

Camera Link is a high-bandwidth protocol built for parallel communication. It standardizes the connection between cameras and frame grabbers. Camera Link High-Speed (CLHS) evolved from Camera Link and was first introduced in 2012. It delivers low-latency, low-jitter, real-time signals between a camera and a frame grabber and can carry both image and configuration data over copper or fiber cabling.

In fact, from April to July 2015, the number of exemptions soared 90%. In June alone, the FAA granted authorizations for commercial unmanned aircraft systems in 42 states and Puerto Rico – a total of 714 in one month.

By July 2015, permits were being issued at a rate of about 250 a month while the FAA reviewed over 4,500 public comments on the proposed rules.

In fact, when USAA received positive news from the Federal Aviation Administration in April 2015 about using unmanned aircraft for damage inspections, the company announced on its website, ‘FAA Approves Drone Petition.’

UCAV

At the core of any camera lies an image sensor that converts incoming light (photons) into electrical signals (electrons). An image sensor is composed of an array of light-sensitive elements called “photodiodes”, each of which acts as a potential well where the energy of incoming photons is converted into a small voltage. This voltage is then passed to an Analogue-to-Digital Converter (ADC), which outputs a digital value. Image sensors available in the market can be categorized by physical structure (CCD/CMOS), pixel arrangement (Area Scan/Line Scan), chroma type (Color/Mono), shutter type (Global/Rolling), light spectrum (UV/SWIR/NIR) and sensitivity to the polarization of light.
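The conversion from an analog voltage to a digital value can be sketched as an idealized ADC. This is a simplified model for illustration only: the function name, the reference voltage and the bit depths are assumptions, and real sensor ADCs also deal with noise, offsets and non-linearity.

```python
def quantize(voltage, v_ref, bits):
    """Map a voltage in [0, v_ref] to an integer code of the given bit depth."""
    levels = 2 ** bits
    code = int(voltage / v_ref * levels)
    # Clamp to the valid code range, so full-scale and out-of-range inputs saturate.
    return max(0, min(levels - 1, code))


# Hypothetical 1.0 V reference: the same half-scale voltage at two bit depths.
print(quantize(0.5, 1.0, 8))
print(quantize(0.5, 1.0, 12))
```

A higher bit depth splits the same voltage range into more levels, so small differences in light intensity are less likely to collapse into the same digital value.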

By 2017-2018, expect drones to be widely used for situational awareness, operations management, modeling/mapping and more environmental monitoring.

In the 1970s and 1980s a variety of new technologies emerged, including the Global Positioning System, long-range data links, lightweight computer equipment and composite materials, as well as satellite communications and digital flight controls, that spawned a new generation of drones.

During World War I the Ruston Proctor Aerial Target of 1916 was the first pilotless aircraft built using A. M. Low’s radio control techniques.

The processor inside a machine vision camera is usually an embedded processor or a field-programmable gate array (FPGA) which runs a model-specific firmware. This firmware is responsible for reading the pixel values from the image sensor, implementing image sensor features, processing pixel data to create a full image, applying image enhancement algorithms and communicating with external devices to output a complete image.

2. Macro lens – Macro lenses are designed to achieve high magnifications, and they generally work in the magnification range of 0.05x to 10x.
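Magnification can be estimated as the ratio of the sensor size to the size of the scene being imaged. The sketch below uses that ratio to check whether a setup falls within the macro range quoted above; the function names and the example dimensions are hypothetical.

```python
def magnification(sensor_width_mm, field_of_view_width_mm):
    """Magnification as the ratio of sensor width to imaged scene width."""
    return sensor_width_mm / field_of_view_width_mm


def in_macro_range(m, low=0.05, high=10.0):
    """True if the magnification lies within the 0.05x to 10x range."""
    return low <= m <= high


# Hypothetical setup: an 8 mm wide sensor imaging a 16 mm wide part.
m = magnification(8.0, 16.0)
print(m, in_macro_range(m))
```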

Talon/LPE submitted our request for our Section 333 grant of exemption in July 2015. We are hopeful the exemption will be granted by early October 2015, or sooner.

While a machine vision camera is responsible for capturing an image and sending it to the host PC, imaging software running on the host PC is responsible for:

The next five years are going to be big for drones in the commercial sector. A few projections include enhanced usage in agriculture, oil rigs and wind farms, mining, and bridges.

Meanwhile, the Alaska Certificate of Waiver or Authorization was followed by 990 FAA exemptions by July 2015 with more than 60 granted to Texas companies and individuals.

Each photodiode on a sensor corresponds to a pixel of a digital image. While a photodiode is associated with the analog value, an image pixel is associated with the digital value. A pixel is the smallest element in a digital image and is an abbreviation of ‘picture element’. Resolution, intensity, exposure, gain and frame rate are some of the basic concepts related to digital imaging which are discussed below.

Even though the main component of every machine vision system is a camera, no machine vision system is complete without all of the following components:

CoaXPress (CXP) is a point-to-point communication standard for transmitting data over a coaxial cable. The CoaXPress standard was first unveiled at the 2008 Vision Show in Stuttgart and is currently maintained by the JIIA (Japan Industrial Imaging Association). The table below lists the various CXP standards.

The following image shows the positioning of each component. Each component mentioned in the image is discussed in detail below.

The Universal Serial Bus (USB) standard was first released in 1996 and is maintained by the USB Implementers Forum (USB-IF). USB was designed to standardize the connection of peripherals to personal computers. USB can both communicate with and supply electric power to peripherals such as keyboards, mice, video cameras, and printers. The table below lists the various USB standards and their speeds.

Do you know the date the first commercial drone was launched? If you said unmanned aerial vehicles (UAV) were first used in the 1970s-1980s, you were off by about 200 years.

Ms.Cici

8618319014500
