Industrial Camera | TOSHIBA TELI CORPORATION - industrial camera

In photography, the term macro refers to extreme close-ups. Macro lenses are normal to long-focus lenses capable of focusing on extremely close subjects, thereby rendering large reproductions. The magnification ratio or magnification factor is the size of the subject projected onto the image sensor in comparison to its actual size. A macro lens’ magnification ratio is calculated at its closest focusing distance. A true macro lens is capable of achieving a magnification ratio of 1:1 or higher. Lenses with magnification ratios from 2:1 to 10:1 are called super macro. Ratios over 10:1 cross over into the field of microscopy. When shopping for a macro lens, keep in mind that in the context of kit lenses and point-and-shoot cameras, some manufacturers use the macro moniker as marketing shorthand for “close-up photography.” These products do not achieve 1:1 magnification ratios. When in doubt, check the technical specifications.
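The classification above is simple arithmetic: magnification is projected image size divided by actual subject size. The sketch below buckets a maximum magnification using the thresholds in this paragraph. The function names are illustrative, not taken from any library.

```python
def magnification_ratio(image_size_mm: float, subject_size_mm: float) -> float:
    """Magnification: size projected on the sensor divided by the subject's actual size."""
    return image_size_mm / subject_size_mm


def classify_macro(magnification: float) -> str:
    """Bucket a lens's maximum magnification using the thresholds described in the text."""
    if magnification > 10:
        return "microscopy"            # ratios over 10:1
    if magnification >= 2:
        return "super macro"           # 2:1 up to 10:1
    if magnification >= 1:
        return "true macro"            # at least 1:1
    return "close-up (not true macro)"


# A 24 mm-wide coin that also spans 24 mm on the sensor is reproduced at 1:1.
print(classify_macro(magnification_ratio(24, 24)))
```

A kit lens marketed as "macro" with a 1:2 (0.5x) maximum magnification lands in the last bucket. That is exactly the case the paragraph warns about.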


Beyond portraiture, long-focus lenses are useful for isolating subjects in busy and crowded environments. Photojournalists, wedding, and sports photographers exploit this ability regularly. Due to their magnifying power, super telephoto lenses are a mainstay for wildlife and nature photographers. Lastly, long-focus lenses are frequently used by landscape photographers to capture distant vistas or to isolate a feature from its surroundings.

The constant angle of view of a prime lens forces this type of experimentation (“zooming with your feet”) because the only alternatives are bad pictures or no pictures. Furthermore, restricting yourself to a single focal length for an extended period acquaints you with its angle of view and allows you to visualize a composition before raising the camera to your face.


Let’s see how these discrete elements work together when evaluating a product in a manufacturing operation, a typical example of an automated system in practice.

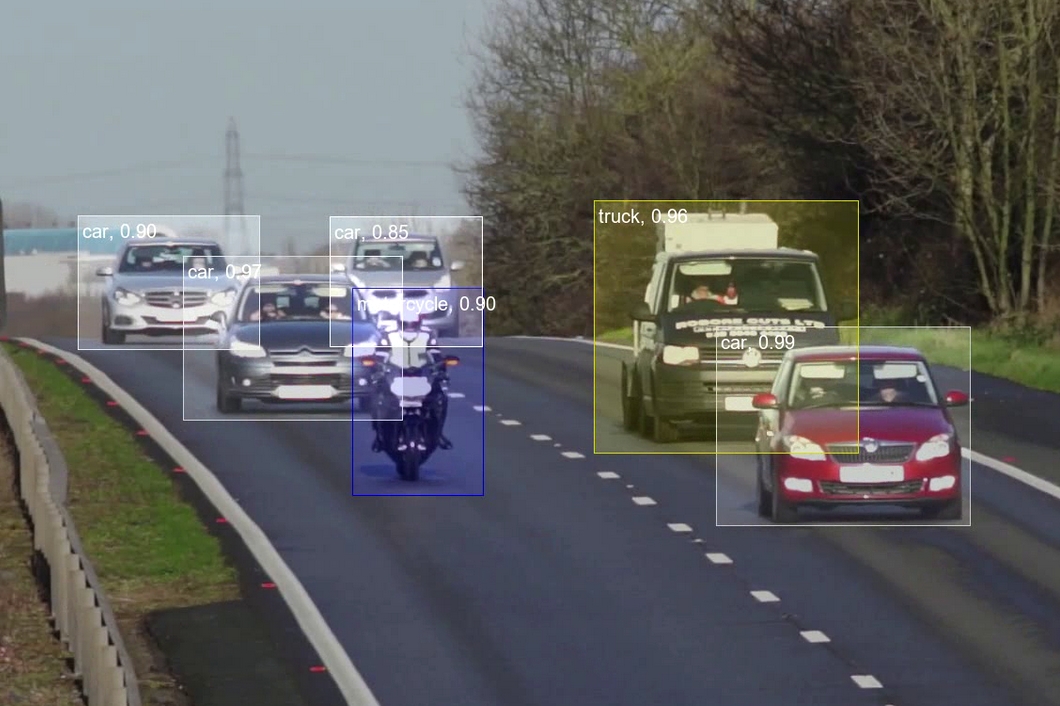

Computer vision, for example, can process images or video online, along with data from motion detectors, infrared sensors, or other sources. The next megatrend is Edge AI, which moves computer vision from the cloud to the edge, close to the sensors gathering the data. Machine vision is a subclass of computer vision.

For instance, full-frame cameras have image sensors measuring 36 × 24 mm, with a diagonal of approximately 43 mm, yet the 50 mm lens is conventionally considered normal. On APS‑C cameras (24 × 16 mm), whose diagonal spans about 29 mm, a 35 mm focal length is regarded as normal. This is primarily because its angle of view is similar to that of a 50 mm lens on the full-frame format. Normal focal lengths therefore differ as a function of the camera’s image sensor size. As you continue reading, keep in mind that descriptive terms such as “ultra-wide,” “short,” and “long” implicitly refer to the angle of view of a lens.
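The sensor diagonals and the full-frame-to-APS‑C conversion can be checked with a few lines of Python. This sketch assumes the nominal 24 × 16 mm APS‑C size used in the text; real APS‑C sensors vary slightly by manufacturer.

```python
import math


def sensor_diagonal(width_mm: float, height_mm: float) -> float:
    """Diagonal of a rectangular sensor via the Pythagorean theorem."""
    return math.hypot(width_mm, height_mm)


FULL_FRAME = (36.0, 24.0)
APS_C = (24.0, 16.0)  # nominal size from the text; actual sensors differ a little

ff_diag = sensor_diagonal(*FULL_FRAME)    # about 43.3 mm
apsc_diag = sensor_diagonal(*APS_C)       # about 28.8 mm

# Crop factor: ratio of the full-frame diagonal to the smaller sensor's diagonal.
crop_factor = ff_diag / apsc_diag         # 1.5 for these nominal sizes

# APS-C focal length with roughly the same angle of view as 50 mm on full frame.
equivalent_normal = 50.0 / crop_factor    # about 33 mm, near the conventional 35 mm

print(f"full frame {ff_diag:.1f} mm, APS-C {apsc_diag:.1f} mm, "
      f"crop {crop_factor:.2f}, 50 mm equivalent {equivalent_normal:.1f} mm")
```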

Machine vision is the ability of a computer to see its environment. For example, a video camera’s output passes through analog-to-digital conversion and digital signal processing. The resulting image data is then sent to a computer or robot controller.

Understanding the anatomy and capabilities of machine vision systems helps you determine whether an application is suitable for a camera-based system. A camera can capture whatever a human eye can see, but deciphering and reporting that data can be daunting. Working with a vendor knowledgeable in these systems, lighting, and techniques can save a significant amount of time in the long run.

As you have learned in the section on apertures and f‑numbers, “an increase in focal length decreases the intensity of light reaching the image sensor.” This relationship is most obvious in zoom lenses. A “variable” aperture zoom lens is a lens whose maximum aperture becomes smaller with increased focal length. These types of zoom lenses are simple to spot because they list a maximum aperture range instead of a single number. The range specifies the maximum aperture for the shortest and longest focal lengths of the zoom range. Variable aperture lenses are the most common type of zoom lens. A constant aperture or “fixed” aperture zoom lens is one whose maximum aperture remains constant across the entire zoom range. Fixed aperture lenses are typically more massive and more expensive than their variable aperture counterparts. They are also more straightforward to work with when practicing manual exposure at the maximum aperture since no compensation for lost light is required during zooming.
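The light lost by a variable-aperture zoom can be expressed in stops. The light reaching the sensor scales with 1/N² for f-number N, so the difference between two f-numbers is 2·log₂(N₂/N₁) stops. The "18–55 mm f/3.5–5.6" label below is a hypothetical example of how such lenses are specified.

```python
import math


def stops_between(f_number_a: float, f_number_b: float) -> float:
    """Exposure difference in stops when going from f_number_a to f_number_b.

    Light gathered is proportional to 1 / N**2, so one stop corresponds to
    a factor of sqrt(2) in the f-number.
    """
    return 2 * math.log2(f_number_b / f_number_a)


# Hypothetical variable-aperture zoom labelled "18-55 mm f/3.5-5.6":
# wide open at 55 mm it admits less light than wide open at 18 mm.
lost = stops_between(3.5, 5.6)
print(f"Zooming from 18 mm to 55 mm at maximum aperture costs about {lost:.2f} stops")

# A constant-aperture zoom (e.g. f/2.8 throughout) loses nothing.
print(stops_between(2.8, 2.8))
```

The second call illustrates why constant-aperture zooms are easier to use in manual exposure: the compensation is always zero.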

When selecting a machine vision camera, you have to consider several factors. First, make sure the camera you choose suits your application. For instance, a camera used for robot guidance won’t have the same characteristics as one used for production control. The choice of each feature will depend on both your budget and your application.

Machine vision cameras combine image sensors with specialized optics to capture images. Hardware and software then process, evaluate, and measure those images to support precise decision-making. With the correct optics and resolution, a machine vision camera can detect object details that are almost invisible to the human eye.

Due to their ability to magnify distant objects, long-focus lenses have many uses. They are almost universally lauded for portraiture because their narrow angle of view allows for higher subject magnification from conventionally more pleasing perspectives. As a rule of thumb, a desirable focal length for a portrait lens starts at twice the normal focal length for the camera system (about 85 mm for full-frame and 56 mm for APS‑C).

A machine vision system uses a sensor, such as one mounted on a robot, to see and identify physical objects with the help of a computer. Many industrial processes use such systems, including optical character recognition, material inspection, currency recognition, object recognition, pattern recognition, and electronic component analysis.

A “normal” lens is defined as one whose focal length is equal to the approximate diagonal length of a camera’s image sensor. In practice, such lenses tend to fall into a range of slightly longer focal lengths that are claimed to possess an angle of view comparable to that of the human eye’s cone of visual attention, which is about 55°.

Machine vision systems are embedded components that use data extracted from captured images to automatically guide manufacturing and production operations, such as go/no-go testing and quality control. These systems also play a role in inspection and automated assembly verification. In these tasks, they can direct material-handling equipment to position the products or materials a particular process requires.


A prime or fixed focal length lens has a set focal length that cannot be changed. There are several critical differences between prime and zoom lenses that you should know. Prime lenses are generally smaller, faster, and have better optical characteristics than zoom lenses. Despite this, photographers frequently opt to shoot with zoom lenses because of their convenience: a single lens can replace several of the most popular focal length prime lenses. This is especially important when you’d prefer to pack light, such as during a trip or a hike.

Computer vision and machine vision are overlapping technologies. A machine vision system needs a computer and specific software to function, whereas computer vision does not need to be linked to a machine.

With the help of aerial drones, machine vision algorithms can analyze vast agricultural fields in real time to identify areas affected by pests or diseases. This helps farmers target interventions precisely where needed, reducing blanket pesticide application and minimizing environmental impact.

Wide-angle lenses represent the only practical method of capturing a scene whose essential elements would otherwise fall outside the angle of view of a normal lens. Conventional subjects of ultra wide-angle lenses include architecture (especially interiors), landscapes, seascapes, cityscapes, astrophotography, and the entire domain of underwater photography. Wide-angle lenses are often used for photojournalism, street photography, automotive, some sports, and niche portraiture.

A zoom lens allows photographers to vary its effective focal length through a specified range, which alters the angle of view and magnification of the image. Zoom lenses are described by stating their focal length range from the shortest to longest, such as 24–70 mm and 70–200 mm. The focal length range of a zoom lens directly correlates to its zoom ratio, which is derived by dividing the longest focal length by the shortest. Both of the lenses above have a zoom ratio of approximately 2.9x, or 2.9:1. The zoom ratio also describes the amount of subject magnification a single lens can achieve across its available focal length range.
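The zoom-ratio arithmetic for the two example lenses can be reproduced directly. This is a minimal sketch; the input validation is an illustrative choice, not part of any standard.

```python
def zoom_ratio(shortest_mm: float, longest_mm: float) -> float:
    """Zoom ratio: the longest focal length divided by the shortest."""
    if shortest_mm <= 0 or longest_mm < shortest_mm:
        raise ValueError("expected 0 < shortest <= longest")
    return longest_mm / shortest_mm


for wide, tele in [(24, 70), (70, 200)]:
    print(f"{wide}-{tele} mm: {zoom_ratio(wide, tele):.1f}x")
```

Both lenses print as 2.9x: 70/24 is about 2.92 and 200/70 is about 2.86.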

The angle of view describes how much of a scene the lens captures and projects onto your camera’s image sensor. It is expressed in degrees of arc and measured diagonally across the image sensor. Thus, the angle of view of a lens of a given focal length changes with the size of the camera’s image sensor. For example, a 50 mm lens has a wide angle of view on a medium format camera, a normal angle of view on a full-frame camera, a narrower angle of view on an APS‑C camera, and a narrow angle of view on a Micro Four-Thirds camera.

If you’re into math (and who isn’t?), the general formula for the diagonal angle of view $\alpha$ of a lens with focal length $f$ on a sensor with diagonal $d$ is:

$$\alpha = 2\arctan\left(\frac{d}{2f}\right)$$
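The angle-of-view relationship α = 2·arctan(d / 2f), with d the sensor diagonal and f the focal length, is easy to evaluate in code. The sketch below applies it to a 50 mm lens on several formats. It assumes a rectilinear lens focused at infinity, and the sensor dimensions are nominal values chosen for illustration (44 × 33 mm stands in for digital medium format).

```python
import math


def angle_of_view(focal_length_mm: float, diagonal_mm: float) -> float:
    """Diagonal angle of view in degrees: 2 * atan(d / (2 f))."""
    return math.degrees(2 * math.atan(diagonal_mm / (2 * focal_length_mm)))


# Nominal sensor sizes (width, height) in mm, largest to smallest; models vary.
FORMATS = {
    "medium format (44x33)": (44.0, 33.0),
    "full frame": (36.0, 24.0),
    "APS-C": (24.0, 16.0),
    "Micro Four Thirds": (17.3, 13.0),
}

for name, (w, h) in FORMATS.items():
    d = math.hypot(w, h)  # sensor diagonal
    print(f"50 mm on {name}: {angle_of_view(50, d):.1f} degrees")
```

The same 50 mm lens covers roughly 58° on the medium format sensor but only about 24° on Micro Four Thirds. This matches the progression described in the preceding paragraph.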

Machine vision systems can consist of discrete components or be combined into a single unit, such as a smart camera that packages the functions of the discrete components together. Whether the system is discrete or integrated, its efficiency also depends on the nature of the parts being analyzed: the more consistently the parts are oriented and positioned, the better the system performs.



Machine vision plays an important role in autonomous vehicles. These vehicles use a multitude of sensors, including cameras, to perceive their surroundings, interpret road signs, recognize pedestrians, and navigate safely. Machine vision algorithms process the sensor data, enabling autonomous vehicles to make quick decisions, avoid collisions, and improve transportation systems. As the technology advances, these vehicles may also reduce accidents and congestion, reshaping the future of mobility.

The major components of a machine vision camera system are lighting, lenses, sensors, vision processing, and communications. The camera sensor converts light into a digital image, which is then sent to the processor for analysis.

In location applications, the purpose of the system is to find a physical object and determine its position and orientation. In inspection applications, the system validates certain features, such as the presence or absence of the correct label on a bottle or of chocolates in a box. In identification applications, the system reads codes and alphanumeric characters.
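One of the simplest ways to implement a presence/absence inspection is to compare the average brightness of a region of interest (ROI) against a threshold. The sketch below applies that idea to a toy grayscale image stored as nested lists. The image data, ROI coordinates, threshold, and function names are all hypothetical. Production systems typically rely on more robust tools and on controlled lighting, so this is not any particular vendor's method.

```python
from statistics import mean

Image = list[list[int]]  # grayscale rows, pixel values 0-255 (toy data)


def roi_mean(image: Image, top: int, left: int, height: int, width: int) -> float:
    """Mean intensity of a rectangular region of interest."""
    return mean(
        image[r][c]
        for r in range(top, top + height)
        for c in range(left, left + width)
    )


def label_present(image: Image, roi: tuple[int, int, int, int], threshold: float = 128) -> bool:
    """Pass if the region where a bright label should appear is brighter than the threshold."""
    return roi_mean(image, *roi) > threshold


# Hypothetical dark bottle (value 30) with a bright label (220) in rows 1-2, columns 1-3.
bottle = [[30] * 5 for _ in range(4)]
for r in (1, 2):
    for c in (1, 2, 3):
        bottle[r][c] = 220
unlabelled = [[30] * 5 for _ in range(4)]

LABEL_ROI = (1, 1, 2, 3)  # top, left, height, width
print(label_present(bottle, LABEL_ROI), label_present(unlabelled, LABEL_ROI))
```

Fixing the ROI in advance only works if parts arrive in a consistent position and orientation. This is one reason part placement affects system performance.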

Lenses with an angle of view of 35° or narrower are considered long-focus lenses. This translates to a focal length of about 70 mm and greater on full-frame cameras, and about 45 mm and longer on APS‑C cameras. It’s common for photographers to (incorrectly) refer to long-focus lenses as “telephoto” lenses. A true telephoto lens is one whose indicated focal length is longer than the physical length of its body. Due to this ubiquitous misuse of the word, there exists a further classification of long-focus lenses whose angle of view is 10° or narrower called “super telephoto” lenses (equal to or greater than 250 mm on full-frame cameras and 165 mm on APS‑C cameras). Fortunately, super telephoto lenses are more often than not actual telephoto designs. A great example is the Canon EF 800 mm f/5.6L IS USM Lens, which is only 461 mm long.
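The "true telephoto" test in this paragraph compares a lens's physical length with its focal length. The sketch below encodes that comparison. Optical designers call a related quantity the telephoto ratio, which is measured from the front element to the image plane. Using body length, as the text does, is a simplification assumed here.

```python
def telephoto_ratio(body_length_mm: float, focal_length_mm: float) -> float:
    """Physical length divided by focal length (simplified: uses body length)."""
    return body_length_mm / focal_length_mm


def is_true_telephoto(body_length_mm: float, focal_length_mm: float) -> bool:
    """True when the lens body is shorter than its indicated focal length."""
    return telephoto_ratio(body_length_mm, focal_length_mm) < 1.0


# Figures quoted in the text: an 800 mm lens whose body is 461 mm long.
print(f"{telephoto_ratio(461, 800):.2f}", is_true_telephoto(461, 800))
```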

There are two types of wide-angle lenses: rectilinear and fisheye (sometimes termed curvilinear). The vast majority of wide-angle lenses (and lenses of other focal lengths, too) are rectilinear. These lenses are designed to render the straight elements found in a scene as straight lines on the projected image. Despite this, wide-angle rectilinear lenses cause rendered objects to progressively stretch and enlarge as they approach the edges of the frame. In photography, all fisheye lenses are ultra wide-angle lenses that produce images featuring strong convex curvature. Fisheye lenses render the straight elements of a scene with a strong curvature about the centre of the frame (the lens axis). The effect is similar to looking through a door’s peephole, or the convex safety mirrors commonly placed at the blind corners of indoor parking lots and hospital corridors. Only straight lines that intersect the lens axis will be rendered as straight in images captured by fisheye lenses.

In general, a short focal length (or short focus, or “wide-angle”) lens is one whose angle of view is 65° or greater. Recall from above that angle of view is determined by both focal length and image sensor size, which means that what qualifies as “short” is predicated upon a camera’s image sensor format. Therefore, on full-frame cameras, the threshold for wide-angle lenses is 35 mm or less, and on APS‑C cameras, it’s 23 mm or less. Lenses with an angle of view of 85° or greater are called “ultra wide-angle,” which is about 24 mm or less on full-frame and 16 mm or less on APS‑C cameras.
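The angle-of-view thresholds given in this section can be collected into a single classifier: 85° and 65° for ultra wide and wide, 35° for long-focus, and 10° for super telephoto. Treating everything between 35° and 65° as "normal" is an assumption made for this sketch. The text only says normal lenses sit around the eye's roughly 55° cone of attention.

```python
def classify_by_angle(angle_deg: float) -> str:
    """Bucket a lens by its diagonal angle of view, using the thresholds in this article."""
    if angle_deg >= 85:
        return "ultra wide-angle"
    if angle_deg >= 65:
        return "wide-angle"
    if angle_deg <= 10:
        return "super telephoto"   # checked before the broader long-focus bucket
    if angle_deg <= 35:
        return "long-focus"
    return "normal"                # assumption: everything between 35 and 65 degrees


# A 50 mm lens on full frame covers roughly 47 degrees.
print(classify_by_angle(46.8))
```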


One of the easiest ways to understand a computer vision system is to think of it as the eyes of a machine. Such a system can improve quality, efficiency, and overall operations.

The focal length of a lens determines its magnifying power, which is the apparent size of your subject as projected onto the focal plane where your image sensor resides. A longer focal length corresponds to greater magnifying power and a larger rendition of your subject, and vice versa.

It’s important to understand that the degree to which the focal length magnifies an object does not depend on your camera or the size of its image sensor. Assuming a fixed subject and subject distance, every lens of the same focal length will project an image of your subject at the same scale. For example, if a 35 mm lens casts a 1.2 cm image of a person, that image will remain 1.2 cm high regardless of your camera’s sensor format. However, on a Micro Four Thirds format camera, the image of that person will fill the height of the frame, whereas it will occupy half the height of a full-frame image sensor, and about one-third the height of a medium format image sensor. As you progress from a smaller sensor to a larger one, the 1.2 cm high projection of the person remains unchanged, but it occupies a smaller part of the total frame. Therefore, although the absolute size of the image will stay constant across varying image sensor formats, its size in proportion to each image sensor format will be different.
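The example in this paragraph can be checked numerically. The 1.2 cm projection stays fixed, and only the share of the frame height changes. The sensor heights below are nominal assumptions (13 mm for Micro Four Thirds, 24 mm for full frame, 33 mm for a 44 × 33 mm medium format sensor).

```python
# Nominal sensor heights (short side) in mm; exact values vary by camera model.
SENSOR_HEIGHT_MM = {
    "Micro Four Thirds": 13.0,
    "full frame": 24.0,
    "medium format (44x33)": 33.0,
}

IMAGE_HEIGHT_MM = 12.0  # the 1.2 cm projection from the example, the same on every format

for name, height in SENSOR_HEIGHT_MM.items():
    share = IMAGE_HEIGHT_MM / height
    print(f"{name}: subject fills {share:.0%} of the frame height")
```

The results (about 92%, 50%, and 36%) correspond to "fills the frame," "half," and "about one-third" in the text.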

Human eyes can sense electromagnetic (EM) wavelengths from roughly 390 to 770 nm. Cameras, however, can respond to a much broader range of wavelengths, and some machine vision systems operate at X-ray, infrared, or ultraviolet (UV) wavelengths. Machine vision supports a computer’s ability to see.

Machine vision cameras are used in many industries, including pharmaceuticals, industrial manufacturing, semiconductors, food and beverages, electronics, automotive, and packaging and printing. Their applications include pattern recognition, location analysis, and camera-based inspection.


The relationship between the angle of view and a lens’s focal length is roughly inversely proportional from 50 mm upward on a full-frame camera. However, as the focal length grows increasingly shorter than 50 mm, that rough proportionality breaks down, and the relative rate of change in the angle of view slows. For example, halving the focal length from 100 mm to 50 mm nearly doubles the angle of view (from about 24° to 47°). Halving it from 28 mm to 14 mm widens it by only about half (from about 75° to 114°).
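Comparing two halvings of focal length shows the breakdown of proportionality on full frame. The sketch below uses the standard rectilinear formula α = 2·arctan(d / 2f), assuming focus at infinity and the 36 × 24 mm sensor. In absolute degrees the short end actually swings more. In relative terms, however, the 100 → 50 mm step nearly doubles the angle while the 28 → 14 mm step does not.

```python
import math

FULL_FRAME_DIAGONAL = math.hypot(36, 24)  # about 43.3 mm


def angle_of_view(focal_length_mm: float, diagonal_mm: float = FULL_FRAME_DIAGONAL) -> float:
    """Diagonal angle of view in degrees for a rectilinear lens: 2 * atan(d / (2 f))."""
    return math.degrees(2 * math.atan(diagonal_mm / (2 * focal_length_mm)))


for long_f, short_f in [(100, 50), (28, 14)]:
    a_long, a_short = angle_of_view(long_f), angle_of_view(short_f)
    print(f"{long_f} -> {short_f} mm: {a_long:.1f} -> {a_short:.1f} degrees "
          f"(x{a_short / a_long:.2f})")
```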

Edge Intelligence, or Edge AI, is the next big trend, moving machine learning for image recognition from the cloud to physical edge devices connected to the cameras. This is relevant for the future of machine vision applications since advances in edge computing (on-device processing) make it possible to apply deep learning capabilities in traditional computer vision tasks. For example, deep learning machine vision is useful for camera inspection systems and vision systems for quality control.


These systems have a wide range of applications across industries, automating time-consuming, repetitive jobs that would otherwise tire a human operator. They allow products or components to be examined during a process. This results in higher yields, increased quality, lower defect rates, reduced costs, and more consistent process results.

A true zoom lens, known as a parfocal lens, maintains a set focus distance across its entire focal length range. In the days before digital photography—before electronic autofocus, even—it was common practice to focus a zoom lens at its longest focal length before taking the picture at the desired (if different) focal length. This technique is no longer possible because contemporary variable focal length lenses designed for photography are almost exclusively varifocal lenses, which do not maintain set focus across their zoom range. In practice, most photographers do not know the difference because the autofocus algorithms in their cameras compensate for the slight variations.

In photography, the most essential characteristic of a lens is its focal length, which is a measurement that describes how much of the scene in front of you can be captured by the camera. Technically, the focal length is the distance between the secondary principal point (commonly and incorrectly called the optical centre) and the rear focal point, where subjects at infinity come into focus. The focal length of a lens determines two interrelated characteristics: magnification and angle of view.

Machine vision can enhance the diagnostic abilities of radiologists by precisely detecting diseases and anomalies within medical images. It can also quickly and accurately analyze vast datasets, such as MRI or CT scans, helping medical professionals make critical decisions about treatment plans and patient care. This has opened new avenues for early disease detection and personalized medicine.

It’s important to recognize that the convenience and flexibility of zoom lenses can inspire lazy photography. The ease of changing the angle of view encourages photographers to settle on compositions that are good-enough, instead of seeking out better perspectives and gaining a deeper understanding of their subjects. Whatever lens you have, be it zoom or prime, it’s vital for the development of good photography to consider your subject from several perspectives by walking towards, stepping away, and circling around them.


Machine vision systems, also called vision inspection systems or automated vision systems, are built from a set of parts common to most such systems. Each part has its own function, but each plays its particular role in the system only when working together with the others.

Subject size is directly proportional to the focal length of the lens. For example, suppose you photograph a soccer player kicking a ball and then switch to a lens with twice the focal length. Every element in your image, from the player to the ball, will double in its linear dimensions.
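The proportionality holds closely for subjects that are far away compared with the focal length. The sketch below uses the thin-lens magnification m = f / (u − f), an idealized model assumed here. Real lenses deviate from it, especially at close focus. The scene values are hypothetical.

```python
def image_height_mm(subject_height_mm: float, subject_distance_mm: float,
                    focal_length_mm: float) -> float:
    """Projected image height from the thin-lens magnification m = f / (u - f)."""
    if subject_distance_mm <= focal_length_mm:
        raise ValueError("subject must be farther away than one focal length")
    magnification = focal_length_mm / (subject_distance_mm - focal_length_mm)
    return subject_height_mm * magnification


# Hypothetical scene: a 1.8 m player 20 m away, shot at 100 mm and then at 200 mm.
h100 = image_height_mm(1800, 20_000, 100)
h200 = image_height_mm(1800, 20_000, 200)
print(f"{h100:.2f} mm -> {h200:.2f} mm (x{h200 / h100:.3f})")
```

At 20 m the ratio is within about 1% of exactly 2. At macro distances, where the subject distance is only a few focal lengths, the same doubling would magnify by noticeably more than 2x.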

Machine vision systems are designed to find defects in manufactured products, assess their dimensional precision, and ensure component integrity. These systems depend on advanced image processing algorithms and high-resolution cameras to analyze products in real time, ensuring that the products meet stringent quality standards. Such applications reduce production costs, minimize waste, and improve product quality.

For any given camera system, normal lenses are generally the “fastest” available. Adjectives such as “fast” and “slow” always describe lens speed, which refers to a lens’ maximum aperture opening. For instance, a lens with a ƒ/2 or larger aperture is generally considered fast; a lens with a ƒ/5.6 or smaller aperture is deemed to be slow. How is speed relevant to aperture? Recall the reciprocity law: larger apertures permit more light into the camera, thereby allowing you to use faster shutter speeds, and vice versa.
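The reciprocity law can be made concrete. Exposure is proportional to t / N², so changing the f-number from N₁ to N₂ requires multiplying the shutter time by (N₂/N₁)² to keep the same exposure. The f/2 versus f/5.6 comparison at 1/250 s below is a hypothetical illustration.

```python
def equivalent_shutter_time(time_s: float, f_number_from: float, f_number_to: float) -> float:
    """Shutter time that gives the same exposure after changing the aperture.

    Exposure is proportional to t / N**2, so t scales with the square of the
    f-number ratio.
    """
    return time_s * (f_number_to / f_number_from) ** 2


# A fast f/2 lens at 1/250 s, compared with a slow f/5.6 lens at equal exposure.
t = equivalent_shutter_time(1 / 250, 2.0, 5.6)
print(f"f/5.6 needs about 1/{1 / t:.0f} s")
```

The slower lens needs roughly 1/32 s for the same exposure, about eight times longer. That gap is why fast lenses matter for freezing motion in dim light.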
